1. Since upgrading to PVE 9, IP communication is broken on all VMs and CTs (they can't reach 10.26.0.1).

-- Untouched PVE 8.4.5 clusters on the same subnet work well, including their VMs/CTs.

2. The same LAN works well on the PVE hosts themselves (they can reach the gateway 10.26.0.1).

3. I did use the name-pinning tool (see below).

4. I get some FRR errors, despite never having used FRR before.

5. I attached the pve-reports of all 3 nodes.

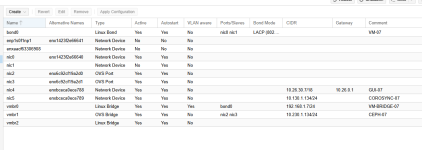

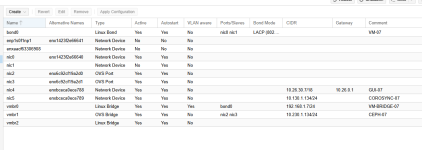

I also tried the proxmox-network-interface-pinning tool and it works great. It does include ports that are currently down as well (nic1 = created), but the down port (the old NIC's alternative name) still shows up in the web UI, although it is NOT present in the /etc/network/interfaces file; its Alternative-Name is enxaacf63306908. This might lead to confusion?

Code:

    root@PMX7:~# ip a | grep DOWN
    2: nic0: <NO-CARRIER,BROADCAST,MULTICAST,SLAVE,UP> mtu 1500 qdisc mq master bond0 state DOWN group default qlen 1000
    5: enp1s0f1np1: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000
    8: ovs-system: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000
    12: bond0: <NO-CARRIER,BROADCAST,MULTICAST,MASTER,UP> mtu 1500 qdisc noqueue master vmbr0 state DOWN group default qlen 1000

    root@PMX7:~# lshw -c network -businfo
    Bus info          Device       Class    Description
    ==========================================================
    pci@0000:01:00.0  nic0         network  BCM57416 NetXtreme-E Dual-Media 10G RDMA Ethernet Controller
    pci@0000:01:00.1  enp1s0f1np1  network  BCM57416 NetXtreme-E Dual-Media 10G RDMA Ethernet Controller
    pci@0000:41:00.0  nic4         network  I350 Gigabit Network Connection
    pci@0000:41:00.1  nic5         network  I350 Gigabit Network Connection
    pci@0000:e1:00.0  nic2         network  BCM57508 NetXtreme-E 10Gb/25Gb/40Gb/50Gb/100Gb/200Gb Ethernet
    pci@0000:e1:00.1  nic3         network  BCM57508 NetXtreme-E 10Gb/25Gb/40Gb/50Gb/100Gb/200Gb Ethernet
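To see which alternative names the kernel still holds for each port (which is presumably where the extra web-UI entry comes from), something like the following could help. It is only a rough sketch: `list_altnames` is a helper name I made up, and it assumes the usual `ip link show` layout where each `altname` sits on its own line below the interface it belongs to.

```shell
#!/bin/sh
# Hypothetical helper: print "<interface> <altname>" pairs.
# Reads `ip link show` style output on stdin, so it can also be fed a saved dump.
list_altnames() {
    awk '
        # Header line such as "2: nic0: <...>": remember the interface name.
        /^[0-9]+: / { name = $2; sub(/:$/, "", name); sub(/@.*/, "", name) }
        # Continuation line such as "    altname enp1s0f0np0".
        $1 == "altname" { print name, $2 }
    '
}

# Usage on a live host:
#   ip link show | list_altnames
```

Each output line pairs the current name with one alternative name, which makes it easier to tell whether an entry like enxaacf63306908 is a separate device or just a second name for a pinned port.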

Edit: we ran into an issue; it seems nic1 was not created correctly?

Code:

    Removed '/etc/systemd/system/multi-user.target.wants/dnsmasq@zNB.service'.
    Created symlink '/etc/systemd/system/multi-user.target.wants/dnsmasq@zNB.service' -> '/usr/lib/systemd/system/dnsmasq@.service'.
    bond0 : error: bond0: skipping slave nic1, does not exist
    TASK ERROR: command 'ifreload -a' failed: exit code 1

Different node, same test-cluster.

Code:

    Removed '/etc/systemd/system/multi-user.target.wants/dnsmasq@zNB.service'.
    Created symlink '/etc/systemd/system/multi-user.target.wants/dnsmasq@zNB.service' -> '/usr/lib/systemd/system/dnsmasq@.service'.
    nic1 : warning: nic1: interface not recognized - please check interface configuration
    TASK ERROR: daemons file does not exist at /usr/share/perl5/PVE/Network/SDN/Frr.pm line 133.
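Since both errors point at nic1 being referenced but not existing, a quick cross-check of the config against the kernel's view might narrow it down. This is a minimal sketch, not a Proxmox tool: `missing_ports` is a made-up helper, it only looks at `bond-slaves` and `bridge-ports` lines in a single file (no OVS options, no included files), and it assumes `/sys/class/net` lists every interface the kernel knows.

```shell
#!/bin/sh
# Hypothetical helper: report ports named in bond-slaves / bridge-ports lines
# that have no entry in the sysfs net directory.
#   $1 = interfaces file (default: /etc/network/interfaces)
#   $2 = sysfs net directory (default: /sys/class/net)
missing_ports() {
    cfg=${1:-/etc/network/interfaces}
    sysnet=${2:-/sys/class/net}
    # Collect every port name listed after the two keywords, one per line.
    awk '$1 == "bond-slaves" || $1 == "bridge-ports" {
             for (i = 2; i <= NF; i++) print $i
         }' "$cfg" | sort -u | while read -r ifname; do
        # "none" is a placeholder for "no ports", not an interface name.
        [ "$ifname" = "none" ] && continue
        [ -e "$sysnet/$ifname" ] || echo "missing: $ifname"
    done
}

# Usage on a live host:
#   missing_ports
```

If nic1 really was not created, it should be reported here, and the lshw output above (enp1s0f1np1 still under its old name on pci@0000:01:00.1) would be the first place I'd look for the port that was meant to become nic1.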