Hi, heroes

I encountered a problem, and it has been happening more frequently lately.

**Hardware:**

Proxmox VE (PVE) version 9.0.3

2 × Xeon E5-2640 v4, 128 GB RAM, and 2 SSDs

**Symptoms**

The server becomes unresponsive at random intervals, anywhere from 1 to 7 days apart.

When it happens, ping still works, but SSH, keyboard input, and web access are all blocked.

No errors are logged.

However, all virtual machines keep running completely normally.

**Attempts**

Updated intel-microcode.

Disabled C3/C6 in the motherboard BIOS.

Set intel_idle.max_cstate=1 in the GRUB configuration file.

Updated the kernel.

None of this brought any improvement.
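To rule out the max_cstate setting simply not being applied, a check along these lines could be run on the host after a reboot. This is only a minimal sketch: the helper names `parse_cmdline` and `max_cstate_applied` are made up for illustration, and it assumes the parameter should show up on the running kernel command line in `/proc/cmdline`.

```python
from pathlib import Path


def parse_cmdline(cmdline):
    """Split a kernel command line into {parameter: value}.

    Flags without '=' (for example 'quiet') map to None.
    """
    params = {}
    for token in cmdline.split():
        key, sep, value = token.partition("=")
        params[key] = value if sep else None
    return params


def max_cstate_applied(cmdline, expected="1"):
    """Return True if intel_idle.max_cstate is set to the expected value."""
    return parse_cmdline(cmdline).get("intel_idle.max_cstate") == expected


if __name__ == "__main__":
    proc_cmdline = Path("/proc/cmdline")  # command line of the running kernel
    if proc_cmdline.exists():
        running = proc_cmdline.read_text()
        print("intel_idle.max_cstate=1 active:", max_cstate_applied(running))
```

If this prints `False`, the change to the GRUB file probably never reached the running kernel, for example because the boot configuration was not regenerated or the host boots through a different loader.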

Please help me, my heroes!

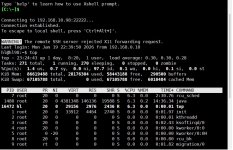

In the log file, the last entry is at Jan 17 16:22:11. After that the server froze and nothing more was logged.

PVE server IP: 192.168.0.100

IP of one of the PVE VMs: 192.168.0.98
