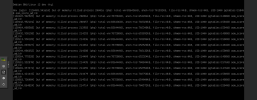

# Memory=6144

# Min memory=2048

# Ballooning=on

root@dev:~# lshw -c memory

*-firmware

description: BIOS

vendor: SeaBIOS

physical id: 0

version: rel-1.16.3-0-ga6ed6b701f0a-prebuilt.qemu.org

date: 04/01/2014

size: 96KiB

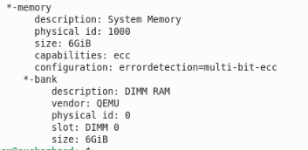

*-memory

description: System Memory

physical id: 1000

slot: (empty in the original output; lshw's French translation printed its gettext PO header here)

size: 6GiB

capabilities: ecc

configuration: errordetection=multi-bit-ecc

*-bank

description: DIMM RAM

vendor: QEMU

physical id: 0

slot: DIMM 0

size: 6GiB

root@dev:~# free -h

total used free shared buff/cache available

Mem: 5.4Gi 836Mi 4.4Gi 145Mi 512Mi 4.6Gi

Swap: 0B 0B 0B

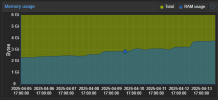

> The total goes down over time.
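One way to check this observation is to sample `MemTotal` from `/proc/meminfo` inside the guest at regular intervals. The sketch below is an assumption about how this could be measured, not something that was run for these notes; the function names `parse_mem_total_kib` and `sample_mem_total` are hypothetical, and the 60-second interval is arbitrary.

```python
import time


def parse_mem_total_kib(meminfo_text: str) -> int:
    """Return the MemTotal value (in KiB) from /proc/meminfo-style text."""
    for line in meminfo_text.splitlines():
        if line.startswith("MemTotal:"):
            # The line looks like: "MemTotal:        6063452 kB"
            return int(line.split()[1])
    raise ValueError("MemTotal not found")


def sample_mem_total(samples: int, interval_s: float,
                     path: str = "/proc/meminfo") -> list[int]:
    """Read MemTotal `samples` times, sleeping `interval_s` seconds between reads."""
    readings = []
    for i in range(samples):
        with open(path) as f:
            readings.append(parse_mem_total_kib(f.read()))
        if i < samples - 1:
            time.sleep(interval_s)
    return readings


if __name__ == "__main__":
    # Hypothetical run: five readings, one minute apart.
    for kib in sample_mem_total(samples=5, interval_s=60):
        print(f"MemTotal = {kib / 1024**2:.2f} GiB")
```

If the readings shrink from one sample to the next while ballooning is on, that matches the note above; constant readings would suggest the balloon had already settled.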

**************************************************************************************

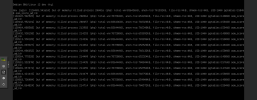

# Memory=6144

# Min memory=2048

# Ballooning=off

root@dev:~# lshw -c memory

*-firmware

description: BIOS

vendor: SeaBIOS

physical id: 0

version: rel-1.16.3-0-ga6ed6b701f0a-prebuilt.qemu.org

date: 04/01/2014

size: 96KiB

*-memory

description: System Memory

physical id: 1000

slot: (empty in the original output; lshw's French translation printed its gettext PO header here)

size: 6GiB

capabilities: ecc

configuration: errordetection=multi-bit-ecc

*-bank

description: DIMM RAM

vendor: QEMU

physical id: 0

slot: DIMM 0

size: 6GiB

root@dev:~# free -h

total used free shared buff/cache available

Mem: 5.8Gi 819Mi 4.8Gi 145Mi 512Mi 5.0Gi

Swap: 0B 0B 0B

**************************************************************************************

# Memory=6144

# Min memory=6144

# Ballooning=on

root@dev:~# lshw -c memory

*-firmware

description: BIOS

vendor: SeaBIOS

physical id: 0

version: rel-1.16.3-0-ga6ed6b701f0a-prebuilt.qemu.org

date: 04/01/2014

size: 96KiB

*-memory

description: System Memory

physical id: 1000

slot: (empty in the original output; lshw's French translation printed its gettext PO header here)

size: 6GiB

capabilities: ecc

configuration: errordetection=multi-bit-ecc

*-bank

description: DIMM RAM

vendor: QEMU

physical id: 0

slot: DIMM 0

size: 6GiB

root@dev:~# free -h

total used free shared buff/cache available

Mem: 5.8Gi 817Mi 4.8Gi 145Mi 507Mi 5.0Gi

Swap: 0B 0B 0B
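The three `free -h` totals can be set against the configured 6144 MiB with a little arithmetic. The sketch below only uses the figures recorded above; the run labels and the helper name `shortfall_gib` are my own, and because `free -h` rounds to one decimal the differences are approximate.

```python
CONFIGURED_GIB = 6144 / 1024  # Memory=6144 (MiB) in all three runs

# Totals reported by `free -h` in each run, in GiB (rounded by free itself).
runs = {
    "min=2048, ballooning=on": 5.4,
    "min=2048, ballooning=off": 5.8,
    "min=6144, ballooning=on": 5.8,
}


def shortfall_gib(total_gib: float) -> float:
    """Gap between the configured memory and the total the guest reports."""
    return round(CONFIGURED_GIB - total_gib, 1)


if __name__ == "__main__":
    for label, total in runs.items():
        print(f"{label}: total {total} GiB, shortfall {shortfall_gib(total)} GiB")
```

Two runs sit about 0.2 GiB below the configured size, while the run with ballooning on and a 2048 minimum sits about 0.6 GiB below. The extra 0.4 GiB or so is consistent with the balloon having taken memory back from the guest, although these notes do not confirm the cause.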