I know this has been discussed in multiple places, many times over, but I am trying to get a sense of whether my experience is way beyond the norm.

I have been running Proxmox for 2 years now in a homelab setting. I have about a dozen or so VMs consisting of pfSense, Home Assistant, TrueNAS, Ubuntu Server, some LXCs, and a dozen Docker containers: a typical homelab use case. I am running a pair of 500GB 980s (non-Pro) in a ZFS mirror as my Proxmox boot drive, and I store the VMs on the boot drives as well, so all of the Proxmox logs and VMs are being written to the same pair of 980s.

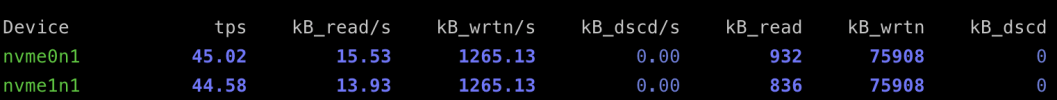

That said, if I am reading this correctly, I have done 135 TB of writes in 2 years, which seems unfathomable. Am I reading this correctly? I am also not sure why I am seeing some data integrity errors. I should probably look into replacing this drive soon (or monitor it to see if the number goes up, and if not, maybe just let it be?).
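To put that number in perspective, here is a rough back-of-the-envelope sketch of what 135 TB over 2 years works out to as an average write rate. It assumes decimal units (1 TB = 1000 GB) and 365-day years. The `data_units_to_tb` helper is only there in case the raw SMART counter is what you are looking at: as far as I understand, NVMe drives report "Data Units Written" in units of 1000 × 512 bytes, but I have not confirmed that this is what the screenshot shows. The function names are just ones I made up for this example.

```python
# Assumption: NVMe "Data Units Written" counts units of 1000 * 512 bytes.
BYTES_PER_DATA_UNIT = 512_000


def data_units_to_tb(units: int) -> float:
    """Convert a raw NVMe data-unit counter to decimal terabytes."""
    return units * BYTES_PER_DATA_UNIT / 1e12


def average_write_rate(total_tb: float, years: float) -> tuple[float, float]:
    """Return the average write rate as (GB per day, MB per second)."""
    days = years * 365
    gb_per_day = total_tb * 1000 / days
    mb_per_sec = gb_per_day * 1000 / 86_400  # 86,400 seconds in a day
    return gb_per_day, mb_per_sec


gb_per_day, mb_per_sec = average_write_rate(135, 2)
print(f"{gb_per_day:.0f} GB/day, {mb_per_sec:.2f} MB/s")
# prints "185 GB/day, 2.14 MB/s"
```

So the figure corresponds to roughly 185 GB per day, or a bit over 2 MB/s sustained around the clock, averaged over the whole period.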

After seeing this, I went looking for a solution, and this morning I set the `Storage=volatile` option in `/etc/systemd/journald.conf`, hoping that will help going forward. I wasn't comfortable modifying anything beyond that, as the other options seemed slightly more invasive and less benign. I did come across a GitHub page where someone has a mod that writes all logs to RAM and then dumps them to disk at a set interval, which seems like a plausible option, but I wanted to check in here first to see if this seems normal or just totally crazy. 135 TB in 2 years seems crazy to me... Honestly, even 12.4 TB of reads seems impossible.