Hi,

To start, I would not recommend that people use this to cobble together PVE with a remote cluster as iSCSI storage for VMs. In our case we have a secondary cluster that used to host a multi-tenant, internet-based backup service; it consists of 6 servers with 310 TiB available to Ceph. We now use this cluster for daily incremental Ceph backups of VMs in other clusters, using Benji (https://benji-backup.me/) to deduplicate, compress and encrypt 4 MiB data chunks and then store them in Ceph via the S3-compatible RADOS Gateway (rgw) service. Benji uses rolling snapshots so that it is essentially told which data has changed on the images being backed up, so there is no scanning of drives (it has its own daily data verification logic), and it is multi-threaded in that multiple 4 MiB data chunks can be processed simultaneously. This works so well that backups require only a fraction of the resources and the required storage space is minuscule. The best thing is that this cluster has zero load during standard office hours.
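On the Ceph side, "being told what changed" between two snapshots is normally done with `rbd diff`. The following is only a rough sketch of one incremental cycle, not our actual backup script: it prints the commands rather than running them, and the pool, image and snapshot names are made-up placeholders.

```shell
#!/bin/sh
# Sketch only: prints the rbd commands for one incremental cycle instead of running them.
# Pool, image and snapshot names are hypothetical placeholders.
print_incremental_cycle() {
    pool=$1; image=$2; prev_snap=$3; new_snap=$4
    # 1. Take a new snapshot to freeze a consistent point in time.
    echo "rbd snap create ${pool}/${image}@${new_snap}"
    # 2. Ask Ceph which objects changed since the previous snapshot.
    echo "rbd diff --whole-object ${pool}/${image}@${new_snap} --from-snap ${prev_snap} --format=json"
    # 3. Once the backup has completed, the older snapshot is no longer needed.
    echo "rbd snap rm ${pool}/${image}@${prev_snap}"
}

print_incremental_cycle rbd_ssd vm-169-disk-0 backup-old backup-new
```

The JSON produced by the second command is what a backup tool can consume as a list of changed regions, which is why no full scan of the image is needed.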

Right, so after a year we now store 31 daily, 12 weekly and 12 monthly backups of images from other Proxmox Ceph clusters. Projecting this forward for 3 years, with a surprisingly linear growth in space used, means that we can safely make 200 TiB available for sale to customers. Each of the 6 nodes takes 12 x 3.5 inch discs and has the OS installed on a relatively small software RAID partition across 2 separate SSDs. The remaining space on the SSDs is used for Ceph OSDs, which provide a flash-only data pool and cache tiering pools for the erasure coded pools on rotational media (k=3, m=2). All drives are distributed identically across the nodes and essentially come from repurposed equipment. We can subsequently upgrade storage easily by replacing the ancient 2 TiB spinners with much larger discs.

PS: We run ec32 and not ec42 because we want to sustain the failure of an entire node and still have Ceph able to heal itself afterwards, so that it can again tolerate the failure of a second node before the first is repaired.
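The reasoning behind that choice can be checked with simple arithmetic. The sketch below assumes the failure domain is the host, so each of the k+m chunks of an object lives on a different node. The pool can only rebuild lost chunks if the surviving nodes still number at least k+m. With ec42 on 6 nodes the data would stay readable after a node failure, but it would remain degraded until the node returns.

```shell
#!/bin/sh
# Sketch: with one chunk per host (failure domain = host, an assumption),
# lost chunks can only be re-created if enough hosts survive to hold all k+m chunks.
can_self_heal() {
    k=$1; m=$2; hosts=$3; failed=$4
    if [ $((hosts - failed)) -ge $((k + m)) ]; then
        echo yes
    else
        echo no
    fi
}

# Usable fraction of raw capacity for a k+m profile, as a whole percentage (rounded down).
usable_percent() {
    echo $(( 100 * $1 / ($1 + $2) ))
}

echo "ec32 on 6 hosts, 1 failed: heal=$(can_self_heal 3 2 6 1), usable=$(usable_percent 3 2)%"
echo "ec42 on 6 hosts, 1 failed: heal=$(can_self_heal 4 2 6 1), usable=$(usable_percent 4 2)%"
```

So ec32 gives up a little usable capacity compared to ec42 in exchange for being able to return to full redundancy on the 5 remaining nodes.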

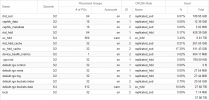

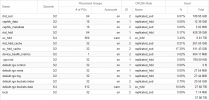

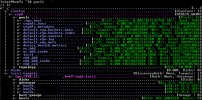

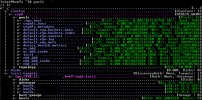

Herewith a sample of the OSD placements:

| ID | CLASS | WEIGHT | REWEIGHT | SIZE | RAW USE | DATA | OMAP | META | AVAIL | %USE | VAR | PGS | STATUS |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 100 | hdd | 1.81898 | 1.00000 | 1.8 TiB | 236 GiB | 234 GiB | 4.3 MiB | 1.9 GiB | 1.6 TiB | 12.66 | 0.99 | 46 | up |
| 101 | hdd | 1.81898 | 1.00000 | 1.8 TiB | 257 GiB | 255 GiB | 3.8 MiB | 2.2 GiB | 1.6 TiB | 13.79 | 1.08 | 51 | up |
| 102 | hdd | 5.45799 | 1.00000 | 5.5 TiB | 707 GiB | 702 GiB | 10 MiB | 5.1 GiB | 4.8 TiB | 12.65 | 0.99 | 141 | up |
| 103 | hdd | 5.45799 | 1.00000 | 5.5 TiB | 702 GiB | 697 GiB | 7.7 MiB | 5.1 GiB | 4.8 TiB | 12.56 | 0.99 | 139 | up |
| 104 | hdd | 9.09599 | 1.00000 | 9.1 TiB | 1.1 TiB | 1.1 TiB | 8.0 MiB | 7.5 GiB | 8.0 TiB | 12.43 | 0.98 | 228 | up |
| 105 | hdd | 9.09599 | 1.00000 | 9.1 TiB | 1.1 TiB | 1.1 TiB | 7.4 MiB | 7.7 GiB | 8.0 TiB | 12.42 | 0.98 | 225 | up |
| 106 | hdd | 9.09599 | 1.00000 | 9.1 TiB | 1.1 TiB | 1.1 TiB | 4.6 MiB | 7.6 GiB | 8.0 TiB | 12.47 | 0.98 | 235 | up |
| 107 | hdd | 9.09599 | 1.00000 | 9.1 TiB | 1.1 TiB | 1.1 TiB | 2.3 MiB | 7.5 GiB | 8.0 TiB | 12.35 | 0.97 | 224 | up |
| 10100 | ssd | 0.37700 | 1.00000 | 386 GiB | 113 GiB | 110 GiB | 2.5 GiB | 846 MiB | 273 GiB | 29.27 | 2.30 | 51 | up |
| 10101 | ssd | 0.37700 | 1.00000 | 386 GiB | 105 GiB | 103 GiB | 1.4 GiB | 958 MiB | 281 GiB | 27.28 | 2.14 | 55 | up |

Our clusters that host customer workloads consist purely of flash with 3-way RBD replication, so they aren't cheap but performance is excellent. Having redundant iSCSI connectivity would be really useful in some scenarios where we host physical equipment for customers. We have some benchmark data from running a 2 vCPU Windows 2012r2 VM against multi-path (active/backup) ceph-iscsi gateways and it really doesn't look bad. This is intended as a slow bulk storage tier for some customers that have essentially moved their on-premise file servers into the cloud before transitioning to something like SharePoint. We intend to access RBD images in this cluster directly via external-ceph from the current production clusters in the same data centre, and will most probably use this very sparingly.

We have the following Ceph pools:

At the time of writing, PVE 6.3 is layered on top of Debian 10.7, with Proxmox's Ceph Octopus packages at 15.2.8. We installed the ceph-iscsi package from the Debian Buster (10) backports repository and got python3-rtslib-fb_2.1.71 from Debian Sid.

The following are my speed notes; you'll want to review the content at the following locations as part of setting this up:

- https://github.com/ceph/ceph-iscsi/

- https://docs.ceph.com/en/latest/rbd/iscsi-target-cli-manual-install/

- https://access.redhat.com/documenta...way#the_iscsi_initiator_for_microsoft_windows

PS: We tested everything using real wildcard certificates so others may need to turn off SSL verification.

Herewith my notes:

Code:

# Ceph iSCSI stores configuration metadata in a rbd pool. We typically rename the default 'rbd' pool as

# rbd_hdd or rbd_ssd so we create a pool to hold the config and the images. We'll create the images manually

# to place the actual data in the ec_hdd data pool:

ceph osd pool create iscsi 8 replicated replicated_ssd;

ceph osd pool application enable iscsi rbd

# Adjust Ceph OSD timers:

# Default:

# osd_client_watch_timeout: 30

# osd_heartbeat_grace : 20

# osd_heartbeat_interval : 6

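# NB: The loop below only prints the commands for review; run the printed lines to apply the settings:

for setting in 'osd_client_watch_timeout 15' 'osd_heartbeat_grace 20' 'osd_heartbeat_interval 5'; do echo ceph config set osd "$setting"; done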

#for setting in 'osd_client_watch_timeout 15' 'osd_heartbeat_grace 20' 'osd_heartbeat_interval 5'; do echo ceph tell osd.* injectargs "--$setting"; done

for OSD in 100; do for setting in osd_client_watch_timeout osd_heartbeat_grace osd_heartbeat_interval; do ceph daemon osd.$OSD config get $setting; done; done

iscsi_api_passwd=`cat /dev/urandom | tr -dc 'A-Za-z0-9' | fold -w 32 | head -n 1`;

echo $iscsi_api_passwd;

echo -e "[config]\ncluster_name = ceph\npool = iscsi\ngateway_keyring = ceph.client.admin.keyring\napi_secure = true\napi_user = admin\napi_password = $iscsi_api_passwd" > /etc/pve/iscsi-gateway.cfg;
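For readability, the same file can also be written with a heredoc. This is just a sketch of an equivalent way to produce the `[config]` section shown above; the function name, the temporary path and the example password are placeholders for illustration only.

```shell
#!/bin/sh
# Sketch: write the same [config] section with a heredoc instead of echo -e.
# The function name, target path and password used below are placeholders.
write_gateway_cfg() {
    cfg_path=$1; api_password=$2
    cat > "$cfg_path" <<EOF
[config]
cluster_name = ceph
pool = iscsi
gateway_keyring = ceph.client.admin.keyring
api_secure = true
api_user = admin
api_password = $api_password
EOF
}

# Demonstrate against a temporary file rather than /etc/pve/iscsi-gateway.cfg.
tmp_cfg=$(mktemp)
write_gateway_cfg "$tmp_cfg" "example-password"
cat "$tmp_cfg"
rm -f "$tmp_cfg"
```

In real use the first argument would be /etc/pve/iscsi-gateway.cfg and the second "$iscsi_api_passwd".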

# Ceph Dashboard:

ceph dashboard iscsi-gateway-list;

echo "ceph dashboard iscsi-gateway-add https://admin:$iscsi_api_passwd@kvm7a.fqdn:5000 kvm7a.fqdn" | sh;

echo "ceph dashboard iscsi-gateway-add https://admin:$iscsi_api_passwd@kvm7b.fqdn:5000 kvm7b.fqdn" | sh;

echo "ceph dashboard iscsi-gateway-add https://admin:$iscsi_api_passwd@kvm7e.fqdn:5000 kvm7e.fqdn" | sh;

#ceph dashboard iscsi-gateway-rm kvm7a.fqdn;

#ceph dashboard set-iscsi-api-ssl-verification false;

# Testing communications to api:

# curl --user admin:******************************** -X GET https://kvm7a.fqdn:5000/api/_ping;

# Add --insecure to skip x509 SSL certificate verification
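To run that ping test against every gateway in one go, something along these lines should work. It is a sketch under a few assumptions: the helper name `check_gateways` and the `CURL` override variable are my own inventions, and the API is assumed to listen on port 5000 with the `/api/_ping` endpoint used in the curl test above.

```shell
#!/bin/sh
# Sketch: ping the rbd-target-api on every gateway and report the result per host.
# CURL can be overridden (e.g. CURL="curl --insecure") when certificates cannot be verified.
CURL=${CURL:-curl}

check_gateways() {
    api_user=$1; api_password=$2; shift 2
    for gw in "$@"; do
        # --fail makes curl exit non-zero on HTTP errors such as 401 or 500.
        if $CURL --silent --fail --max-time 5 --user "${api_user}:${api_password}" \
                -X GET "https://${gw}:5000/api/_ping" >/dev/null 2>&1; then
            echo "${gw}: ok"
        else
            echo "${gw}: FAILED"
        fi
    done
}

# Example (not run here):
#   check_gateways admin "$iscsi_api_passwd" kvm7a.fqdn kvm7b.fqdn kvm7e.fqdn
```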

# On each node:

apt-get update; apt-get -y dist-upgrade; apt-get autoremove; apt-get autoclean;

pico /etc/apt/sources.list;

# Add the Backports repository by appending the following line to the file:

deb http://deb.debian.org/debian buster-backports main contrib non-free

# Packages from the Backports repository do not unnecessarily replace packages so you

# have to specify the repository when wanting to install packages. For example:

# apt-get -t buster-backports install "package"

apt-get -t buster-backports install ceph-iscsi;

wget http://debian.mirror.ac.za/debian/pool/main/p/python-rtslib-fb/python3-rtslib-fb_2.1.71-3_all.deb;

dpkg -i python3-rtslib-fb_2.1.71-3_all.deb && rm -f python3-rtslib-fb_2.1.71-3_all.deb;

ln -s /etc/pve/iscsi-gateway.cfg /etc/ceph/iscsi-gateway.cfg;

ln -s /etc/pve/local/pve-ssl.pem /etc/ceph/iscsi-gateway.crt;

ln -s /etc/pve/local/pve-ssl.key /etc/ceph/iscsi-gateway.key;

systemctl status rbd-target-gw;

systemctl status rbd-target-api;

Notes:

Create iSCSI Target:

gwcli

/iscsi-targets create iqn.1998-12.reversefqdn.kvm7:ceph-iscsi

goto gateways

create kvm7a.fqdn 198.19.35.2 skipchecks=true

create kvm7b.fqdn 198.19.35.3 skipchecks=true

create kvm7e.fqdn 198.19.35.6 skipchecks=true

Pre-Create Disk and define it under a new client host:

rbd create iscsi/vm-169-disk-2 --data-pool ec_hdd --size 500G;

# ^ values to adjust: image name (vm-169-disk-2), data pool (ec_hdd) and size (500)

gwcli

/disks/ create pool=iscsi image=vm-169-disk-2 size=500g

# ^ values to adjust: image name (vm-169-disk-2) and size (500)

# NB: This command creates a new RBD image in the pool if it doesn't exist yet, so pre-create the image to place its data in an erasure coded pool

/iscsi-targets/iqn.1998-12.reversefqdn.kvm7:ceph-iscsi/hosts

create iqn.1991-05.com.microsoft:win-test

auth username=ceph-iscsi password=******************************** # cat /dev/urandom | tr -dc 'A-Za-z0-9' | fold -w 32 | head -n 1

disk add iscsi/vm-169-disk-2

# ^ value to adjust: pool/image (iscsi/vm-169-disk-2)
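The `rbd create ... --data-pool ec_hdd` step above presumes that an erasure coded pool named ec_hdd already exists and accepts RBD data. How that pool was created is not part of these notes, so the following is only a sketch of one plausible way to do it. It prints the commands rather than running them; the profile name `ec32_hdd` and the PG count of 64 are hypothetical, while k=3, m=2 and the pool name follow the post.

```shell
#!/bin/sh
# Sketch: print (not run) the commands that would prepare an erasure coded data pool for RBD.
# The profile name and PG count are hypothetical; k, m and the pool name follow the post.
print_ec_pool_setup() {
    pool=$1; profile=$2; pg_num=$3
    echo "ceph osd erasure-code-profile set ${profile} k=3 m=2 crush-failure-domain=host crush-device-class=hdd"
    echo "ceph osd pool create ${pool} ${pg_num} ${pg_num} erasure ${profile}"
    # RBD needs partial overwrites, which erasure coded pools only allow when enabled explicitly.
    echo "ceph osd pool set ${pool} allow_ec_overwrites true"
    echo "ceph osd pool application enable ${pool} rbd"
}

print_ec_pool_setup ec_hdd ec32_hdd 64
```

The image metadata still lives in the replicated iscsi pool; only the data objects land in the erasure coded pool.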

Restart the Ceph iSCSI API service if it was disabled after repeatedly failing before the node joined the cluster:

systemctl stop rbd-target-api;

systemctl reset-failed;

systemctl start rbd-target-api;

systemctl status rbd-target-api;

Connecting Windows (tested with 2012r2, although the documentation indicates that 2016 is required):

https://access.redhat.com/documentation/en-us/red_hat_ceph_storage/3/html/block_device_guide/using_an_iscsi_gateway#the_iscsi_initiator_for_microsoft_windows

Increase disk's kernel cache:

gwcli

/disks/iscsi/vm-169-disk-2

info

reconfigure max_data_area_mb 128

Delete client:

gwcli

/iscsi-targets/iqn.1998-12.reversefqdn.kvm7:ceph-iscsi/hosts

delete iqn.1991-05.com.microsoft:win-test

/iscsi-targets/iqn.1998-12.reversefqdn.kvm7:ceph-iscsi/disks

delete disk=iscsi/vm-169-disk-2

/disks

#delete image_id=iscsi/vm-169-disk-2 # NB: This deletes the RBD image! Rename it before deleting to keep the image

# eg: rbd mv iscsi/vm-169-disk-2 iscsi/vm-169-disk-2_KEEP

# gwcli /disks delete image_id=iscsi/vm-169-disk-2

Dump config:

gwcli export copy

Debug:

tail -f /var/log/rbd-target-api/rbd-target-api.log

The Ceph Dashboard integration isn't necessary but it is pretty:

Quick speed notes on how to set up the Ceph Dashboard:

Code:

ceph mgr module enable dashboard;

#ceph dashboard create-self-signed-cert;

ceph dashboard set-ssl-certificate -i /etc/pve/local/pve-ssl.pem;

ceph dashboard set-ssl-certificate-key -i /etc/pve/local/pve-ssl.key;

ceph dashboard ac-user-create admin ***************** administrator

# URL eg: https://kvm7a.fqdn:8443/

#ceph config set mgr mgr/dashboard/ssl true;

Update: Forgot to include a snippet of the gwcli which represents the pools in a pretty way with loads of information:
