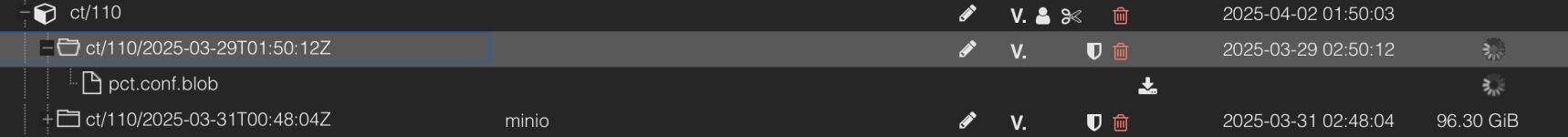

So I configured my PBS to push-sync to a remote location. This worked, and two of the three VM backups were pushed fine. However, one of them failed at `pct.conf.blob`, as seen on the receiving end:

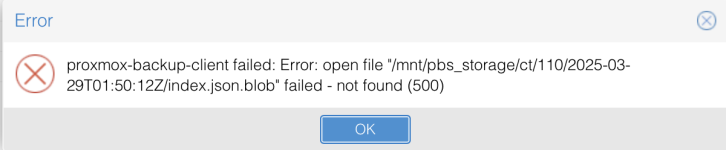

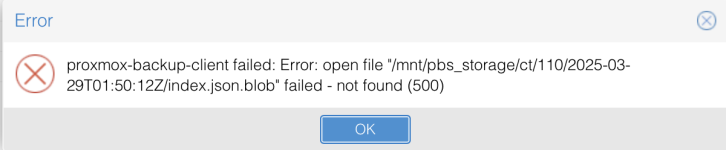

The verification job I run also failed, as expected:

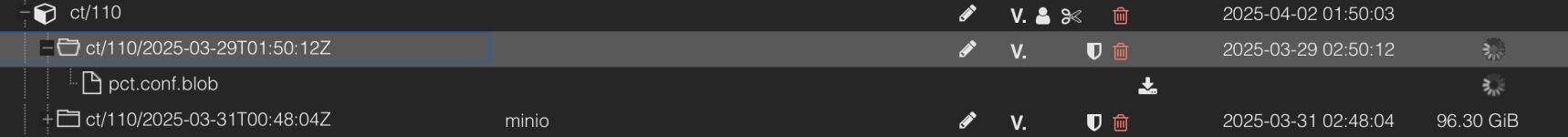


```
2025-04-02T00:00:00+02:00: Failed to verify the following snapshots/groups:
2025-04-02T00:00:00+02:00: ct/110/2025-03-29T01:50:12Z
```

However, the sender seems to be happy, as it appears to skip the snapshot that failed to upload:

```
2025-04-02T21:00:00+02:00: Found 4 groups to sync (out of 24 total)
2025-04-02T21:00:00+02:00: skipped: 4 snapshot(s) (2025-03-29T00:30:03Z .. 2025-04-01T23:30:02Z) - older than the newest snapshot present on sync target
2025-04-02T21:00:00+02:00: skipped: 4 snapshot(s) (2025-03-29T01:50:12Z .. 2025-04-01T23:50:03Z) - older than the newest snapshot present on sync target
2025-04-02T21:00:00+02:00: skipped: 2 snapshot(s) (2025-03-31T20:24:22Z .. 2025-04-01T23:58:59Z) - older than the newest snapshot present on sync target
2025-04-02T21:00:00+02:00: skipped: 4 snapshot(s) (2025-03-29T01:48:36Z .. 2025-04-01T23:48:47Z) - older than the newest snapshot present on sync target
2025-04-02T21:00:00+02:00: Finished syncing root namespace, current progress: 3 groups, 0 snapshots
2025-04-02T21:00:00+02:00: Summary: sync job found no new data to push
```

This is quite worrying, to be honest: an upload can fail at any point and leave the snapshot unusable on the target, without the pushing side ever knowing.
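The skip messages suggest a simple rule: a snapshot is pushed only if it is newer than the newest snapshot of the same group already on the target. Below is a minimal Python model of that rule. It is my assumption based purely on the log text, not the actual PBS implementation, and `snapshots_to_push` is a hypothetical name. It shows why the broken `ct/110/2025-03-29T01:50:12Z` snapshot would never be retried once newer snapshots of that group exist on the target:

```python
from datetime import datetime


def parse(ts: str) -> datetime:
    # Snapshot times in the log look like 2025-03-29T01:50:12Z
    return datetime.strptime(ts, "%Y-%m-%dT%H:%M:%SZ")


def snapshots_to_push(source: list[str], target: list[str]) -> list[str]:
    """Hypothetical model of the skip rule from the log: only snapshots
    newer than the newest snapshot already on the target are pushed.
    Nothing here looks at whether older target snapshots are complete."""
    if not target:
        return sorted(source, key=parse)
    newest_on_target = parse(max(target, key=parse))
    return sorted((s for s in source if parse(s) > newest_on_target), key=parse)


# The failed snapshot is the oldest in its group; a newer one already
# exists on the target, so the failed one is skipped.
print(snapshots_to_push(["2025-03-29T01:50:12Z", "2025-04-01T23:50:03Z"],
                        ["2025-04-01T23:50:03Z"]))  # []
```

Under this model, even deleting the broken snapshot on the target would not cause it to be re-sent, because the comparison only looks at the newest snapshot present there.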

Is this a bug? I would imagine you check whether the snapshot data was uploaded, but maybe not the snapshot's own metadata (`pct.conf.blob`, `index.json.blob`)?
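For illustration, this is the kind of per-snapshot check I would expect: after the upload, confirm that every file the sender uploaded, including the metadata blobs, exists and is non-empty on the target. It is a hypothetical sketch, not PBS code. `missing_snapshot_files` is a made-up name, the file name `root.pxar.didx` is an assumed example, and the placeholder bytes are not real PBS blobs:

```python
import tempfile
from pathlib import Path
from typing import Iterable


def missing_snapshot_files(snapshot_dir: Path, expected: Iterable[str]) -> list[str]:
    """Return the expected files that are absent or empty in snapshot_dir.

    Hypothetical check: 'expected' stands for the list of files the
    sender uploaded, metadata blobs included."""
    missing = []
    for name in expected:
        path = snapshot_dir / name
        if not path.is_file() or path.stat().st_size == 0:
            missing.append(name)
    return sorted(missing)


with tempfile.TemporaryDirectory() as tmp:
    snap = Path(tmp) / "ct" / "110" / "2025-03-29T01:50:12Z"
    snap.mkdir(parents=True)
    # Placeholder contents only; pct.conf.blob is deliberately absent,
    # mimicking the failed upload.
    (snap / "root.pxar.didx").write_bytes(b"\x00" * 16)
    (snap / "index.json.blob").write_bytes(b"\x00" * 16)
    result = missing_snapshot_files(
        snap, ["index.json.blob", "pct.conf.blob", "root.pxar.didx"]
    )
    print(result)  # ['pct.conf.blob']
```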
