Hi, I am new to this forum. I have been a home(-lab) user of Proxmox VE for quite a while and have now updated to Proxmox VE 8.1.

As already mentioned by several others, I am seeing higher server load with kernel 6.5.11-4 on my system (a ProLiant MicroServer Gen8).

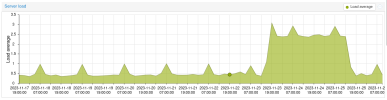

With the same VMs running and the same workload, the load goes up under this kernel and drops again when running the previous kernel 6.2.16-19 (see also screenshot).

Current (since 25-Nov-2023, ~13:30):

```
root@pve:~# pveversion
pve-manager/8.1.3/b46aac3b42da5d15 (running kernel: 6.2.16-19-pve)
```
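If anyone wants to put numbers next to the graph, here is a minimal sketch for logging the running kernel together with the load averages. It assumes plain POSIX `sh` on a Linux host that has `/proc/loadavg`. The function name `load_sample` is just a hypothetical name made up for this example:

```shell
#!/bin/sh
# Minimal sketch (hypothetical helper): print a timestamp, the running kernel
# and the 1/5/15-minute load averages on one line, so samples taken under
# 6.5.11-4 and 6.2.16-19 can be compared side by side.
load_sample() {
    # Fields 1-3 of /proc/loadavg are the 1-, 5- and 15-minute load averages;
    # the remaining fields end up in "rest" and are ignored.
    read -r load1 load5 load15 rest < /proc/loadavg
    printf '%s kernel=%s load1=%s load5=%s load15=%s\n' \
        "$(date '+%Y-%m-%d %H:%M')" "$(uname -r)" "$load1" "$load5" "$load15"
}

load_sample
```

Running it periodically (for example from cron) for a while under each kernel, with the same VMs up, gives comparable load1/load5/load15 values per kernel version.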