Hello everyone!

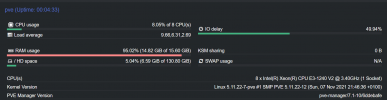

I'm running Proxmox VE on a server with the following specs: Xeon E3-1240 v2, 16GB RAM, 2x Crucial SSDs in RAID 1.

This server is definitely not the sharpest, but the specs are more than enough for my basic home server needs. I'm running just a few VMs with services like Nextcloud, Gitea, Sonatype Nexus and so on. For a long time I ran these same services on an underpowered T7800 with 6GB of RAM.

However, Proxmox has become so slow that pretty much any action I take freezes the whole system for anywhere from a few seconds to several minutes. For example, when I pull a Docker image on a VM, the download starts, then it reaches "extracting" and sits at 100% for 15 minutes while everything else goes down and starts timing out (I have an uptime tracker). Another example: I start streaming something on Jellyfin. It loads instantly, but only about 30 seconds of it; then the server freezes, I watch those 30 seconds of the movie, and the stream stops until the server comes back to life.

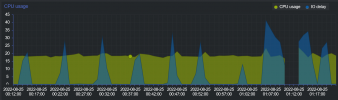

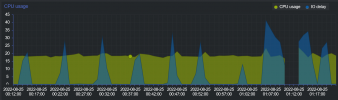

I have noticed the IO delay in Proxmox's Web UI climbing sharply, sometimes to the point where Proxmox itself stops recording it. However:

- I did not allocate more RAM or CPU cores to the VMs than the host actually has,

- I left 2GB for the system itself,

- I am using SSDs that, while not server-grade, I have tested extensively with full writes before using them on the server and their performance was more than good enough.
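A minimal sketch for putting numbers on the IO delay outside the Web UI, assuming the Proxmox graph roughly tracks the CPU iowait share that Linux exposes in `/proc/stat` (an assumption, not something confirmed here). The helper names `parse_cpu_line`, `iowait_percent` and `sample_iowait` are hypothetical; the script only reads the aggregate `cpu` line and compares two samples taken `interval` seconds apart.

```python
import time

# Assumption: Proxmox's "IO delay" graph roughly corresponds to the CPU
# iowait share computed here from /proc/stat. Helper names are hypothetical.


def parse_cpu_line(line: str) -> list[int]:
    """Parse the aggregate 'cpu' line of /proc/stat into jiffy counters.

    Only the first 8 fields are kept (user, nice, system, idle, iowait,
    irq, softirq, steal); guest time is already counted in user/nice.
    """
    fields = line.split()
    if not fields or fields[0] != "cpu":
        raise ValueError("expected the aggregate 'cpu' line")
    return [int(x) for x in fields[1:9]]


def iowait_percent(before: list[int], after: list[int]) -> float:
    """Share of CPU time spent in iowait between two samples, in percent."""
    deltas = [a - b for a, b in zip(after, before)]
    total = sum(deltas)
    if total <= 0:
        return 0.0
    return 100.0 * deltas[4] / total  # index 4 is the iowait counter


def read_cpu_counters(path: str = "/proc/stat") -> list[int]:
    """Read the first line of /proc/stat (the aggregate 'cpu' line)."""
    with open(path) as f:
        return parse_cpu_line(f.readline())


def sample_iowait(interval: float = 1.0, path: str = "/proc/stat") -> float:
    """Take two samples `interval` seconds apart and return the iowait %."""
    before = read_cpu_counters(path)
    time.sleep(interval)
    after = read_cpu_counters(path)
    return iowait_percent(before, after)
```

Calling `print(sample_iowait())` in a loop on the host while a Docker pull or a Jellyfin stream is in progress would show whether the iowait spikes line up with the freezes.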

Moreover, the disks still have plenty of free space. Thinking RAID could be the issue, I moved Nextcloud and Jellyfin to a separate SSD mounted on its own without any mirroring, and the problem didn't change. In fact, I find it very strange that this would be a disk issue: even if the external SSD were too slow, it should not affect VMs running on the internal RAID 1 SSDs. The fact that the problem affects the whole system no matter how much I try to separate the resources really bothers me, because then why am I even virtualizing them? This is especially painful because some services running in Docker get killed automatically after being unresponsive for 60s, and I often wake up to a half-dead server because my phone automatically uploaded some photos to Nextcloud overnight.

I am at a loss. I replaced one of the two SSDs, I tried moving VMs to external SSDs, and I tried changing the resource allocation. I even tried switching the disk cache mode from the default (no cache) to write back, but it made no difference. The fact that I used to run the same services on a much less powerful 2-core CPU really makes me wonder why this is happening, especially since everything was butter smooth for the first few weeks or months after I installed Proxmox on this server. It has just been getting steadily worse over time.

I even tried running a game server, and it brought the whole Proxmox server down, including the host itself.

What could the issue be? Does anyone have any clue? If there are things that I can run and test I'll be more than happy to try.

Thank you all very much!
