Hello,

It's impossible to restore a VM, it takes too much time, it never ends. And errors appear in the kernel logs.

it's been running for about 2 hours for a VM with a 200GB disk containing 50GB of data.

I think this problem is related to https://forum.proxmox.com/threads/kworker-99-io.51459/

Some more information: I have a RAID 1 of 2x1TB of SSD Disk.

dmesg log:

iotop:

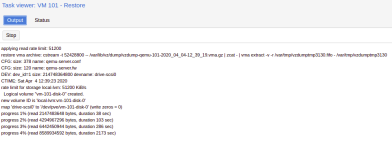

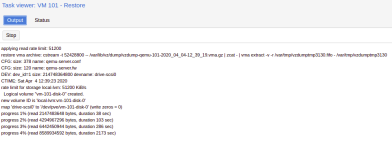

Proxmox GUI backup restore log:

pveversion -v:

proxmox-ve: 6.1-2 (running kernel: 5.3.18-3-pve)

pve-manager: 6.1-8 (running version: 6.1-8/806edfe1)

pve-kernel-helper: 6.1-7

pve-kernel-5.3: 6.1-6

pve-kernel-5.3.18-3-pve: 5.3.18-3

pve-kernel-5.3.10-1-pve: 5.3.10-1

ceph-fuse: 12.2.11+dfsg1-2.1+b1

corosync: 3.0.3-pve1

criu: 3.11-3

glusterfs-client: 5.5-3

ifupdown: residual config

ifupdown2: 2.0.1-1+pve8

ksm-control-daemon: 1.3-1

libjs-extjs: 6.0.1-10

libknet1: 1.15-pve1

libpve-access-control: 6.0-6

libpve-apiclient-perl: 3.0-3

libpve-common-perl: 6.0-17

libpve-guest-common-perl: 3.0-5

libpve-http-server-perl: 3.0-5

libpve-storage-perl: 6.1-5

libqb0: 1.0.5-1

libspice-server1: 0.14.2-4~pve6+1

lvm2: 2.03.02-pve4

lxc-pve: 3.2.1-1

lxcfs: 4.0.1-pve1

novnc-pve: 1.1.0-1

proxmox-mini-journalreader: 1.1-1

proxmox-widget-toolkit: 2.1-3

pve-cluster: 6.1-4

pve-container: 3.0-23

pve-docs: 6.1-6

pve-edk2-firmware: 2.20200229-1

pve-firewall: 4.0-10

pve-firmware: 3.0-6

pve-ha-manager: 3.0-9

pve-i18n: 2.0-4

pve-qemu-kvm: 4.1.1-4

pve-xtermjs: 4.3.0-1

qemu-server: 6.1-7

smartmontools: 7.1-pve2

spiceterm: 3.1-1

vncterm: 1.6-1

zfsutils-linux: 0.8.3-pve1

It's impossible to restore a VM, it takes too much time, it never ends. And errors appear in the kernel logs.

it's been running for about 2 hours for a VM with a 200GB disk containing 50GB of data.

I think this problem is related to https://forum.proxmox.com/threads/kworker-99-io.51459/

Some more information: I have a RAID 1 of 2x1TB of SSD Disk.

dmesg log:

Code:

[ 1089.095626] INFO: task kvm:1882 blocked for more than 483 seconds.

[ 1089.095861] Tainted: P IO 5.3.18-3-pve #1

[ 1089.096024] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.

[ 1089.096246] kvm D 0 1882 1 0x00004000

[ 1089.096248] Call Trace:

[ 1089.096257] __schedule+0x2bb/0x660

[ 1089.096259] schedule+0x33/0xa0

[ 1089.096261] schedule_timeout+0x205/0x300

[ 1089.096265] ? dm_make_request+0x56/0xb0

[ 1089.096266] io_schedule_timeout+0x1e/0x50

[ 1089.096268] wait_for_completion_io+0xb7/0x140

[ 1089.096271] ? wake_up_q+0x80/0x80

[ 1089.096276] submit_bio_wait+0x61/0x90

[ 1089.096279] blkdev_issue_flush+0x8e/0xc0

[ 1089.096282] blkdev_fsync+0x35/0x50

[ 1089.096285] vfs_fsync_range+0x48/0x80

[ 1089.096288] ? __fget_light+0x59/0x70

[ 1089.096289] do_fsync+0x3d/0x70

[ 1089.096290] __x64_sys_fdatasync+0x17/0x20

[ 1089.096293] do_syscall_64+0x5a/0x130

[ 1089.096296] entry_SYSCALL_64_after_hwframe+0x44/0xa9

[ 1089.096298] RIP: 0033:0x7fb7d028b2e7

[ 1089.096303] Code: Bad RIP value.

[ 1089.096304] RSP: 002b:00007fb6bd5fa780 EFLAGS: 00000293 ORIG_RAX: 000000000000004b

[ 1089.096306] RAX: ffffffffffffffda RBX: 0000000000000015 RCX: 00007fb7d028b2e7

[ 1089.096307] RDX: 0000000000000000 RSI: 0000000000000000 RDI: 0000000000000015

[ 1089.096308] RBP: 00007fb7c366caf0 R08: 0000000000000000 R09: 00000000ffffffff

[ 1089.096308] R10: 00007fb6bd5fa760 R11: 0000000000000293 R12: 0000556312351612

[ 1089.096309] R13: 00007fb7c366cb58 R14: 00007fb7c3727930 R15: 00007fb6bfa84c90

[ 1089.096313] INFO: task kvm:3561 blocked for more than 483 seconds.

[ 1089.096490] Tainted: P IO 5.3.18-3-pve #1

[ 1089.096652] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.

[ 1089.096915] kvm D 0 3561 1 0x00000000

[ 1089.096917] Call Trace:

[ 1089.096919] __schedule+0x2bb/0x660

[ 1089.096921] schedule+0x33/0xa0

[ 1089.096922] schedule_timeout+0x205/0x300

[ 1089.096924] ? dm_make_request+0x56/0xb0

[ 1089.096926] io_schedule_timeout+0x1e/0x50

[ 1089.096928] wait_for_completion_io+0xb7/0x140

[ 1089.096929] ? wake_up_q+0x80/0x80

[ 1089.096931] submit_bio_wait+0x61/0x90

[ 1089.096933] blkdev_issue_flush+0x8e/0xc0

[ 1089.096935] blkdev_fsync+0x35/0x50

[ 1089.096936] vfs_fsync_range+0x48/0x80

[ 1089.096938] ? __fget_light+0x59/0x70

[ 1089.096939] do_fsync+0x3d/0x70

[ 1089.096940] __x64_sys_fdatasync+0x17/0x20

[ 1089.096942] do_syscall_64+0x5a/0x130

[ 1089.096944] entry_SYSCALL_64_after_hwframe+0x44/0xa9

[ 1089.096945] RIP: 0033:0x7f70182e32e7

[ 1089.096947] Code: Bad RIP value.

[ 1089.096948] RSP: 002b:00007f6f055fa780 EFLAGS: 00000293 ORIG_RAX: 000000000000004b

[ 1089.096949] RAX: ffffffffffffffda RBX: 0000000000000015 RCX: 00007f70182e32e7

[ 1089.096950] RDX: 0000000000000000 RSI: 0000000000000000 RDI: 0000000000000015

[ 1089.096951] RBP: 00007f700b66caf0 R08: 0000000000000000 R09: 00007f6f055fa730

[ 1089.096952] R10: 000000005e8b0c9e R11: 0000000000000293 R12: 0000564fd06b8612

[ 1089.096953] R13: 00007f700b66cb58 R14: 00007f700b727850 R15: 00007f6f07a84c90

[ 1209.927947] INFO: task kvm:1882 blocked for more than 604 seconds.

[ 1209.928139] Tainted: P IO 5.3.18-3-pve #1

[ 1209.928301] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.

[ 1209.928523] kvm D 0 1882 1 0x00004000

[ 1209.928525] Call Trace:

[ 1209.928534] __schedule+0x2bb/0x660

[ 1209.928536] schedule+0x33/0xa0

[ 1209.928538] schedule_timeout+0x205/0x300

[ 1209.928542] ? dm_make_request+0x56/0xb0

[ 1209.928544] io_schedule_timeout+0x1e/0x50

[ 1209.928546] wait_for_completion_io+0xb7/0x140

[ 1209.928549] ? wake_up_q+0x80/0x80

[ 1209.928553] submit_bio_wait+0x61/0x90

[ 1209.928557] blkdev_issue_flush+0x8e/0xc0

[ 1209.928560] blkdev_fsync+0x35/0x50

[ 1209.928563] vfs_fsync_range+0x48/0x80

[ 1209.928566] ? __fget_light+0x59/0x70

[ 1209.928567] do_fsync+0x3d/0x70

[ 1209.928569] __x64_sys_fdatasync+0x17/0x20

[ 1209.928572] do_syscall_64+0x5a/0x130

[ 1209.928574] entry_SYSCALL_64_after_hwframe+0x44/0xa9

[ 1209.928577] RIP: 0033:0x7fb7d028b2e7

[ 1209.928582] Code: Bad RIP value.

[ 1209.928583] RSP: 002b:00007fb6bd5fa780 EFLAGS: 00000293 ORIG_RAX: 000000000000004b

[ 1209.928584] RAX: ffffffffffffffda RBX: 0000000000000015 RCX: 00007fb7d028b2e7

[ 1209.928585] RDX: 0000000000000000 RSI: 0000000000000000 RDI: 0000000000000015

[ 1209.928586] RBP: 00007fb7c366caf0 R08: 0000000000000000 R09: 00000000ffffffff

[ 1209.928587] R10: 00007fb6bd5fa760 R11: 0000000000000293 R12: 0000556312351612

[ 1209.928588] R13: 00007fb7c366cb58 R14: 00007fb7c3727930 R15: 00007fb6bfa84c90

[ 1209.928591] INFO: task kvm:3561 blocked for more than 604 seconds.

[ 1209.928810] Tainted: P IO 5.3.18-3-pve #1

[ 1209.928972] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.

[ 1209.929193] kvm D 0 3561 1 0x00000000

[ 1209.929195] Call Trace:

[ 1209.929197] __schedule+0x2bb/0x660

[ 1209.929199] schedule+0x33/0xa0

[ 1209.929200] schedule_timeout+0x205/0x300

[ 1209.929202] ? dm_make_request+0x56/0xb0

[ 1209.929204] io_schedule_timeout+0x1e/0x50

[ 1209.929206] wait_for_completion_io+0xb7/0x140

[ 1209.929207] ? wake_up_q+0x80/0x80

[ 1209.929209] submit_bio_wait+0x61/0x90

[ 1209.929211] blkdev_issue_flush+0x8e/0xc0

[ 1209.929213] blkdev_fsync+0x35/0x50

[ 1209.929214] vfs_fsync_range+0x48/0x80

[ 1209.929215] ? __fget_light+0x59/0x70

[ 1209.929217] do_fsync+0x3d/0x70

[ 1209.929218] __x64_sys_fdatasync+0x17/0x20

[ 1209.929220] do_syscall_64+0x5a/0x130

[ 1209.929222] entry_SYSCALL_64_after_hwframe+0x44/0xa9

[ 1209.929223] RIP: 0033:0x7f70182e32e7

[ 1209.929225] Code: Bad RIP value.

[ 1209.929226] RSP: 002b:00007f6f055fa780 EFLAGS: 00000293 ORIG_RAX: 000000000000004b

[ 1209.929227] RAX: ffffffffffffffda RBX: 0000000000000015 RCX: 00007f70182e32e7

[ 1209.929228] RDX: 0000000000000000 RSI: 0000000000000000 RDI: 0000000000000015

[ 1209.929229] RBP: 00007f700b66caf0 R08: 0000000000000000 R09: 00007f6f055fa730

[ 1209.929230] R10: 000000005e8b0c9e R11: 0000000000000293 R12: 0000564fd06b8612

[ 1209.929231] R13: 00007f700b66cb58 R14: 00007f700b727850 R15: 00007f6f07a84c90iotop:

Proxmox GUI backup restore log:

pveversion -v:

proxmox-ve: 6.1-2 (running kernel: 5.3.18-3-pve)

pve-manager: 6.1-8 (running version: 6.1-8/806edfe1)

pve-kernel-helper: 6.1-7

pve-kernel-5.3: 6.1-6

pve-kernel-5.3.18-3-pve: 5.3.18-3

pve-kernel-5.3.10-1-pve: 5.3.10-1

ceph-fuse: 12.2.11+dfsg1-2.1+b1

corosync: 3.0.3-pve1

criu: 3.11-3

glusterfs-client: 5.5-3

ifupdown: residual config

ifupdown2: 2.0.1-1+pve8

ksm-control-daemon: 1.3-1

libjs-extjs: 6.0.1-10

libknet1: 1.15-pve1

libpve-access-control: 6.0-6

libpve-apiclient-perl: 3.0-3

libpve-common-perl: 6.0-17

libpve-guest-common-perl: 3.0-5

libpve-http-server-perl: 3.0-5

libpve-storage-perl: 6.1-5

libqb0: 1.0.5-1

libspice-server1: 0.14.2-4~pve6+1

lvm2: 2.03.02-pve4

lxc-pve: 3.2.1-1

lxcfs: 4.0.1-pve1

novnc-pve: 1.1.0-1

proxmox-mini-journalreader: 1.1-1

proxmox-widget-toolkit: 2.1-3

pve-cluster: 6.1-4

pve-container: 3.0-23

pve-docs: 6.1-6

pve-edk2-firmware: 2.20200229-1

pve-firewall: 4.0-10

pve-firmware: 3.0-6

pve-ha-manager: 3.0-9

pve-i18n: 2.0-4

pve-qemu-kvm: 4.1.1-4

pve-xtermjs: 4.3.0-1

qemu-server: 6.1-7

smartmontools: 7.1-pve2

spiceterm: 3.1-1

vncterm: 1.6-1

zfsutils-linux: 0.8.3-pve1