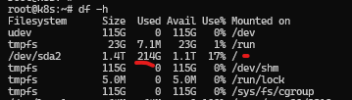

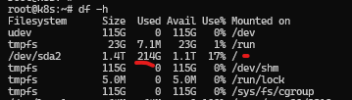

Hi everyone, I have a problem with, and a question about, local-lvm on my Proxmox machine. The machine hosts only one VM (ID 100), and I allocated a 1.5TB disk to that VM.

The VM currently uses 215GB of its disk and has 2 snapshots (not including RAM).

My question is: why does local-lvm in Proxmox show 1.27TB used out of 1.37TB (92.11%)? The VM uses only 215GB, and even with the 2 snapshots I would expect at most 215GB (VM) + 215GB (snapshot) + 215GB (snapshot) = 645GB.

Instead, local-lvm sits at 1.27TB of 1.37TB (92.11%).

I would appreciate your help, thank you.

Screenshots and disk information from inside VM100 (shell):

=================================================================================

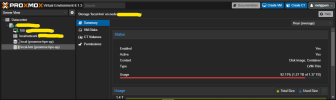

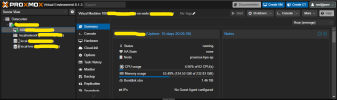

Screenshots and disk information from the Proxmox host (shell and GUI):

===============================================================

root@xxx:~# lvdisplay

--- Logical volume ---

LV Name data

VG Name pve

LV UUID eyWK1K-EOpu-Zf83-ezqV-esW9-Rviz-MUSoTS

LV Write Access read/write (activated read only)

LV Creation host, time proxmox, 2024-02-12 11:59:05 +0700

LV Pool metadata data_tmeta

LV Pool data data_tdata

LV Status available

# open 0

LV Size <1.25 TiB

Allocated pool data 92.11%

Allocated metadata 2.70%

Current LE 327617

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 256

Block device 252:5

--- Logical volume ---

LV Path /dev/pve/swap

LV Name swap

VG Name pve

LV UUID jX1eBe-epHv-b0B4-m2u2-u6eL-KRjG-pXDVuS

LV Write Access read/write

LV Creation host, time proxmox, 2024-02-12 11:58:49 +0700

LV Status available

# open 2

LV Size 8.00 GiB

Current LE 2048

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 256

Block device 252:0

--- Logical volume ---

LV Path /dev/pve/root

LV Name root

VG Name pve

LV UUID af7NHe-UTKH-zUi2-P138-4HzG-RNfI-fx2TwV

LV Write Access read/write

LV Creation host, time proxmox, 2024-02-12 11:58:49 +0700

LV Status available

# open 1

LV Size 425.62 GiB

Current LE 108959

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 256

Block device 252:1

--- Logical volume ---

LV Path /dev/pve/snap_vm-100-disk-0_OS_Ubuntu_ready_for_xxxxxxx

LV Name snap_vm-100-disk-0_OS_Ubuntu_ready_for_xxxxxxxx

VG Name pve

LV UUID eiOw7k-4Z0p-9omf-qf1t-AjNg-4rBM-ZQsgND

LV Write Access read only

LV Creation host, time proxmox-hpe-ap, 2024-02-25 22:38:46 +0700

LV Pool name data

LV Status NOT available

LV Size 1.36 TiB

Current LE 357628

Segments 1

Allocation inherit

Read ahead sectors auto

--- Logical volume ---

LV Path /dev/pve/vm-100-disk-0

LV Name vm-100-disk-0

VG Name pve

LV UUID 6Ddri7-DSat-8Mxy-5SeA-V1pj-NYs7-KYHIYi

LV Write Access read/write

LV Creation host, time proxmox-hpe-ap, 2024-04-22 02:23:31 +0700

LV Pool name data

LV Status available

# open 1

LV Size 1.47 TiB

Mapped size 56.37%

Current LE 385788

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 256

Block device 252:6

--- Logical volume ---

LV Path /dev/pve/snap_vm-100-disk-0_Check1

LV Name snap_vm-100-disk-0_Check1

VG Name pve

LV UUID PEl5qB-p40m-Vn2c-9nIE-DPFZ-al3B-VDhi5J

LV Write Access read only

LV Creation host, time proxmox-hpe-ap, 2024-06-19 12:42:46 +0700

LV Pool name data

LV Thin origin name vm-100-disk-0

LV Status NOT available

LV Size 1.46 TiB

Current LE 383228

Segments 1

Allocation inherit

Read ahead sectors auto

============================================================

root@xxx:~# pvdisplay

--- Physical volume ---

PV Name /dev/sda3

VG Name pve

PV Size 1.74 TiB / not usable 2.98 MiB

Allocatable yes

PE Size 4.00 MiB

Total PE 457343

Free PE 12033

Allocated PE 445310

PV UUID XH6K9q-OdnV-JO0p-Q4aJ-yDrQ-8UGT-edmyLS

==========================================================
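To sanity-check the figures, here is a minimal Python sketch that converts the extent counts and percentages from the `lvdisplay` and `pvdisplay` output above into bytes. It only does arithmetic on the pasted numbers. The helper names (`le_to_bytes`, `tb`) are made up for illustration, and it assumes that "Current LE" counts 4 MiB extents, as the "PE Size" line suggests.

```python
# All figures are copied from the lvdisplay / pvdisplay output in this post.
PE_SIZE_MIB = 4          # "PE Size 4.00 MiB"
MIB = 1024 ** 2


def le_to_bytes(extents: int) -> int:
    """Convert a logical-extent count to bytes (assumes 4 MiB extents)."""
    return extents * PE_SIZE_MIB * MIB


def tb(n: float) -> float:
    """Bytes to decimal terabytes, the unit the Proxmox GUI figure uses."""
    return n / 1e12


pool_bytes = le_to_bytes(327617)         # thin pool "data": Current LE
pool_used = pool_bytes * 92.11 / 100     # "Allocated pool data 92.11%"

disk_bytes = le_to_bytes(385788)         # vm-100-disk-0: Current LE
disk_mapped = disk_bytes * 56.37 / 100   # "Mapped size 56.37%"

# Pool usage not accounted for by the blocks mapped to the live disk.
other_mapped = pool_used - disk_mapped

print(f"pool size            : {tb(pool_bytes):.2f} TB")
print(f"pool used            : {tb(pool_used):.2f} TB")
print(f"vm-100-disk-0 size   : {tb(disk_bytes):.2f} TB")
print(f"vm-100-disk-0 mapped : {disk_mapped / 1e9:.0f} GB")
print(f"used beyond live disk: {other_mapped / 1e9:.0f} GB")
```

The first two values reproduce the 1.27TB of 1.37TB shown in the GUI. The mapped size of `vm-100-disk-0` alone comes out far larger than the 215GB the filesystem inside the VM reports, so the pool figure appears to count every block the thin volume has ever mapped rather than what is currently in use inside the guest. The remainder would presumably be blocks held only by the two snapshot volumes, assuming nothing else lives in the pool.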
