So I was using my server three weeks ago (to update the network settings because I moved) and everything worked great. During that time, I restarted it multiple times and also shut it down and cold booted it multiple times.

Turned it on today, and it hangs at the Proxmox loading page:

I do have GPU passthrough set up for a VM, and that VM starts automatically at boot, so I assume this is the problem. Here is what I tried:

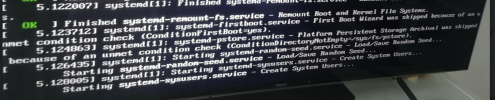

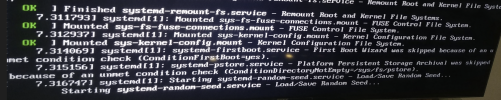

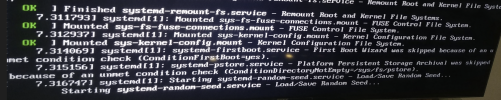

1. In GRUB, I removed quiet and amd_iommu=on from the kernel command line. It loads further but hangs here:

2. I tried disabling SVM and IOMMU in the BIOS, and it hangs at the initial screenshot above.

3. I tried removing the GPU entirely, and I still cannot access the server via the web GUI, so I assume it still does not work.

Ethernet light is green and seems to be connected OK.

Any advice would be appreciated.

Thanks.
