Hello Proxmoxer,

I created a tool to automatically set the CPU affinity (at runtime) for VMs.

https://github.com/egandro/proxmox-cpu-affinity

It picks the best combination of cores, based on core-to-core latency as described by this project: https://github.com/nviennot/core-to-core-latency.

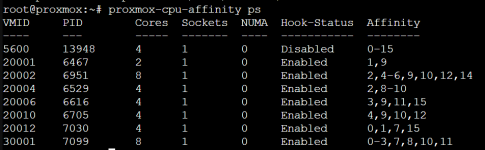

Here is an example.

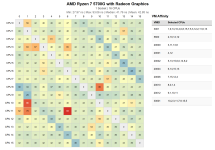

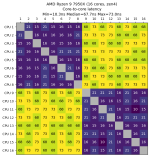

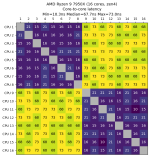

CPU 1 loves CPU 2

CPU 16 hates CPU 2 - it's about 3.5x slower to pick this affinity (on average)

If you have 128 cores (with multiple dies on a chip) or even a dual-socket machine, the results are even more dramatic.

(Fun fact: every CPU has a "buddy" with the lowest latency. That is most likely its HT twin.)
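To make the "buddy" idea concrete, here is a minimal Python sketch. The latency matrix is made up for illustration (it is not a measurement from any real CPU), and `find_buddies` is a hypothetical helper, not code from proxmox-cpu-affinity.

```python
# Hypothetical core-to-core latencies in nanoseconds (made-up numbers).
# LATENCY_NS[a][b] is the latency between core a and core b.
LATENCY_NS = [
    [0, 20, 75, 80],
    [20, 0, 78, 76],
    [75, 78, 0, 22],
    [80, 76, 22, 0],
]


def find_buddies(latency):
    """Return {core: buddy}, where buddy is the other core with the lowest latency."""
    buddies = {}
    for core, row in enumerate(latency):
        others = [c for c in range(len(row)) if c != core]
        buddies[core] = min(others, key=lambda c: row[c])
    return buddies


if __name__ == "__main__":
    for core, buddy in find_buddies(LATENCY_NS).items():
        print(f"core {core} loves core {buddy}")
```

With this toy matrix, cores 0 and 1 pair up and cores 2 and 3 pair up, which is the pattern you would expect from HT twins.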

If you have 10 VMs with 8 cores each (2 sockets x 4 cores or 1 socket x 4 cores ...), it would be awesome to set the affinity.

Proxmox supports affinity - however, it is not calculated for your specific CPU.

Wouldn't it be nice if something could automatically pick:

- CPU1 with 3, 5, 6 ...

- CPU2 with 3, 4, 5 ...

- ...

- CPU9 with 10, 11, 12, ...

That is where proxmox-cpu-affinity kicks in.
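As a rough illustration of what "picking cores that like each other" can mean, here is a greedy Python sketch. It is only one possible approach under assumed inputs: the matrix is made up, `best_group` is a hypothetical name, and this is not claimed to be the algorithm proxmox-cpu-affinity actually uses.

```python
# Hypothetical core-to-core latencies in nanoseconds (made-up numbers).
LATENCY_NS = [
    [0, 20, 75, 80],
    [20, 0, 78, 76],
    [75, 78, 0, 22],
    [80, 76, 22, 0],
]


def best_group(latency, seed, size):
    """Greedy pick: start at `seed`, then keep adding the core whose
    summed latency to the cores already in the group is lowest."""
    group = [seed]
    while len(group) < size:
        candidates = [c for c in range(len(latency)) if c not in group]
        group.append(min(candidates, key=lambda c: sum(latency[c][g] for g in group)))
    return sorted(group)


if __name__ == "__main__":
    print(best_group(LATENCY_NS, seed=0, size=2))
    print(best_group(LATENCY_NS, seed=2, size=3))
```

A greedy pick like this is cheap but not guaranteed to find the globally best group; on a big multi-die box an exhaustive or smarter search may give better combinations.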

It has a service that scans your CPU at start time and works out the best core combinations. (It can even listen for CPU changes, e.g. if you have data center hardware with CPU hot-swap.)

You have to install a hookscript on your VM. In the onStart event, the VM knocks at the service. The service can then detect the PID and, via a round-robin algorithm, assign optimal low-latency cores to the VMs.
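The round-robin step could look roughly like the Python sketch below. The core groups, the `make_assigner` name and the `apply` switch are assumptions for illustration; the real service may work differently. `os.sched_setaffinity` is a real call, but it is Linux-only and only affects the single thread whose ID you pass, so a real tool would also have to cover the other threads of the VM process (what `taskset -a` does).

```python
import itertools
import os


def make_assigner(core_groups):
    """Return a function that hands out the given core groups in round-robin order."""
    cycle = itertools.cycle(core_groups)

    def assign(pid, apply=True):
        cores = set(next(cycle))
        if apply:
            # Linux only; needs permission to change the affinity of `pid`.
            os.sched_setaffinity(pid, cores)
        return cores

    return assign


if __name__ == "__main__":
    # Hypothetical low-latency groups, e.g. produced by an earlier scan.
    assign = make_assigner([[0, 1], [2, 3]])
    # apply=False only reports the choice instead of pinning anything.
    for fake_pid in (1001, 1002, 1003):
        print(fake_pid, assign(fake_pid, apply=False))
```

Each VM that knocks gets the next group, and after the last group the assignment wraps around to the first one again.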

There is a CLI too, which helps you attach / detach the hookscript.

Hints:

- This is not a scheduler - that is what your kernel does.

- This is not a load balancer - that is also done by your kernel.

- CPU affinity is not a dictatorship, it's a wish from the user to the kernel. The kernel will try to pin a process or thread to these CPUs.

- On purpose, the hook is not installed on VM templates or on VMs that are part of HA (Proxmox config limitations would require the hookscript to be available on the same storage on all HA machines).

I have no idea how I can measure a performance gain! I think pinning processes / threads to cores with optimal latency outsmarts the kernel. Otherwise taskset(1) would not exist in the first place.

If you have access to systems with > 128 or > 512 cores, please ping me. I am interested in making this fly (or in adding a tool to gather some data).

PVE Hook Scripts: https://pve.proxmox.com/pve-docs/pve-admin-guide.html#_hookscripts

PRs + Bug Reports are welcome.

Happy xmas!
