Need a bit of help/advice/tips with the scenario below. We've read the documentation thoroughly but feel like we're a bit lost in a black hole with all the info.

We're currently learning and trialling Proxmox after, well... VMware.

Our aim is to set up a 3-node cluster that connects to a Fortinet, which will handle all the networking side of things (VLANs, DHCP, 2x WANs, etc.).

Now, we're a bit new to the Proxmox networking side of things. We all have a fairly good understanding of on-prem single-server hosting with Proxmox, but not of clustering and advanced networking yet.

We would like to have failover in case the primary link goes down, and I saw that one can add multiple links during the initial cluster config, with the lowest link number having the highest priority. All 3 servers are identical.

What is the best setup here? Bridge vs bond? Linux vs OVS?

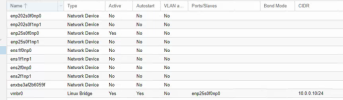

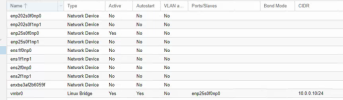

See the attached screenshots of the ports on one server, before setup and cluster join. All 3 nodes are identical so far in this regard.

Quick overview of the intended setup.

VLAN trunks via 10Gbps ports (server ports 1 and 2) - 2 per server. LAG/bond etc.?

IPMI Management 1 port per server

Corosync via 20Gbps ports - 2 ports per server (Serv1 P1 to Serv2 P1, Serv1 P2 to Serv3 P1, Serv2 P2 to Serv3 P2)

Ceph via 25Gbps ports - 4 ports per server. Same concept.
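
To make the direct-cabling idea concrete, here is a rough Python sketch of how we picture the port assignment. It is only an illustration: `mesh_cabling` and the node names are our own hypothetical helpers, not anything provided by Proxmox, and it assumes every pair of servers is cabled directly (full mesh, no switch) with ports handed out in order.

```python
from itertools import combinations


def mesh_cabling(nodes, ports_per_node):
    """Assign ports for a full mesh where every pair of nodes is cabled directly.

    Returns a list of (node_a, port_a, node_b, port_b) tuples.
    Ports are numbered from 1 and handed out in order on each node.
    """
    peers = len(nodes) - 1
    if peers == 0 or ports_per_node % peers != 0:
        raise ValueError("ports_per_node must be a multiple of the peer count")
    links_per_pair = ports_per_node // peers

    next_port = {node: 1 for node in nodes}
    cables = []
    for a, b in combinations(nodes, 2):
        for _ in range(links_per_pair):
            cables.append((a, next_port[a], b, next_port[b]))
            next_port[a] += 1
            next_port[b] += 1
    return cables


servers = ["Serv1", "Serv2", "Serv3"]

# Corosync: 2 ports per server, so 1 cable per pair of servers
for a, pa, b, pb in mesh_cabling(servers, 2):
    print(f"Corosync: {a} P{pa} to {b} P{pb}")

# Ceph: 4 ports per server, so 2 cables per pair of servers
for a, pa, b, pb in mesh_cabling(servers, 4):
    print(f"Ceph: {a} P{pa} to {b} P{pb}")
```

With 2 ports per server this reproduces the Corosync cabling listed above. With 4 ports per server it gives two cables between each pair of servers, which is what we mean by "same concept" for Ceph.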

Fortinet

2x WAN (different ISPs for redundancy)

3 ports bridged for the VLAN-trunked server connections

3 Ports bridged for IPMI management.
