Hello everyone,

I am running a Proxmox Ceph cluster with three nodes configured as follows:

I am unsure whether these changes will actually bring noticeable improvements:

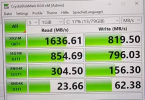

Performance is currently this:

I am hoping for a performance increase with KRBD as I have already read in some posts like this one e.g. Rocket Fly

My question is:

Can I shut down a Proxmox Ceph cluster completely without hesitation?

In my tests with the new cluster, this has worked without any problems so far. I stopped all VMs, shut down the nodes cleanly one after the other and then restarted them in the same order - without any noticeable problems.

Expectations & conclusion

As I would like to take this cluster into a productive environment, I would appreciate your feedback on my optimizations and any concerns. I am particularly interested in opinions from experienced users - and of course input from a Proxmox employee would also be very welcome.

Many thanks in advance!

I am running a Proxmox Ceph cluster with three nodes configured as follows:

- Ceph: 2×10G SFP+ (Active/Backup)

- VMs: 2×10G copper (Active/Backup)

- Corosync: 2×1Gbit copper (dedicated ports)

- WebUI: 2×1Gbit copper (active/backup) → These ports were still free, so I use them for the WebUI with redundancy.

Optimization of my Ceph cluster

I have already read some posts in the forum and considered the following points for optimization:- Enable KRBD on the Ceph storage (requires cold reboot of VMs) - I don't currently use this

- VMs: Use SCSI + virtio-scsi-single - I already use this

- Enable write-back cache, set SSD option, enable discard, use IO thread - I already use this

- Change Async IO from default (io_uring) to threads - I don't use this at the moment

I am unsure whether these changes will actually bring noticeable improvements:
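For comparing the variants, the per-disk options from the list above can be applied from the CLI with `qm set`. Below is a minimal Python sketch that only builds the command. The VM ID 100, the RBD storage name `ceph-vm` and the volume name are hypothetical placeholders. `aio=threads` is the change still under consideration, and whether it helps on this cluster can really only be answered by benchmarking before and after.

```python
import shlex
from typing import Dict, List

# Per-disk options from the optimization list. "aio" is the one still under
# consideration (the Proxmox default is io_uring); the others are already in use.
DISK_OPTIONS: Dict[str, str] = {
    "cache": "writeback",
    "discard": "on",
    "iothread": "1",  # on SCSI disks this needs the virtio-scsi-single controller
    "ssd": "1",
    "aio": "threads",
}


def qm_set_disk(vmid: int, volume: str, options: Dict[str, str], bus: str = "scsi0") -> List[str]:
    """Build a `qm set` argv that selects virtio-scsi-single and re-applies
    the given options to one existing disk volume."""
    opts = ",".join(f"{key}={value}" for key, value in options.items())
    return ["qm", "set", str(vmid), "--scsihw", "virtio-scsi-single", f"--{bus}", f"{volume},{opts}"]


# Hypothetical VM 100 with its first disk on a hypothetical RBD storage "ceph-vm".
cmd = qm_set_disk(100, "ceph-vm:vm-100-disk-0", DISK_OPTIONS)
print(shlex.join(cmd))
```

On a running VM, such changes are typically recorded as pending and only take effect after the VM is fully stopped and started again.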

Question about stability during a complete shutdown

In an earlier test cluster, I had problems because Ceph could no longer heal itself. I received error messages like:

libceph: mon1 (1)10.12.12.102:6789 socket closed (con state V1_BANNER)

libceph: mon1 (1)10.12.12.102:6789 socket closed (con state V1_BANNER)

libceph: mon1 (1)10.12.12.102:6789 socket closed (con state V1_BANNER)

libceph: mon0 (1)10.12.12.101:6789 socket closed (con state OPEN)

libceph: mon2 (1)10.12.12.103:6789 socket closed (con state OPEN)

ceph: No mds Server is up or the cluster is laggy

In the end, I reinstalled the entire Proxmox cluster. To be fair, the switches had a firmware problem at the time: they kept restarting on their own because of an RSTP bug, which is now fixed in the pre-release firmware I am running. I had also removed and deleted a disk via Ceph as a test, and I think this was probably the actual cause.

Performance is currently this:

I am hoping that KRBD will improve performance, as described in some forum posts (e.g. Rocket Fly).
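Enabling KRBD is a setting on the RBD storage, not on the individual VM. The sketch below assumes a hypothetical storage named `ceph-vm` and hypothetical VM IDs 100 and 101. It builds the commands: set `krbd` on the storage with `pvesm set`, then fully shut down and start each VM (the cold reboot mentioned in the optimization list) so that its disks are mapped through the kernel RBD client at the next start.

```python
import shlex
from typing import List


def enable_krbd_commands(storage: str, vmids: List[int]) -> List[List[str]]:
    """Build the commands to enable KRBD on an RBD storage and then
    cold-restart the given VMs so the new setting is used."""
    commands = [["pvesm", "set", storage, "--krbd", "1"]]
    for vmid in vmids:
        # Full shutdown followed by a fresh start (a "cold reboot").
        commands.append(["qm", "shutdown", str(vmid)])
        commands.append(["qm", "start", str(vmid)])
    return commands


# Hypothetical storage and VM IDs; only prints the commands.
for command in enable_krbd_commands("ceph-vm", [100, 101]):
    print(shlex.join(command))
```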

My question is:

Can I safely shut down a complete Proxmox Ceph cluster?

In my tests with the new cluster, this has worked so far: I stopped all VMs, shut down the nodes cleanly one after the other, and then restarted them in the same order, without any noticeable problems.
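The procedure above can be made more cautious. A commonly documented precaution for a planned full-cluster shutdown (for example in Red Hat's Ceph Storage documentation; please check it against the Proxmox documentation for your version) is to set a few OSD flags after all VMs are stopped, so that Ceph does not mark OSDs out or start recovery while the nodes go down one after another, and to clear them again once all nodes are back. This Python sketch only prints the `ceph osd set`/`unset` commands unless `dry_run=False` is passed.

```python
import subprocess
from typing import List

# OSD flags commonly set before a planned full-cluster shutdown so that Ceph
# does not mark OSDs down/out or start recovery, backfill or rebalancing while
# the nodes go offline one after another. "pause" also blocks client I/O, so
# all VMs must already be stopped.
SHUTDOWN_FLAGS = ["noout", "norecover", "norebalance", "nobackfill", "nodown", "pause"]


def flag_commands(action: str) -> List[List[str]]:
    """Build one `ceph osd set|unset <flag>` command per shutdown flag."""
    if action not in ("set", "unset"):
        raise ValueError("action must be 'set' or 'unset'")
    return [["ceph", "osd", action, flag] for flag in SHUTDOWN_FLAGS]


def run(commands: List[List[str]], dry_run: bool = True) -> None:
    """Print every command; only execute it when dry_run is False."""
    for cmd in commands:
        print(" ".join(cmd))
        if not dry_run:
            # check=True raises CalledProcessError at the first failing command.
            subprocess.run(cmd, check=True)


# 1. Stop all VMs, then (on one node) set the flags before shutting down the nodes.
run(flag_commands("set"))
# 2. Once all nodes are back online and their Ceph daemons have started, clear the flags.
run(flag_commands("unset"))
```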

Expectations & conclusion

As I would like to put this cluster into production, I would appreciate your feedback on my optimizations and any concerns you may have. I am particularly interested in opinions from experienced users, and of course input from a Proxmox employee would also be very welcome.

Many thanks in advance!
