Hello,

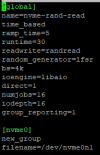

We recently got a NetApp AFF-A250 and want to test NVMe over TCP (NVMe/TCP) with Proxmox.

We already have NVMe/TCP working on VMware, and in a Windows environment it gives us 33k IOPS.

We got NVMe/TCP working on Proxmox by following this tutorial:

https://linbit.com/blog/configuring-highly-available-nvme-of-attached-storage-in-proxmox-ve/

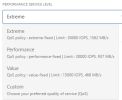

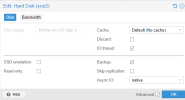

But when testing on Proxmox we only get around 16k IOPS. The network speed is 20 Gbps (tested with iperf3), so that should not be the issue.
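As a rough sanity check on the bandwidth reasoning, the sketch below converts the two IOPS figures into payload bandwidth and compares them with the 20 Gbps link. The 4 KiB block size is purely an assumption for illustration (the block size of the test is not stated here), and the helper name `iops_to_gbps` is made up for this example. Protocol overhead is ignored.

```python
def iops_to_gbps(iops: int, block_size_bytes: int) -> float:
    """Convert an IOPS figure into payload bandwidth in Gbit/s (no protocol overhead)."""
    return iops * block_size_bytes * 8 / 1e9


LINK_GBPS = 20.0   # link speed measured with iperf3
BLOCK_SIZE = 4096  # ASSUMPTION: 4 KiB I/O size, not stated in the test description

for label, iops in (("Windows environment", 33_000), ("Proxmox", 16_000)):
    gbps = iops_to_gbps(iops, BLOCK_SIZE)
    share = gbps / LINK_GBPS
    print(f"{label}: {gbps:.2f} Gbit/s payload, {share:.1%} of the link")
```

Under that assumed block size, both results use only a small fraction of the link, which fits the idea that raw bandwidth is not the limit. With a much larger block size the picture would change, so `BLOCK_SIZE` should be set to whatever the benchmark really used.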

Are there settings we need to fine-tune in Proxmox to improve this? How can I troubleshoot it?
