Hey everyone,

This is my first post here.

I'm facing a big and strange problem. A few weeks ago I installed a brand-new Proxmox VE 9 cluster (fresh install, not an upgrade), first with 3 nodes, then 4. No ZFS; it uses Ceph.

Three nodes have 128 GB of memory and four 1 TB NVMe drives each; the fourth has 192 GB of memory and two 1 TB NVMe drives.

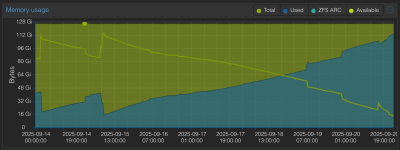

Then I discovered that on every node, memory usage grows day after day until the node runs out of memory (OOM) after 8 or 9 days.
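To put a number on "every day", here is a minimal sketch, assuming a standard Linux `/proc/meminfo`. It appends a timestamped `MemAvailable` sample to a log and computes the average loss per day between the first and last samples. The function names and the log path are hypothetical and are not part of Proxmox VE.

```shell
#!/usr/bin/env bash
# Sketch: record MemAvailable over time to measure how fast a node loses memory.

# meminfo_kib FIELD: print FIELD's value (KiB) from /proc/meminfo-style text on stdin
meminfo_kib() {
  awk -v f="$1:" '$1 == f { print $2; found = 1 } END { if (!found) exit 1 }'
}

# sample_line: print "epoch-seconds MemAvailable-KiB" from /proc/meminfo-style text on stdin
sample_line() {
  local avail
  avail=$(meminfo_kib MemAvailable) || return 1
  printf '%s %s\n' "$(date +%s)" "$avail"
}

# rate_mib_per_day: read sample lines on stdin and print the average MemAvailable
# loss in MiB per day between the first and the last sample
rate_mib_per_day() {
  awk '
    NR == 1 { t0 = $1; m0 = $2 }
    { t1 = $1; m1 = $2 }
    END {
      if (NR < 2 || t1 == t0) exit 1
      printf "%.0f\n", (m0 - m1) / 1024 / ((t1 - t0) / 86400)
    }
  '
}

# Usage (hypothetical log path), one sample per hour:
#   while true; do sample_line < /proc/meminfo >> /root/mem-samples.log; sleep 3600; done
#   rate_mib_per_day < /root/mem-samples.log
```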

I have checked everything and searched the Internet extensively, but found nothing.

Even a node that runs no VMs at all (it only has two stopped VMs) keeps consuming memory, day by day, hour by hour.

The Linux buffers are not that big.

The OSDs use 2 to 4 GB each.
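To compare the OSDs with their configured budget, here is a sketch that totals the resident memory of all `ceph-osd` processes. It assumes the procps `ps` shipped with Debian, and the helper name is hypothetical. As far as I know, each OSD sizes its caches according to the Ceph option `osd_memory_target` (4 GiB by default), so 2 to 4 GB per OSD would be within the expected range.

```shell
#!/usr/bin/env bash
# Sketch: total resident memory of all ceph-osd daemons, to compare with
# osd_memory_target multiplied by the number of OSDs on the node.

# sum_rss_gib: read RSS values in KiB (one per line) on stdin,
# print the number of values and their total in GiB
sum_rss_gib() {
  awk '{ n++; s += $1 } END { printf "%d %.2f\n", n, s / 1048576 }'
}

# Usage on a node (ps -C selects processes by command name; "rss=" drops the header):
#   ps -C ceph-osd -o rss= | sum_rss_gib
#   ceph config get osd osd_memory_target   # per-OSD target, in bytes
```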

The only way to get the memory back is to reboot the node.

When I add up the memory used by the processes plus the Linux buffers and cache, the total is very far from what `free` reports: a large amount of memory is simply unaccounted for.
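The comparison described above can be scripted. This is a minimal sketch, assuming Linux `/proc/meminfo` and procps `ps`; all function names are hypothetical. It approximates used memory as `MemTotal` minus `MemAvailable` (`free` may compute "used" slightly differently). It also prints kernel-side counters such as `Slab` and `SUnreclaim`, which are not part of any process's RSS. Summing RSS counts shared pages (for example among the pveproxy and pvedaemon workers) once per process, so the RSS total overestimates process memory.

```shell
#!/usr/bin/env bash
# Sketch: compare approximate "used" memory with the summed RSS of all processes,
# and list kernel-side counters from /proc/meminfo that no process RSS includes.

# used_kib: MemTotal - MemAvailable (KiB) from /proc/meminfo-style text on stdin
used_kib() {
  awk '
    $1 == "MemTotal:"     { t = $2 }
    $1 == "MemAvailable:" { a = $2 }
    END { print t - a }
  '
}

# rss_total_kib: sum of RSS values (KiB, one per line) on stdin
rss_total_kib() {
  awk '{ s += $1 } END { print s + 0 }'
}

# kernel_fields: print selected kernel memory counters (KiB) from /proc/meminfo-style stdin
kernel_fields() {
  awk '$1 ~ /^(Slab|SReclaimable|SUnreclaim|KernelStack|PageTables|VmallocUsed|Percpu):$/ { print $1, $2 }'
}

# report USED_KIB RSS_KIB: print both totals and the unexplained gap in GiB
report() {
  awk -v u="$1" -v r="$2" 'BEGIN {
    printf "used=%.1f GiB rss=%.1f GiB gap=%.1f GiB\n", u / 1048576, r / 1048576, (u - r) / 1048576
  }'
}

# Usage on a node:
#   report "$(used_kib < /proc/meminfo)" "$(ps -eo rss= | rss_total_kib)"
#   kernel_fields < /proc/meminfo
```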

I use the PVE firewall, PVE SDN and Ceph.

But they all seem to use a normal amount of memory.
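One way to check per-service usage beyond RSS is the cgroup v2 counter `memory.current`. It also includes page cache and kernel memory charged to each unit. This is a sketch, assuming the unified cgroup v2 hierarchy is mounted at `/sys/fs/cgroup` (which I believe Proxmox VE 9 uses); the function name is hypothetical. Slices, such as the one grouping the Ceph OSD units, show their aggregated total.

```shell
#!/usr/bin/env bash
# Sketch: list the largest units under a cgroup v2 directory by memory.current
# (bytes), which counts page cache and charged kernel memory, not just RSS.

# top_units ROOT [N]: print the N (default 10) largest memory.current values in MiB
# for the unit/slice directories directly under ROOT
top_units() {
  local root=$1 n=${2:-10} f unit
  for f in "$root"/*/memory.current; do
    [ -r "$f" ] || continue               # skip if the glob matched nothing
    unit=$(basename "$(dirname "$f")")
    printf '%s %s\n' "$(cat "$f")" "$unit"
  done | sort -rn | head -n "$n" | awk '{ printf "%8.1f MiB  %s\n", $1 / 1048576, $2 }'
}

# Usage on a node:
#   top_units /sys/fs/cgroup/system.slice 15
```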

I don't use HA or ZFS.

Thanks for any help.

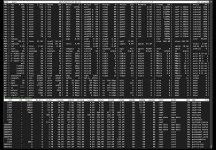

```
root@pve3:~# free -h
               total        used        free      shared  buff/cache   available
Mem:           125Gi        69Gi        41Gi        67Mi        15Gi        55Gi
Swap:          2.0Gi          0B       2.0Gi
root@pve3:~# ps faux
USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMAND
.../...
root 1 0.9 0.0 27360 17760 ? Ss Sep15 56:18 /sbin/init
root 483 0.0 0.1 252568 174060 ? Ss Sep15 0:34 /usr/lib/systemd/systemd-journald
root 532 0.0 0.0 36660 10716 ? Ss Sep15 0:00 /usr/lib/systemd/systemd-udevd
root 680 0.0 0.0 7508 4172 ? Ss Sep15 0:02 /usr/sbin/mdadm --monitor --scan
_rpc 906 0.0 0.0 6564 3780 ? Ss Sep15 0:00 /usr/sbin/rpcbind -f -w
root 940 0.0 0.0 5256 1456 ? Ss Sep15 0:00 /usr/sbin/blkmapd
ceph 1065 0.0 0.0 22272 13968 ? Ss Sep15 0:00 /usr/bin/python3 /usr/bin/ceph-crash
root 1067 0.0 0.0 8548 4240 ? Ss Sep15 0:17 /bin/bash /usr/bin/check_mk_agent
root 2634788 0.0 0.0 6012 1900 ? S 07:54 0:00 \_ sleep 60
cmk-age+ 1087 0.0 0.0 19384 5808 ? Ssl Sep15 0:54 /usr/bin/cmk-agent-ctl daemon
_chrony 1093 0.0 0.0 19916 3560 ? S Sep15 0:01 /usr/sbin/chronyd -F 1
_chrony 1094 0.0 0.0 11760 3264 ? S Sep15 0:00 \_ /usr/sbin/chronyd -F 1
message+ 1099 0.0 0.0 8220 4264 ? Ss Sep15 0:18 /usr/bin/dbus-daemon --system --address=systemd: --nofork --nopidfile --systemd-activation --syslog-only
root 1132 0.0 0.0 5516 2428 ? Ss Sep15 0:00 /usr/libexec/lxc/lxc-monitord --daemon
root 1141 0.0 0.0 276132 3616 ? Ssl Sep15 0:00 /usr/lib/x86_64-linux-gnu/pve-lxc-syscalld/pve-lxc-syscalld --system /run/pve-lxc-syscalld/socket
root 1183 0.0 0.0 617828 8084 ? Ssl Sep15 1:01 /usr/bin/rrdcached -g
root 1199 0.0 0.0 9688 5640 ? Ss Sep15 0:00 /usr/sbin/smartd -n -q never
root 1212 0.1 0.0 658484 72964 ? Ssl Sep15 6:41 /usr/bin/pmxcfs
root 1228 0.0 0.0 18616 8756 ? Ss Sep15 0:11 /usr/lib/systemd/systemd-logind
root 1235 0.0 0.0 2432 1256 ? Ss Sep15 0:08 /usr/sbin/watchdog-mux
root 1279 0.0 0.0 104396 6144 ? Ssl Sep15 0:00 /usr/sbin/zed -F
root 1288 0.0 0.0 159064 2392 ? Ssl Sep15 0:00 /usr/bin/lxcfs /var/lib/lxcfs
root 1294 0.0 0.0 11768 8000 ? Ss Sep15 0:00 sshd: /usr/sbin/sshd -D [listener] 0 of 10-100 startups
root 2634953 0.0 0.0 19340 12296 ? Ss 07:55 0:00 \_ sshd-session: root [priv]
root 2635015 0.1 0.0 19464 7000 ? S 07:55 0:00 \_ sshd-session: root@pts/0
root 2635034 0.0 0.0 8904 5632 pts/0 Ss 07:55 0:00 \_ -bash
root 2635641 0.0 0.0 6536 3756 pts/0 R+ 07:55 0:00 \_ ps faux
root 1320 0.0 0.0 2572 1252 ? S< Sep15 0:47 /usr/sbin/atopacctd
root 1337 0.0 0.0 39856 21920 ? Ss Sep15 0:19 /usr/bin/python3 /usr/share/unattended-upgrades/unattended-upgrade-shutdown --wait-for-signal
root 1347 0.0 0.0 8160 2656 tty1 Ss+ Sep15 0:00 /sbin/agetty -o -- \u --noreset --noclear - linux
root 1348 0.0 0.0 8204 2760 ttyS0 Ss+ Sep15 0:00 /sbin/agetty -o -- \u --noreset --noclear --keep-baud 115200,57600,38400,9600 - vt220
root 1368 0.0 0.0 43984 4488 ? Ss Sep15 0:00 /usr/lib/postfix/sbin/master -w
postfix 1370 0.0 0.0 44060 7424 ? S Sep15 0:00 \_ qmgr -l -t unix -u
postfix 994423 0.0 0.0 52900 13532 ? S Sep16 0:00 \_ tlsmgr -l -t unix -u -c
postfix 2606784 0.0 0.0 44008 7252 ? S 07:25 0:00 \_ pickup -l -t unix -u -c
ceph 1376 0.1 0.0 315764 53684 ? Ssl Sep15 7:00 /usr/bin/ceph-mds -f --cluster ceph --id pve3 --setuser ceph --setgroup ceph
ceph 1377 0.4 0.4 860148 628392 ? Ssl Sep15 27:08 /usr/bin/ceph-mon -f --cluster ceph --id pve3 --setuser ceph --setgroup ceph
root 1445 0.7 0.1 577396 184580 ? SLsl Sep15 44:16 /usr/sbin/corosync -f
root 1449 0.0 0.0 7268 2676 ? Ss Sep15 0:01 /usr/sbin/cron -f
root 1452 0.0 0.0 13808 6228 ? Ss Sep15 0:19 /usr/libexec/proxmox/proxmox-firewall start
root 1669 0.3 0.1 174868 137596 ? Ss Sep15 19:01 pvestatd
root 1671 0.4 0.0 177308 111988 ? Ss Sep15 27:01 pve-firewall
root 1983 0.0 0.1 216964 175344 ? Ss Sep15 0:04 pvedaemon
root 2600969 0.0 0.1 228092 157576 ? S 07:19 0:00 \_ pvedaemon worker
root 2600970 0.0 0.1 228160 157396 ? S 07:19 0:00 \_ pvedaemon worker
root 2600971 0.0 0.1 228272 157772 ? S 07:19 0:00 \_ pvedaemon worker
ceph 2268 4.1 2.0 4166004 2718636 ? Ssl Sep15 238:55 /usr/bin/ceph-osd -f --cluster ceph --id 10 --setuser ceph --setgroup ceph
ceph 2269 3.7 2.1 4120872 2784396 ? Ssl Sep15 217:36 /usr/bin/ceph-osd -f --cluster ceph --id 11 --setuser ceph --setgroup ceph
ceph 2270 3.5 1.9 3871128 2513548 ? Ssl Sep15 205:22 /usr/bin/ceph-osd -f --cluster ceph --id 8 --setuser ceph --setgroup ceph
ceph 2304 4.3 1.9 4033356 2627164 ? Ssl Sep15 249:24 /usr/bin/ceph-osd -f --cluster ceph --id 9 --setuser ceph --setgroup ceph
www-data 2774 0.0 0.1 218180 176748 ? Ss Sep15 0:06 pveproxy
www-data 2600981 0.0 0.1 227452 160268 ? S 07:19 0:00 \_ pveproxy worker
www-data 2600982 0.0 0.1 226904 158236 ? S 07:19 0:00 \_ pveproxy worker
www-data 2600983 0.0 0.1 227408 160572 ? S 07:19 0:00 \_ pveproxy worker
www-data 2821 0.0 0.0 91768 67652 ? Ss Sep15 0:04 spiceproxy
www-data 2600980 0.0 0.0 92052 56224 ? S 07:19 0:00 \_ spiceproxy worker
root 3136 0.0 0.1 199316 150768 ? Ss Sep15 0:27 pvescheduler
root 2185665 0.0 0.0 44012 43500 ? S<Ls 00:00 0:03 /usr/bin/atop -w /var/log/atop/atop_20250919 600
root 2207239 0.0 0.0 79612 2380 ? Ssl 00:22 0:02 /usr/sbin/pvefw-logger
root 2600907 0.0 0.0 5736 1408 ? Ss 07:19 0:00 /usr/sbin/qmeventd /var/run/qmeventd.sock
root 2601001 0.0 0.0 202732 119756 ? Ss 07:19 0:00 pve-ha-lrm
root 2601041 0.0 0.0 203476 120808 ? Ss 07:19 0:00 pve-ha-crm
root 2634958 0.4 0.0 22688 13000 ? Ss 07:55 0:00 /usr/lib/systemd/systemd --user
root 2634960 0.0 0.0 22384 3412 ? S 07:55 0:00 \_ (sd-pam)
```
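As a rough check against the listing above, the RSS values (in KiB, as `ps` reports them) of the four OSDs and the monitor add up to:

$$
\underbrace{2718636 + 2784396 + 2513548 + 2627164}_{\text{ceph-osd 10, 11, 8, 9}} + \underbrace{628392}_{\text{ceph-mon}} = 11272136\ \text{KiB} \approx 10.75\ \text{GiB}
$$

That is about 10.75 GiB against the 69 GiB that `free` reports as used, leaving roughly 58 GiB. Every other process shown has an RSS below 185,000 KiB (about 180 MiB). Because part of the listing is elided, this is only a partial tally.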
