After the upgrade, an error occurred on one of the nodes: the VMs located on this node that belong to the HA group are not moved to other nodes in the cluster.

When the service is restarted, it recovers, but when I try to move the VM to another node, it crashes again. If I remove the VM from HA, it migrates without problems. On the other nodes, this problem is not observed.

Please help; my Linux/Proxmox skills are very limited.

root@vp1:~# ha-manager status

quorum OK

master vp4 (active, Thu Jul 8 15:59:04 2021)

lrm master (idle, Thu Jul 8 15:59:11 2021)

lrm vp1 (old timestamp - dead?, Thu Jul 8 15:41:28 2021)

lrm vp2 (active, Thu Jul 8 15:59:14 2021)

lrm vp3 (active, Thu Jul 8 15:59:05 2021)

lrm vp4 (active, Thu Jul 8 15:59:04 2021)

service vm:100 (vp2, started)

service vm:101 (vp3, started)

service vm:102 (vp4, started)

service vm:103 (vp3, started)

service vm:104 (vp1, freeze) -- this VM is on the failed node and gets the status freeze every time, like its parent LRM
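
To make the state above easier to spot at a glance, here is a minimal sketch that scans pasted `ha-manager status` text for LRMs that are neither `active` nor `idle` and for services that are not `started`. The names `find_problems`, `LRM_RE`, `SERVICE_RE` and `SAMPLE_STATUS` are hypothetical helpers written for this post, not part of Proxmox, and the line format is assumed to match the output shown here.

```python
import re

# Matches lines such as "lrm vp1 (old timestamp - dead?, Thu Jul 8 15:41:28 2021)".
LRM_RE = re.compile(r"^lrm (\S+) \((.+), [^,]+\)$")
# Matches lines such as "service vm:104 (vp1, freeze)"; trailing notes are ignored.
SERVICE_RE = re.compile(r"^service (\S+) \((\S+), (\w+)\)")


def find_problems(status_text):
    """Return (stale_lrms, stuck_services) parsed from `ha-manager status` text."""
    stale_lrms = []
    stuck_services = []
    for line in status_text.splitlines():
        line = line.strip()
        m = LRM_RE.match(line)
        if m:
            node, state = m.groups()
            # Assumption: only "active" and "idle" count as healthy LRM states.
            if state not in ("active", "idle"):
                stale_lrms.append(node)
            continue
        m = SERVICE_RE.match(line)
        if m:
            sid, node, state = m.groups()
            if state != "started":
                stuck_services.append((sid, node, state))
    return stale_lrms, stuck_services


# A shortened copy of the output from this post.
SAMPLE_STATUS = """\
quorum OK
master vp4 (active, Thu Jul 8 15:59:04 2021)
lrm vp1 (old timestamp - dead?, Thu Jul 8 15:41:28 2021)
lrm vp2 (active, Thu Jul 8 15:59:14 2021)
service vm:100 (vp2, started)
service vm:104 (vp1, freeze)
"""

if __name__ == "__main__":
    print(find_problems(SAMPLE_STATUS))
```

On the sample, the only flagged LRM is `vp1` and the only flagged service is `vm:104`, which matches what the status output shows: the frozen VM sits on the node whose LRM has stopped updating its timestamp.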

Code:

-- Boot 0804184aa15d464695d6acc11ae0d013 --

Jul 08 14:26:48 vp1 systemd[1]: Starting PVE Local HA Resource Manager Daemon...

Jul 08 14:26:49 vp1 pve-ha-lrm[2907]: starting server

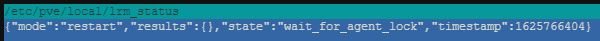

Jul 08 14:26:49 vp1 pve-ha-lrm[2907]: status change startup => wait_for_agent_lock

Jul 08 14:26:49 vp1 systemd[1]: Started PVE Local HA Resource Manager Daemon.

Jul 08 14:33:01 vp1 pve-ha-lrm[2907]: successfully acquired lock 'ha_agent_vp1_lock'

Jul 08 14:33:01 vp1 pve-ha-lrm[2907]: ERROR: unable to open watchdog socket - No such file or directory

Jul 08 14:33:01 vp1 pve-ha-lrm[2907]: restart LRM, freeze all services

Jul 08 14:33:01 vp1 pve-ha-lrm[2907]: server stopped

Jul 08 14:33:01 vp1 systemd[1]: pve-ha-lrm.service: Main process exited, code=exited, status=255/EXCEPTION

Jul 08 14:33:01 vp1 systemd[1]: pve-ha-lrm.service: Failed with result 'exit-code'.

Jul 08 14:42:22 vp1 systemd[1]: Starting PVE Local HA Resource Manager Daemon...

Jul 08 14:42:23 vp1 pve-ha-lrm[16360]: starting server

Jul 08 14:42:23 vp1 pve-ha-lrm[16360]: status change startup => wait_for_agent_lock

Jul 08 14:42:23 vp1 systemd[1]: Started PVE Local HA Resource Manager Daemon.

Jul 08 14:42:29 vp1 pve-ha-lrm[16360]: successfully acquired lock 'ha_agent_vp1_lock'

Jul 08 14:42:29 vp1 pve-ha-lrm[16360]: ERROR: unable to open watchdog socket - No such file or directory

Jul 08 14:42:29 vp1 pve-ha-lrm[16360]: restart LRM, freeze all services

Jul 08 14:42:29 vp1 pve-ha-lrm[16360]: server stopped

Jul 08 14:42:29 vp1 systemd[1]: pve-ha-lrm.service: Main process exited, code=exited, status=255/EXCEPTION

Jul 08 14:42:29 vp1 systemd[1]: pve-ha-lrm.service: Failed with result 'exit-code'.

Jul 08 14:43:06 vp1 systemd[1]: Starting PVE Local HA Resource Manager Daemon...

Jul 08 14:43:07 vp1 pve-ha-lrm[17441]: starting server

Jul 08 14:43:07 vp1 pve-ha-lrm[17441]: status change startup => wait_for_agent_lock

Jul 08 14:43:07 vp1 systemd[1]: Started PVE Local HA Resource Manager Daemon.

Jul 08 14:44:33 vp1 pve-ha-lrm[17441]: successfully acquired lock 'ha_agent_vp1_lock'

Jul 08 14:44:33 vp1 pve-ha-lrm[17441]: ERROR: unable to open watchdog socket - No such file or directory

Jul 08 14:44:33 vp1 pve-ha-lrm[17441]: restart LRM, freeze all services

Jul 08 14:44:33 vp1 pve-ha-lrm[17441]: server stopped

Jul 08 14:44:33 vp1 systemd[1]: pve-ha-lrm.service: Main process exited, code=exited, status=255/EXCEPTION

Jul 08 14:44:33 vp1 systemd[1]: pve-ha-lrm.service: Failed with result 'exit-code'.

Jul 08 15:24:21 vp1 systemd[1]: Starting PVE Local HA Resource Manager Daemon...

Jul 08 15:24:22 vp1 pve-ha-lrm[60122]: starting server

Jul 08 15:24:22 vp1 pve-ha-lrm[60122]: status change startup => wait_for_agent_lock

Jul 08 15:24:22 vp1 systemd[1]: Started PVE Local HA Resource Manager Daemon.

Jul 08 15:24:44 vp1 systemd[1]: Stopping PVE Local HA Resource Manager Daemon...

Jul 08 15:24:45 vp1 pve-ha-lrm[60122]: received signal TERM

Jul 08 15:24:45 vp1 pve-ha-lrm[60122]: restart LRM, freeze all services

Jul 08 15:24:45 vp1 pve-ha-lrm[60122]: server stopped

Jul 08 15:24:46 vp1 systemd[1]: pve-ha-lrm.service: Succeeded.

Jul 08 15:24:46 vp1 systemd[1]: Stopped PVE Local HA Resource Manager Daemon.

Jul 08 15:24:46 vp1 systemd[1]: pve-ha-lrm.service: Consumed 1.408s CPU time.

Jul 08 15:24:46 vp1 systemd[1]: Starting PVE Local HA Resource Manager Daemon...

Jul 08 15:24:47 vp1 pve-ha-lrm[60684]: starting server

Jul 08 15:24:47 vp1 pve-ha-lrm[60684]: status change startup => wait_for_agent_lock

Jul 08 15:24:47 vp1 systemd[1]: Started PVE Local HA Resource Manager Daemon.

Jul 08 15:33:25 vp1 pve-ha-lrm[60684]: successfully acquired lock 'ha_agent_vp1_lock'

Jul 08 15:33:25 vp1 pve-ha-lrm[60684]: ERROR: unable to open watchdog socket - No such file or directory

Jul 08 15:33:25 vp1 pve-ha-lrm[60684]: restart LRM, freeze all services

Jul 08 15:33:25 vp1 pve-ha-lrm[60684]: server stopped

Jul 08 15:33:25 vp1 systemd[1]: pve-ha-lrm.service: Main process exited, code=exited, status=255/EXCEPTION

Jul 08 15:33:25 vp1 systemd[1]: pve-ha-lrm.service: Failed with result 'exit-code'.

Jul 08 15:38:08 vp1 systemd[1]: Starting PVE Local HA Resource Manager Daemon...

Jul 08 15:38:09 vp1 pve-ha-lrm[71994]: starting server

Jul 08 15:38:09 vp1 pve-ha-lrm[71994]: status change startup => wait_for_agent_lock

Jul 08 15:38:09 vp1 systemd[1]: Started PVE Local HA Resource Manager Daemon.

Jul 08 15:39:25 vp1 pve-ha-lrm[71994]: successfully acquired lock 'ha_agent_vp1_lock'

Jul 08 15:39:25 vp1 pve-ha-lrm[71994]: ERROR: unable to open watchdog socket - No such file or directory

Jul 08 15:39:25 vp1 pve-ha-lrm[71994]: restart LRM, freeze all services

Jul 08 15:39:25 vp1 pve-ha-lrm[71994]: server stopped

Jul 08 15:39:25 vp1 systemd[1]: pve-ha-lrm.service: Main process exited, code=exited, status=255/EXCEPTION

Jul 08 15:39:25 vp1 systemd[1]: pve-ha-lrm.service: Failed with result 'exit-code'.

Jul 08 15:41:12 vp1 systemd[1]: Starting PVE Local HA Resource Manager Daemon...

Jul 08 15:41:12 vp1 pve-ha-lrm[74821]: starting server

Jul 08 15:41:12 vp1 pve-ha-lrm[74821]: status change startup => wait_for_agent_lock

Jul 08 15:41:12 vp1 systemd[1]: Started PVE Local HA Resource Manager Daemon.

Jul 08 15:41:28 vp1 pve-ha-lrm[74821]: successfully acquired lock 'ha_agent_vp1_lock'

Jul 08 15:41:28 vp1 pve-ha-lrm[74821]: ERROR: unable to open watchdog socket - No such file or directory

Jul 08 15:41:28 vp1 pve-ha-lrm[74821]: restart LRM, freeze all services

Jul 08 15:41:28 vp1 pve-ha-lrm[74821]: server stopped

Jul 08 15:41:28 vp1 systemd[1]: pve-ha-lrm.service: Main process exited, code=exited, status=255/EXCEPTION

Jul 08 15:41:28 vp1 systemd[1]: pve-ha-lrm.service: Failed with result 'exit-code'.
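
The pattern in the log above is the same on every run that got as far as acquiring the lock: `pve-ha-lrm` takes `ha_agent_vp1_lock`, fails to open the watchdog socket, and exits. A minimal sketch for pulling those runs out of pasted journal text follows; `watchdog_failures`, `LINE_RE` and `SAMPLE_LOG` are hypothetical names for this post, and the line format is assumed to match the journal output shown here.

```python
import re

# Captures the PID and message from lines like
# "Jul 08 14:33:01 vp1 pve-ha-lrm[2907]: ERROR: unable to open watchdog socket - ..."
LINE_RE = re.compile(r"pve-ha-lrm\[(\d+)\]: (.*)$")


def watchdog_failures(journal_text):
    """Return the PIDs of pve-ha-lrm runs that logged the watchdog socket error."""
    failed = []
    for line in journal_text.splitlines():
        m = LINE_RE.search(line)
        if m and "unable to open watchdog socket" in m.group(2):
            failed.append(int(m.group(1)))
    return failed


# A few lines copied from the log in this post.
SAMPLE_LOG = """\
Jul 08 14:33:01 vp1 pve-ha-lrm[2907]: successfully acquired lock 'ha_agent_vp1_lock'
Jul 08 14:33:01 vp1 pve-ha-lrm[2907]: ERROR: unable to open watchdog socket - No such file or directory
Jul 08 15:24:45 vp1 pve-ha-lrm[60122]: received signal TERM
Jul 08 15:33:25 vp1 pve-ha-lrm[60684]: ERROR: unable to open watchdog socket - No such file or directory
"""

if __name__ == "__main__":
    print(watchdog_failures(SAMPLE_LOG))
```

In the full log, the only run without this error is PID 60122, which was stopped by a TERM signal before it acquired the lock.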
