vm-106-disk-0 backup-drive Vwi-aotz-- 50.00g backup-drive snap_vm-106-disk-0_docker 29.08

So it's a 50G volume. Maybe your snapshots and backups still use 26G even if only 24G is in use inside the VM now? And is your backup-drive a pure volume too?

I still wonder why anybody likes all this confusing LVM output, which is hard to read, to understand and to handle ...

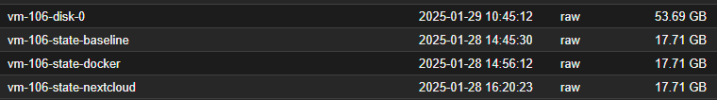

We do everything purely file-based (completely without LVM) because that's user-friendly: reverting to an older state is just a reflink copy (`cp --reflink`) that takes a few msec (!!), and the files can easily be replicated to a remote host, e.g.:

```
[root@srv1 images]# ll 158
total 16787736
-rw-r----- 1 root root 17182752768 Jan 30 18:09 vm-158-disk-0.qcow2
-rw-r----- 1 root root 34365243392 Nov 20 00:09 vm-158-disk-1.qcow2
[root@srv1 images]# time find ../../.xfssnaps/ -name "vm-158*" -ls # look for available older versions under 2s
80198541 16780644 -rw-r----- 1 root root 17182752768 Dec 15 00:01 ../../.xfssnaps/weekly.2024-12-15_0001/srv/data/images/158/vm-158-disk-0.qcow2
80198542 5320 -rw-r----- 1 root root 34365243392 Nov 20 00:09 ../../.xfssnaps/weekly.2024-12-15_0001/srv/data/images/158/vm-158-disk-1.qcow2
36507251105 16780736 -rw-r----- 1 root root 17182752768 Dec 22 00:01 ../../.xfssnaps/weekly.2024-12-22_0001/srv/data/images/158/vm-158-disk-0.qcow2
36507251106 5320 -rw-r----- 1 root root 34365243392 Nov 20 00:09 ../../.xfssnaps/weekly.2024-12-22_0001/srv/data/images/158/vm-158-disk-1.qcow2
36507233543 16780828 -rw-r----- 1 root root 17182752768 Dec 29 00:01 ../../.xfssnaps/weekly.2024-12-29_0001/srv/data/images/158/vm-158-disk-0.qcow2
36507233544 5320 -rw-r----- 1 root root 34365243392 Nov 20 00:09 ../../.xfssnaps/weekly.2024-12-29_0001/srv/data/images/158/vm-158-disk-1.qcow2
45097243182 16780872 -rw-r----- 1 root root 17182752768 Jan 1 00:01 ../../.xfssnaps/monthly.2025-01-01_0001/srv/data/images/158/vm-158-disk-0.qcow2
45097243183 5320 -rw-r----- 1 root root 34365243392 Nov 20 00:09 ../../.xfssnaps/monthly.2025-01-01_0001/srv/data/images/158/vm-158-disk-1.qcow2
51539617185 16781028 -rw-r----- 1 root root 17182752768 Jan 12 00:01 ../../.xfssnaps/weekly.2025-01-12_0001/srv/data/images/158/vm-158-disk-0.qcow2
51539617186 5320 -rw-r----- 1 root root 34365243392 Nov 20 00:09 ../../.xfssnaps/weekly.2025-01-12_0001/srv/data/images/158/vm-158-disk-1.qcow2
12889668664 16781092 -rw-r----- 1 root root 17182752768 Jan 16 00:01 ../../.xfssnaps/daily.2025-01-16_0001/srv/data/images/158/vm-158-disk-0.qcow2
12889668665 5320 -rw-r----- 1 root root 34365243392 Nov 20 00:09 ../../.xfssnaps/daily.2025-01-16_0001/srv/data/images/158/vm-158-disk-1.qcow2
57986972344 16781128 -rw-r----- 1 root root 17182752768 Jan 19 00:01 ../../.xfssnaps/weekly.2025-01-19_0001/srv/data/images/158/vm-158-disk-0.qcow2
57986972345 5320 -rw-r----- 1 root root 34365243392 Nov 20 00:09 ../../.xfssnaps/weekly.2025-01-19_0001/srv/data/images/158/vm-158-disk-1.qcow2
25769966847 16781152 -rw-r----- 1 root root 17182752768 Jan 21 00:01 ../../.xfssnaps/daily.2025-01-21_0001/srv/data/images/158/vm-158-disk-0.qcow2
25769974107 5320 -rw-r----- 1 root root 34365243392 Nov 20 00:09 ../../.xfssnaps/daily.2025-01-21_0001/srv/data/images/158/vm-158-disk-1.qcow2
4313250683 16781172 -rw-r----- 1 root root 17182752768 Jan 22 00:01 ../../.xfssnaps/daily.2025-01-22_0001/srv/data/images/158/vm-158-disk-0.qcow2
4313250684 5320 -rw-r----- 1 root root 34365243392 Nov 20 00:09 ../../.xfssnaps/daily.2025-01-22_0001/srv/data/images/158/vm-158-disk-1.qcow2
40802190024 16781212 -rw-r----- 1 root root 17182752768 Jan 25 00:01 ../../.xfssnaps/daily.2025-01-25_0001/srv/data/images/158/vm-158-disk-0.qcow2
40802190025 5320 -rw-r----- 1 root root 34365243392 Nov 20 00:09 ../../.xfssnaps/daily.2025-01-25_0001/srv/data/images/158/vm-158-disk-1.qcow2
10743807179 16781228 -rw-r----- 1 root root 17182752768 Jan 26 00:00 ../../.xfssnaps/weekly.2025-01-26_0001/srv/data/images/158/vm-158-disk-0.qcow2
10743807180 5320 -rw-r----- 1 root root 34365243392 Nov 20 00:09 ../../.xfssnaps/weekly.2025-01-26_0001/srv/data/images/158/vm-158-disk-1.qcow2
51539634367 16781264 -rw-r----- 1 root root 17182752768 Jan 29 00:01 ../../.xfssnaps/daily.2025-01-29_0001/srv/data/images/158/vm-158-disk-0.qcow2
51539653474 5320 -rw-r----- 1 root root 34365243392 Nov 20 00:09 ../../.xfssnaps/daily.2025-01-29_0001/srv/data/images/158/vm-158-disk-1.qcow2
23626639150 16781276 -rw-r----- 1 root root 17182752768 Jan 30 00:01 ../../.xfssnaps/daily.2025-01-30_0001/srv/data/images/158/vm-158-disk-0.qcow2
23626639151 5320 -rw-r----- 1 root root 34365243392 Nov 20 00:09 ../../.xfssnaps/daily.2025-01-30_0001/srv/data/images/158/vm-158-disk-1.qcow2
8599215162 16780548 -rw-r----- 1 root root 17182752768 Dec 8 00:01 ../../.xfssnaps/weekly.2024-12-08_0001/srv/data/images/158/vm-158-disk-0.qcow2
8599215163 5320 -rw-r----- 1 root root 34365243392 Nov 20 00:09 ../../.xfssnaps/weekly.2024-12-08_0001/srv/data/images/158/vm-158-disk-1.qcow2
23627024731 16780932 -rw-r----- 1 root root 17182752768 Jan 5 00:01 ../../.xfssnaps/weekly.2025-01-05_0001/srv/data/images/158/vm-158-disk-0.qcow2
23627024732 5320 -rw-r----- 1 root root 34365243392 Nov 20 00:09 ../../.xfssnaps/weekly.2025-01-05_0001/srv/data/images/158/vm-158-disk-1.qcow2
40802216371 16781108 -rw-r----- 1 root root 17182752768 Jan 17 00:01 ../../.xfssnaps/daily.2025-01-17_0001/srv/data/images/158/vm-158-disk-0.qcow2
40802216372 5320 -rw-r----- 1 root root 34365243392 Nov 20 00:09 ../../.xfssnaps/daily.2025-01-17_0001/srv/data/images/158/vm-158-disk-1.qcow2
17180484214 16781116 -rw-r----- 1 root root 17182752768 Jan 18 00:01 ../../.xfssnaps/daily.2025-01-18_0001/srv/data/images/158/vm-158-disk-0.qcow2
17180484215 5320 -rw-r----- 1 root root 34365243392 Nov 20 00:09 ../../.xfssnaps/daily.2025-01-18_0001/srv/data/images/158/vm-158-disk-1.qcow2
45097165729 16781140 -rw-r----- 1 root root 17182752768 Jan 20 00:01 ../../.xfssnaps/daily.2025-01-20_0001/srv/data/images/158/vm-158-disk-0.qcow2
45097165730 5320 -rw-r----- 1 root root 34365243392 Nov 20 00:09 ../../.xfssnaps/daily.2025-01-20_0001/srv/data/images/158/vm-158-disk-1.qcow2
47244642739 16781180 -rw-r----- 1 root root 17182752768 Jan 23 00:01 ../../.xfssnaps/daily.2025-01-23_0001/srv/data/images/158/vm-158-disk-0.qcow2
47244642740 5320 -rw-r----- 1 root root 34365243392 Nov 20 00:09 ../../.xfssnaps/daily.2025-01-23_0001/srv/data/images/158/vm-158-disk-1.qcow2
25769994814 16781192 -rw-r----- 1 root root 17182752768 Jan 24 00:01 ../../.xfssnaps/daily.2025-01-24_0001/srv/data/images/158/vm-158-disk-0.qcow2
25769994815 5320 -rw-r----- 1 root root 34365243392 Nov 20 00:09 ../../.xfssnaps/daily.2025-01-24_0001/srv/data/images/158/vm-158-disk-1.qcow2
45097348065 16781240 -rw-r----- 1 root root 17182752768 Jan 27 00:01 ../../.xfssnaps/daily.2025-01-27_0001/srv/data/images/158/vm-158-disk-0.qcow2
45097348066 5320 -rw-r----- 1 root root 34365243392 Nov 20 00:09 ../../.xfssnaps/daily.2025-01-27_0001/srv/data/images/158/vm-158-disk-1.qcow2
17180554000 16781248 -rw-r----- 1 root root 17182752768 Jan 28 00:01 ../../.xfssnaps/daily.2025-01-28_0001/srv/data/images/158/vm-158-disk-0.qcow2
17180554001 5320 -rw-r----- 1 root root 34365243392 Nov 20 00:09 ../../.xfssnaps/daily.2025-01-28_0001/srv/data/images/158/vm-158-disk-1.qcow2

real 0m1.754s
user 0m0.762s
sys 0m0.937s
```
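To make the "revert is just a reflink copy" part concrete, here is a rough sketch of what such a restore could look like. It is not our exact script: the function name `revert_disk` is made up for illustration, and it assumes GNU `cp`, a stopped VM, and that the snapshot directory and the live image sit on the same XFS filesystem with reflink support. With `--reflink=auto`, `cp` falls back to a normal full copy where reflinks are not available, so the few-msec timing only holds in the reflink case.

```shell
#!/bin/sh
# Hypothetical helper (name and layout are assumptions, not from the post):
# restore a VM disk image from an older snapshot copy.
# Stop the VM before calling this.
revert_disk() {
    snap_file=$1   # older version, e.g. a file found under ../../.xfssnaps/
    live_file=$2   # image currently used by the VM, e.g. 158/vm-158-disk-0.qcow2

    if [ ! -f "$snap_file" ]; then
        echo "no such snapshot file: $snap_file" >&2
        return 1
    fi

    # Copy next to the live image first; on a reflink-capable filesystem
    # this only shares extents and copies no data blocks.
    cp -p --reflink=auto "$snap_file" "$live_file.restore.$$" || return 1

    # Then replace the live image with a rename on the same filesystem.
    mv -f "$live_file.restore.$$" "$live_file"
}
```

Called for the listing above it would be something like `revert_disk ../../.xfssnaps/daily.2025-01-30_0001/srv/data/images/158/vm-158-disk-0.qcow2 158/vm-158-disk-0.qcow2`. Copying to a temporary name and renaming afterwards is just a precaution so that a failed copy never leaves a half-written live image.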