Hello,

I'm writing about a problem with the LXC container that runs my Proxmox Backup Server (PBS).

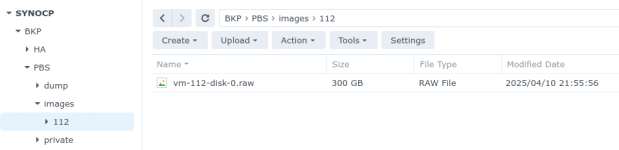


Some time ago I found a video explaining how to mount an NFS share on my Proxmox VE host as storage (I later changed it to SMB). That storage was then added to the LXC container's options as a mount point. Once PBS is running, the datastore is created on this mount point, and once the datastore exists, a new storage pointing to it is added in Proxmox VE, which the backup job then uses to make scheduled backups.
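In a setup like this, the mount point ends up as an `mp0:` line in the container's configuration (as printed by `pct config 112`). Its value starts either with a host path (a bind mount) or with a storage ID followed by a volume name (a disk image allocated on that storage). The debug log further down, where the pre-start hook mounts `/dev/loop0` for `mp0`, suggests the second case: a raw image file on the SMB storage, attached through a loop device. Below is a minimal, simplified Python sketch that tells the two forms apart; the storage ID `nas-smb`, the disk name and the size in the example are hypothetical.

```python
"""Classify a Proxmox VE container mount point entry (simplified sketch)."""


def parse_mount_point(value):
    """Split the value of an `mpN:` line from `pct config` into its parts.

    Simplified: assumes the volume comes first, as in the config file, and
    that every following comma-separated item is key=value.
    """
    volume, *items = value.split(",")
    options = dict(item.split("=", 1) for item in items)
    if volume.startswith("/"):
        # A host path: a bind mount, no storage and no disk image involved.
        return {"kind": "bind", "storage": None, "volume": volume, "options": options}
    # "storage:volname": a volume allocated on a PVE storage.
    storage = volume.split(":", 1)[0]
    return {"kind": "volume", "storage": storage, "volume": volume, "options": options}


if __name__ == "__main__":
    # Hypothetical entry: storage ID, disk name and size are made up.
    example = "nas-smb:112/vm-112-disk-0.raw,mp=/mnt/datastore,size=500G"
    print(parse_mount_point(example))
```

If `storage` comes back as the SMB storage ID, that storage has to be enabled and reachable before the container can start, because the pre-start hook needs to mount the image stored on it.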

When the host boots, everything works correctly: the container starts, and the mount points and storages work.

The problem starts when I decide that I don't want Proxmox to be constantly accessing my NAS, because then the NAS can never go to sleep and wastes energy, even though I only run backups between 4 and 5 in the morning.

So I set up a cron script that disables the storage and stops the container, but when it is time to start the container again, the start fails. I have checked everything I can think of, but I don't know what else to look at.
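For comparison, here is a minimal Python sketch of such a stop/start script; it is not the script from this post. It assumes CT 112 and two hypothetical storage IDs: `nas-smb` for the SMB storage holding the container's mount point and `pbs-nas` for the PBS storage used by the backup job. It only calls `pvesm set <storage> --disable 0|1`, `pct shutdown` and `pct start`, and the point it illustrates is ordering: the container is shut down before the storage under it is disabled, and the storage is re-enabled before the container is started.

```python
#!/usr/bin/env python3
"""Sketch of a cron helper that opens and closes a nightly backup window.

Assumptions (hypothetical, not from the original setup): the PBS container is
CT 112, "nas-smb" is the SMB/CIFS storage that holds the container's mp0
volume, and "pbs-nas" is the Proxmox Backup Server storage used by the job.
"""
import subprocess
import sys

CTID = "112"
SMB_STORAGE = "nas-smb"  # hypothetical storage ID of the SMB share
PBS_STORAGE = "pbs-nas"  # hypothetical storage ID of the PBS datastore

USAGE = "usage: pbs_window.py {stop|start} [--dry-run]"


def stop_commands(ctid=CTID, smb=SMB_STORAGE, pbs=PBS_STORAGE):
    """After the window: stop using PBS, shut the container down, then
    disable the SMB storage once the container no longer has mp0 mounted."""
    return [
        ["pvesm", "set", pbs, "--disable", "1"],
        ["pct", "shutdown", ctid, "--timeout", "120"],
        ["pvesm", "set", smb, "--disable", "1"],
    ]


def start_commands(ctid=CTID, smb=SMB_STORAGE, pbs=PBS_STORAGE):
    """Before the window: the reverse order, so the SMB storage is enabled
    before the pre-start hook tries to mount mp0."""
    return [
        ["pvesm", "set", smb, "--disable", "0"],
        ["pct", "start", ctid],
        ["pvesm", "set", pbs, "--disable", "0"],
    ]


def run(commands, dry_run=False):
    """Execute each command in order, stopping at the first failure."""
    for cmd in commands:
        if dry_run:
            print(" ".join(cmd))
        else:
            # check=True raises CalledProcessError, so a failed shutdown
            # never goes on to disable the storage underneath the container.
            subprocess.run(cmd, check=True)


def main(argv):
    if len(argv) < 2 or argv[1] not in ("stop", "start"):
        print(USAGE, file=sys.stderr)
        return 2
    commands = stop_commands() if argv[1] == "stop" else start_commands()
    run(commands, dry_run="--dry-run" in argv[2:])
    return 0


if __name__ == "__main__":
    if len(sys.argv) > 1:
        sys.exit(main(sys.argv))
    print(USAGE, file=sys.stderr)
```

With the file saved at a hypothetical path such as `/root/pbs_window.py`, root's crontab could call `python3 /root/pbs_window.py start` shortly before 4:00 and `python3 /root/pbs_window.py stop` after 5:00. Running it with `--dry-run` first prints the commands without executing them.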

The error when I start the container is the following:

```
run_buffer: 571 Script exited with status 255
lxc_init: 845 Failed to run lxc.hook.pre-start for container "112"
__lxc_start: 2034 Failed to initialize container "112"
TASK ERROR: startup for container '112' failed
```

And the output of the `pct start` command with debug is this:

```
run_buffer: 571 Script exited with status 255
lxc_init: 845 Failed to run lxc.hook.pre-start for container "112"
__lxc_start: 2034 Failed to initialize container "112"
0 hostid 100000 range 65536
INFO lsm - ../src/lxc/lsm/lsm.c:lsm_init_static:38 - Initialized LSM security driver AppArmor
INFO utils - ../src/lxc/utils.c:run_script_argv:587 - Executing script "/usr/share/lxc/hooks/lxc-pve-prestart-hook" for container "112", config section "lxc"
DEBUG utils - ../src/lxc/utils.c:run_buffer:560 - Script exec /usr/share/lxc/hooks/lxc-pve-prestart-hook 112 lxc pre-start produced output: mount: /var/lib/lxc/.pve-staged-mounts/mp0: can't read superblock on /dev/loop0.
dmesg(1) may have more information after failed mount system call.
DEBUG utils - ../src/lxc/utils.c:run_buffer:560 - Script exec /usr/share/lxc/hooks/lxc-pve-prestart-hook 112 lxc pre-start produced output: command 'mount /dev/loop0 /var/lib/lxc/.pve-staged-mounts/mp0' failed: exit code 32
ERROR utils - ../src/lxc/utils.c:run_buffer:571 - Script exited with status 255
ERROR start - ../src/lxc/start.c:lxc_init:845 - Failed to run lxc.hook.pre-start for container "112"
ERROR start - ../src/lxc/start.c:__lxc_start:2034 - Failed to initialize container "112"
INFO utils - ../src/lxc/utils.c:run_script_argv:587 - Executing script "/usr/share/lxcfs/lxc.reboot.hook" for container "112", config section "lxc"
TASK ERROR: startup for container '112' failed
```

Can someone tell me what I can check?

Thank you