Hi,

Does anyone have any idea why a Proxmox backup fails after I resized an LXC container running Debian 13?

It's as if the extra space isn't visible inside the Debian container. I'm new to Linux, so I'm probably missing something. The error is below.

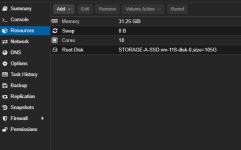

In the web interface, under Status for the LXC in question, I can see:

Bootdisk size

65.75% (64.35 GiB of 97.87 GiB)

No space left on device (28)

118: 2025-09-10 18:18:38 ERROR: rsync error: error in file IO (code 11) at receiver.c(381) [receiver=3.4.1]

118: 2025-09-10 18:18:38 ERROR: rsync: [sender] write error: Broken pipe (32)

118: 2025-09-10 18:18:46 ERROR: Backup of VM 118 failed - command 'rsync --stats -h -X -A --numeric-ids -aH --delete --no-whole-file --sparse --one-file-system --relative '--exclude=/tmp/?*' '--exclude=/var/tmp/?*' '--exclude=/var/run/?*.pid' /proc/3726308/root//./ /var/tmp/vzdumptmp3773999_118' failed: exit code 11
