# **Proxmox Multi-GPU NVIDIA VFIO Setup (P400 + GTX 1060)**

I’ve been running a home server where my GTX 1060 is dedicated to a Windows gaming/streaming VM, while my P400 serves another VM for different workloads. One challenge I quickly ran into was the **power consumption** of having both GPUs always powered, even when idle. The GTX 1060, in particular, draws a noticeable amount of power when sitting idle in a VM passthrough scenario, and the P400, while less hungry, still adds to the total.

After experimenting with GPU passthrough management and driver unbinding techniques, I managed to **significantly reduce idle power draw**, saving roughly **40 watts**. This not only reduces electricity costs but also lessens heat output and wear on the hardware, which matters for a system that runs 24/7.

The struggle was balancing **VM accessibility** with **host control**, making sure I could still assign the GPUs to VMs when needed without leaving them permanently consuming power on the host.

**Important:** Both GPUs **must be present on the host during driver installation** so that the NVIDIA persistence daemon can manage both devices properly.

---

## **Step 0 — Preparation**

1. Update system and install build tools:

```bash
apt update && apt full-upgrade -y
apt install build-essential dkms pve-headers pkg-config
```

2. Check kernel and headers:

```bash
uname -r
dpkg -l | grep pve-headers
```

- Ensure headers match the running kernel (e.g., `6.14.11-2-pve`).
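The header check can also be scripted. The sketch below assumes that DKMS builds against `/lib/modules/<release>/build`, which the headers package provides; `have_headers_for` is a hypothetical helper name, and its optional second argument (a root prefix) exists only so the function can be tried against a scratch directory.

```bash
#!/usr/bin/env bash
# Sketch: succeed only if kernel headers are present for a given kernel release.
# have_headers_for is a hypothetical helper, not a Proxmox or DKMS command.
have_headers_for() {
    local kernel="$1" root="${2:-}"
    # The headers package installs the build tree that DKMS compiles against.
    [ -e "${root}/lib/modules/${kernel}/build" ]
}
```

Typical use on the host would be `have_headers_for "$(uname -r)" || echo "headers missing for $(uname -r)"`.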

3. Blacklist conflicting modules in `/etc/modprobe.d/blacklist.conf`:

```
blacklist nouveau
blacklist radeon
#blacklist nvidia
```

Update initramfs and reboot:

```bash
update-initramfs -u
reboot
```
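After the reboot it is worth confirming that the blacklist took effect. A minimal sketch, assuming the standard `/proc/modules` layout where each line starts with the module name; `module_loaded` is a hypothetical helper, and the optional second argument only lets it read a different file for testing.

```bash
#!/usr/bin/env bash
# Sketch: report whether a kernel module is currently loaded.
# module_loaded is a hypothetical helper name.
module_loaded() {
    local name="$1" modules_file="${2:-/proc/modules}"
    # Match the exact module name followed by a space, so "nvidia"
    # does not also match "nvidia_uvm".
    grep -q "^${name} " "$modules_file"
}
```

For example, `module_loaded nouveau && echo "nouveau is still loaded"` should print nothing once the blacklist is active.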

---

## **Step 1 — Prepare GPUs for Driver Installation**

1. List all NVIDIA GPUs:

```bash
lspci -nn | grep -i nvidia
```

- Example output (abbreviated):

```
02:00.0 Quadro P400
02:00.1 P400 Audio
65:00.0 GTX 1060
65:00.1 GTX 1060 Audio
```

2. **Important:** Both P400 and GTX 1060 **must be visible to the host** when installing the NVIDIA driver. This ensures the NVIDIA persistence daemon can manage both GPUs, allowing idle power management and avoiding errors during passthrough.

3. If a GPU is currently bound to `vfio-pci` (here the GTX 1060), temporarily unbind it and clear its `driver_override` **only for driver installation**, so the NVIDIA driver can see it:

- For my GTX 1060:

```bash
echo 0000:65:00.0 > /sys/bus/pci/devices/0000:65:00.0/driver/unbind
echo 0000:65:00.1 > /sys/bus/pci/devices/0000:65:00.1/driver/unbind
echo "" > /sys/bus/pci/devices/0000:65:00.0/driver_override
echo "" > /sys/bus/pci/devices/0000:65:00.1/driver_override
```

- For my Quadro P400:

```bash
echo 0000:02:00.0 > /sys/bus/pci/devices/0000:02:00.0/driver/unbind
echo 0000:02:00.1 > /sys/bus/pci/devices/0000:02:00.1/driver/unbind
echo "" > /sys/bus/pci/devices/0000:02:00.0/driver_override
echo "" > /sys/bus/pci/devices/0000:02:00.1/driver_override
```

- After installation, both GPUs should be visible to the host again under `nvidia-smi`.
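The unbind-and-clear sequence is the same for every PCI function, so it can be wrapped in one function. This is only a sketch: `release_device` is a hypothetical name, and the optional second argument (an alternative sysfs root) is there purely so the function can be exercised against a scratch directory instead of the real `/sys`.

```bash
#!/usr/bin/env bash
# Sketch: detach a PCI function from its current driver and clear driver_override.
# release_device is a hypothetical helper; pass the full address, e.g. 0000:65:00.0.
release_device() {
    local bdf="$1" sysfs="${2:-/sys}"
    local dev="${sysfs}/bus/pci/devices/${bdf}"

    [ -d "$dev" ] || { echo "no such PCI device: $bdf" >&2; return 1; }

    # Unbind only if a driver is attached; otherwise the path does not exist.
    if [ -e "${dev}/driver/unbind" ]; then
        echo "$bdf" > "${dev}/driver/unbind"
    fi

    # Writing an empty line clears any previously set driver_override.
    echo "" > "${dev}/driver_override"
}
```

On my addresses this would be called as `release_device 0000:65:00.0` and `release_device 0000:65:00.1`, as root.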

---

## **Step 2 — Install NVIDIA Driver**

1. Make the installer executable (it can be downloaded from NVIDIA's driver download page):

```bash
chmod +x NVIDIA-Linux-x86_64-*.run
```

2. Run the installer:

```bash
./NVIDIA-Linux-x86_64-*.run
```

- **Important:** Make sure both GPUs are visible on the host **during installation**. This ensures the NVIDIA persistence daemon can initialize both devices.

- Choose **No** for module signing if Secure Boot is disabled.

- Ignore X or 32-bit warnings; they are not needed for headless VFIO setups.

- Confirm installation of `nvidia-persistenced` if prompted.

---

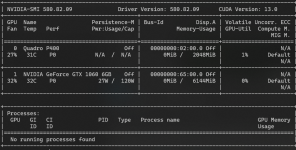

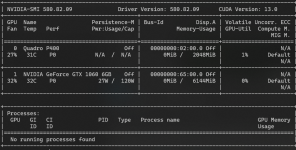

## **Step 3 — Verify Driver Installation**

```bash
nvidia-smi
lsmod | grep nvidia
```

- Both P400 and GTX 1060 should appear.

- Persistence mode for both GPUs can now be managed via the NVIDIA persistence daemon.

- If one GPU is missing, **persistence mode will not work correctly** for that device, potentially causing errors during passthrough or idle power issues.
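To make the "both GPUs should appear" check explicit, the query output of `nvidia-smi` can be compared against the expected bus addresses. The sketch below assumes `nvidia-smi` typically prints bus IDs with an eight-digit domain prefix (for example `00000000:65:00.0`), so it matches on the `bus:device.function` suffix only; `missing_gpus` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: print every expected bus address that is absent from nvidia-smi output.
# Reads the output of
#   nvidia-smi --query-gpu=pci.bus_id,name,persistence_mode --format=csv,noheader
# on stdin. missing_gpus is a hypothetical helper name.
missing_gpus() {
    local seen want missing=0
    seen="$(cat)"
    for want in "$@"; do
        # Case-insensitive fixed-string match on the bus:device.function suffix.
        if ! printf '%s\n' "$seen" | grep -qiF -- "$want"; then
            echo "$want"
            missing=1
        fi
    done
    return "$missing"
}
```

With my addresses the call would look like `nvidia-smi --query-gpu=pci.bus_id,name,persistence_mode --format=csv,noheader | missing_gpus 02:00.0 65:00.0`; no output and exit status 0 mean both cards are visible to the driver.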

## **Step 4 — Enable NVIDIA Persistence Daemon**

Create the systemd service:

```bash
cat <<EOF > /etc/systemd/system/nvidia-persistenced.service
[Unit]
Description=NVIDIA Persistence Daemon
After=network.target

[Service]
Type=forking
ExecStart=/usr/bin/nvidia-persistenced --user root --no-persistence-mode
ExecStop=/usr/bin/nvidia-persistenced --terminate
RemainAfterExit=yes

[Install]
WantedBy=multi-user.target
EOF
```

Reload systemd and enable:

```bash
systemctl daemon-reload
systemctl enable nvidia-persistenced
systemctl start nvidia-persistenced
systemctl status nvidia-persistenced
```

- **Both GPUs must be visible to the host** at this point for the daemon to manage persistence mode correctly.

## **Step 5 — Return GTX 1060 to VFIO for Passthrough**

```bash
echo vfio-pci > /sys/bus/pci/devices/0000:65:00.0/driver_override
echo vfio-pci > /sys/bus/pci/devices/0000:65:00.1/driver_override
echo 0000:65:00.0 > /sys/bus/pci/drivers_probe
echo 0000:65:00.1 > /sys/bus/pci/drivers_probe
```

- VM 311 will now use GTX 1060.

- Host keeps control of P400 for persistence and idle management.
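To confirm which driver each function ended up with, the `driver` symlink in sysfs can be read directly. A small sketch, where `current_driver` is a hypothetical helper and the optional second argument (an alternative sysfs root) is only there for testing:

```bash
#!/usr/bin/env bash
# Sketch: print the name of the driver bound to a PCI function, or "none".
# current_driver is a hypothetical helper; pass the full address, e.g. 0000:65:00.0.
current_driver() {
    local bdf="$1" sysfs="${2:-/sys}"
    local link="${sysfs}/bus/pci/devices/${bdf}/driver"
    if [ -L "$link" ]; then
        # The symlink target ends in the driver name, e.g. .../drivers/vfio-pci
        basename "$(readlink "$link")"
    else
        echo "none"
    fi
}
```

After this step, `current_driver 0000:65:00.0` should report `vfio-pci` and `current_driver 0000:02:00.0` should report `nvidia`. The same information is shown by `lspci -nnk -s 65:00.0` under "Kernel driver in use".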

---

## **Step 6 — Hook Scripts for Automatic Passthrough**

I created two hook scripts in the Proxmox snippets directory:

```bash
nano /var/lib/vz/snippets/gtx1060.sh
nano /var/lib/vz/snippets/p400.sh
```

### **GTX 1060 → VM 311**

```bash
#!/usr/bin/env bash
# GTX 1060 GPU passthrough hook for VM 311
# Proxmox calls the hook with the VM ID as $1 and the phase as $2.

GPU_VGA="0000:65:00.0"   # Replace with your GPU PCI ID
GPU_AUDIO="0000:65:00.1" # Replace with HDMI/audio PCI ID

if [ "$2" == "pre-start" ]; then
    # Disable persistence mode to allow unbinding
    nvidia-smi -i "$GPU_VGA" --persistence-mode=0

    # Unbind GPU and audio from their current host drivers
    echo "$GPU_VGA" > "/sys/bus/pci/devices/$GPU_VGA/driver/unbind"
    echo "$GPU_AUDIO" > "/sys/bus/pci/devices/$GPU_AUDIO/driver/unbind"

    # Override driver to vfio-pci
    echo vfio-pci > "/sys/bus/pci/devices/$GPU_VGA/driver_override"
    echo vfio-pci > "/sys/bus/pci/devices/$GPU_AUDIO/driver_override"

    # Bind GPU and audio to vfio-pci for VM passthrough
    echo "$GPU_VGA" > /sys/bus/pci/drivers/vfio-pci/bind
    echo "$GPU_AUDIO" > /sys/bus/pci/drivers/vfio-pci/bind

elif [ "$2" == "post-stop" ]; then
    # Unbind GPU and audio from vfio-pci
    echo "$GPU_VGA" > "/sys/bus/pci/devices/$GPU_VGA/driver/unbind"
    echo "$GPU_AUDIO" > "/sys/bus/pci/devices/$GPU_AUDIO/driver/unbind"

    # Rebind the GPU to the NVIDIA driver (the audio function stays unbound)
    echo nvidia > "/sys/bus/pci/devices/$GPU_VGA/driver_override"
    echo "$GPU_VGA" > /sys/bus/pci/drivers/nvidia/bind

    # Re-enable persistence mode for host use
    nvidia-smi -i "$GPU_VGA" --persistence-mode=1
fi

exit 0
```

### **P400 → VM 306**

```bash
#!/usr/bin/env bash
# Quadro P400 GPU passthrough hook for VM 306
# Proxmox calls the hook with the VM ID as $1 and the phase as $2.

GPU_VGA="0000:02:00.0"   # Replace with your GPU PCI ID
GPU_AUDIO="0000:02:00.1" # Replace with HDMI/audio PCI ID

if [ "$2" == "pre-start" ]; then
    # Disable persistence mode to allow unbinding
    nvidia-smi -i "$GPU_VGA" --persistence-mode=0

    # Unbind GPU and audio from their current host drivers
    echo "$GPU_VGA" > "/sys/bus/pci/devices/$GPU_VGA/driver/unbind"
    echo "$GPU_AUDIO" > "/sys/bus/pci/devices/$GPU_AUDIO/driver/unbind"

    # Override driver to vfio-pci
    echo vfio-pci > "/sys/bus/pci/devices/$GPU_VGA/driver_override"
    echo vfio-pci > "/sys/bus/pci/devices/$GPU_AUDIO/driver_override"

    # Bind GPU and audio to vfio-pci for VM passthrough
    echo "$GPU_VGA" > /sys/bus/pci/drivers/vfio-pci/bind
    echo "$GPU_AUDIO" > /sys/bus/pci/drivers/vfio-pci/bind

elif [ "$2" == "post-stop" ]; then
    # Unbind GPU and audio from vfio-pci
    echo "$GPU_VGA" > "/sys/bus/pci/devices/$GPU_VGA/driver/unbind"
    echo "$GPU_AUDIO" > "/sys/bus/pci/devices/$GPU_AUDIO/driver/unbind"

    # Rebind the GPU to the NVIDIA driver (the audio function stays unbound)
    echo nvidia > "/sys/bus/pci/devices/$GPU_VGA/driver_override"
    echo "$GPU_VGA" > /sys/bus/pci/drivers/nvidia/bind

    # Re-enable persistence mode for host use
    nvidia-smi -i "$GPU_VGA" --persistence-mode=1
fi

exit 0
```

**Then make both scripts executable:**

```bash
chmod +x /var/lib/vz/snippets/gtx1060.sh
chmod +x /var/lib/vz/snippets/p400.sh
```

VM configs (`/etc/pve/qemu-server/<vmid>.conf`):

```ini
# VM 311
hookscript: local:snippets/gtx1060.sh

# VM 306
hookscript: local:snippets/p400.sh
```
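Since the two hook scripts differ only in their PCI addresses, one shared script could pick the devices from the VM ID that Proxmox passes as `$1`. The lookup below is a sketch of that idea rather than what I actually run; `gpu_for_vm` is a hypothetical function name and the VM IDs and addresses are the ones from my setup.

```bash
#!/usr/bin/env bash
# Sketch: map a VM ID to the PCI functions (VGA and audio) it should receive.
# gpu_for_vm is a hypothetical helper; adjust IDs and addresses to your system.
gpu_for_vm() {
    case "$1" in
        311) echo "0000:65:00.0 0000:65:00.1" ;;  # GTX 1060 + audio
        306) echo "0000:02:00.0 0000:02:00.1" ;;  # Quadro P400 + audio
        *)   return 1 ;;                           # VM has no GPU assigned
    esac
}

# Inside a shared hook script, the lookup would replace the hard-coded variables:
#   if devices="$(gpu_for_vm "$1")"; then
#       read -r GPU_VGA GPU_AUDIO <<< "$devices"
#   else
#       exit 0
#   fi
```

Both VMs could then point their `hookscript:` line at the same file.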

---

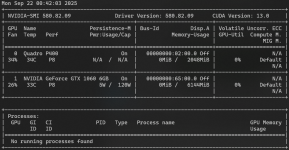

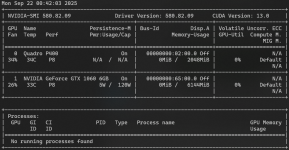

## **Step 7 — Test Setup**

1. Reboot host for a clean state.

2. Start VM 311 → GTX 1060 is assigned to VM.

3. Stop VM 311 → GTX 1060 returns to host.

4. Start VM 306 → P400 is assigned to VM.

5. Stop VM 306 → P400 returns to host.

Verify:

```bash
nvidia-smi # Host GPU should be idle when not used by VMs
systemctl status nvidia-persistenced
```
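For a rough before/after comparison of idle draw, the per-GPU readings from `nvidia-smi` can be summed. This is a sketch under two assumptions: only GPUs currently bound to the `nvidia` driver show up (a card handed to `vfio-pci` is invisible to `nvidia-smi`), and some cards report a non-numeric value such as `[N/A]` for power, which is skipped. `total_watts` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: sum the power readings printed by
#   nvidia-smi --query-gpu=power.draw --format=csv,noheader,nounits
# total_watts is a hypothetical helper that reads one value per line on stdin.
total_watts() {
    # Add up numeric lines only; anything else (e.g. "[N/A]") is ignored.
    awk '$1 ~ /^[0-9]+(\.[0-9]+)?$/ { sum += $1 } END { printf "%.1f\n", sum }'
}
```

Usage: `nvidia-smi --query-gpu=power.draw --format=csv,noheader,nounits | total_watts`. A wall-socket meter remains the more reliable way to measure whole-system savings.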

---

**Result**

- Both GPUs managed by host initially.

- Each GPU assigned to its own VM when running.

- NVIDIA persistence daemon ensures low idle power and proper GPU initialization.

- Host always regains control after VM shutdown.

- Future driver updates can be applied safely with temporary unbind/bind.

I think this approach is pretty elegant compared to putting the GPU in another low-power VM. The Linux driver from NVIDIA is kind of frustrating, since it won’t allow the GPU to enter the P8 state for power saving, but fortunately the setup above is a small workaround for this problem.