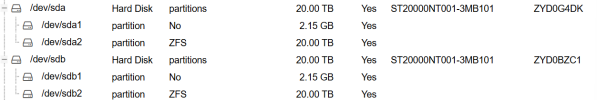

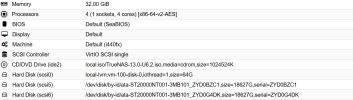

I am experiencing issues with a ZFS pool (named "DISK") on a Proxmox VE server that runs TrueNAS as a VM. The pool consists of two 20 TB disks in a mirrored configuration (RAID 1). The main problem is that the pool reports data errors and shows permanent data loss for certain files. I have run `zpool scrub` several times; each scrub reports no repaired errors, yet the data errors persist. Attempts to import the pool with various flags (`-f`, `-o readonly=on`) often result in I/O errors and segmentation faults.

SMART tests on the disks show no critical errors, yet TrueNAS still fails to access specific blocks, which points to an underlying read problem. In addition, `zdb` reports block errors and leaked space.

I am seeking assistance with:

- Understanding the root causes of these data errors.

- Recommendations on potential repair options to recover data, given that I do not have a separate backup for this data.