Hi guys,

I have a really weird problem at the moment.

I have a 3-node Proxmox cluster and 1 separate node, all managed by PDM (Proxmox Datacenter Manager).

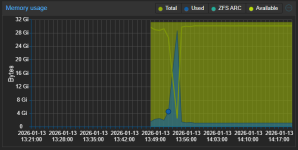

I am trying to migrate a TrueNAS VM from one of my cluster nodes to the separate node, and while the disk is being copied, the system invokes the OOM killer, which prevents the migration from completing.
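The post does not yet say which process the OOM killer picks or on which machine (source node, target node, or the PDM host). One way to pin that down is to check the kernel log on each of them, e.g. with `journalctl -k`. A minimal sketch that pulls the "Killed process" summary lines out of such a saved log, assuming the usual kernel message format (`Out of memory: Killed process <pid> (<name>) ... anon-rss:<n>kB ...`, or the `Memory cgroup out of memory: ...` variant); the names `find_oom_kills` and `OomKill` are just illustrative.

```python
import re
from dataclasses import dataclass
from typing import Iterable, List, Optional

# Kernel OOM summary lines look roughly like:
#   "Out of memory: Killed process <pid> (<name>) total-vm:...kB, anon-rss:...kB, ..."
# or, when a cgroup limit was hit:
#   "Memory cgroup out of memory: Killed process <pid> (<name>) ..."
OOM_RE = re.compile(
    r"[Oo]ut of memory: Killed process (?P<pid>\d+) \((?P<name>[^)]*)\)"
    r"(?:.*?anon-rss:(?P<anon_kb>\d+)kB)?"
)


@dataclass
class OomKill:
    pid: int
    name: str
    anon_rss_kb: Optional[int]  # None if the line carries no anon-rss field


def find_oom_kills(lines: Iterable[str]) -> List[OomKill]:
    """Return one OomKill entry per 'Killed process' line in a kernel log."""
    kills = []
    for line in lines:
        m = OOM_RE.search(line)
        if m is None:
            continue  # not an OOM summary line
        anon = m.group("anon_kb")
        kills.append(OomKill(
            pid=int(m.group("pid")),
            name=m.group("name"),
            anon_rss_kb=int(anon) if anon is not None else None,
        ))
    return kills
```

For example, after `journalctl -k > kernel.log` on a node, `find_oom_kills(open("kernel.log"))` lists the victims; the `name` field should show whether it was the VM's QEMU process, something involved in the disk transfer, or an unrelated process.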

Here are a few bits of information.

VM to be migrated:

```
root@pve2:~# cat /etc/pve/qemu-server/106.conf
agent: 1
boot: order=scsi0;ide2;net0
cores: 4
cpu: host
ide2: none,media=cdrom
machine: q35
memory: 8192
meta: creation-qemu=10.1.2,ctime=1764169689
name: truenas
net0: virtio=BC:24:11:C8:ED:74,bridge=vmbr0
numa: 0
ostype: l26
scsi0: local-lvm:vm-106-disk-1,backup=0,cache=writeback,discard=on,iothread=1,replicate=0,size=32G,ssd=1
scsihw: virtio-scsi-single
smbios1: uuid=9cd41c9e-780b-481d-8bb1-dbaef3070a0e
sockets: 1
startup: order=1,up=60
vmgenid: 16165d01-8b6e-46d4-b1c4-d1ce555a0b2d
```

Nothing special here.
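The `scsi0` line packs the volume ID and all of its options into one comma-separated string. A minimal sketch for reading such a config and turning the disk size into bytes; this is a simplified stand-in, not Proxmox's own parser, the helper names are made up for illustration, and it assumes Proxmox's 1024-based size suffixes (K/M/G/T).

```python
import re
from typing import Dict, Tuple

# Binary multipliers; drive sizes such as "size=32G" are assumed to be 1024-based.
_UNITS = {"": 1, "K": 1024, "M": 1024**2, "G": 1024**3, "T": 1024**4}


def parse_vm_config(text: str) -> Dict[str, str]:
    """Parse the top section of a /etc/pve/qemu-server/<vmid>.conf file.

    Simplified: skips '#' comment lines and stops at the first
    snapshot/pending section header ("[...]").
    """
    conf: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        if line.startswith("["):
            break
        key, _, value = line.partition(":")  # split at the first colon only
        conf[key.strip()] = value.strip()
    return conf


def parse_drive(value: str) -> Tuple[str, Dict[str, str]]:
    """Split 'storage:volume,opt=val,...' into the volume ID and an option dict."""
    volume, *opts = value.split(",")
    options = {}
    for opt in opts:
        k, _, v = opt.partition("=")
        options[k] = v
    return volume, options


def size_to_bytes(size: str) -> int:
    """'32G' -> 34359738368; a bare number is treated as bytes (assumption)."""
    m = re.fullmatch(r"(\d+)([KMGT]?)", size)
    if m is None:
        raise ValueError(f"unrecognised size: {size!r}")
    number, unit = m.groups()
    return int(number) * _UNITS[unit]
```

With the config above, `size_to_bytes(parse_drive(parse_vm_config(text)["scsi0"])[1]["size"])` comes out at 34359738368, which is exactly the byte count dd reports at the end of the copy in the task log (524288 records × 65536 bytes). So the source side appears to push the whole 32 GiB volume through the tunnel before the error shows up.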

`pveversion -v` of the source node (pve2):

```
root@pve2:~# pveversion -v
proxmox-ve: 9.1.0 (running kernel: 6.17.4-2-pve)
pve-manager: 9.1.4 (running version: 9.1.4/5ac30304265fbd8e)
proxmox-kernel-helper: 9.0.4
proxmox-kernel-6.17.4-2-pve-signed: 6.17.4-2
proxmox-kernel-6.17: 6.17.4-2
proxmox-kernel-6.17.4-1-pve-signed: 6.17.4-1
proxmox-kernel-6.17.2-2-pve-signed: 6.17.2-2
proxmox-kernel-6.17.2-1-pve-signed: 6.17.2-1
proxmox-kernel-6.14.11-5-pve-signed: 6.14.11-5
proxmox-kernel-6.14: 6.14.11-5
proxmox-kernel-6.14.11-4-pve-signed: 6.14.11-4
proxmox-kernel-6.14.8-2-pve-signed: 6.14.8-2
amd64-microcode: 3.20250311.1
ceph-fuse: 19.2.3-pve1
corosync: 3.1.9-pve2
criu: 4.1.1-1
frr-pythontools: 10.4.1-1+pve1
ifupdown2: 3.3.0-1+pmx11
ksm-control-daemon: 1.5-1
libjs-extjs: 7.0.0-5
libproxmox-acme-perl: 1.7.0
libproxmox-backup-qemu0: 2.0.1
libproxmox-rs-perl: 0.4.1
libpve-access-control: 9.0.5
libpve-apiclient-perl: 3.4.2
libpve-cluster-api-perl: 9.0.7
libpve-cluster-perl: 9.0.7
libpve-common-perl: 9.1.4
libpve-guest-common-perl: 6.0.2
libpve-http-server-perl: 6.0.5
libpve-network-perl: 1.2.4
libpve-rs-perl: 0.11.4
libpve-storage-perl: 9.1.0
libspice-server1: 0.15.2-1+b1
lvm2: 2.03.31-2+pmx1
lxc-pve: 6.0.5-3
lxcfs: 6.0.4-pve1
novnc-pve: 1.6.0-3
proxmox-backup-client: 4.1.1-1
proxmox-backup-file-restore: 4.1.1-1
proxmox-backup-restore-image: 1.0.0
proxmox-firewall: 1.2.1
proxmox-kernel-helper: 9.0.4
proxmox-mail-forward: 1.0.2
proxmox-mini-journalreader: 1.6
proxmox-offline-mirror-helper: 0.7.3
proxmox-widget-toolkit: 5.1.5
pve-cluster: 9.0.7
pve-container: 6.0.18
pve-docs: 9.1.2
pve-edk2-firmware: 4.2025.05-2
pve-esxi-import-tools: 1.0.1
pve-firewall: 6.0.4
pve-firmware: 3.17-2
pve-ha-manager: 5.1.0
pve-i18n: 3.6.6
pve-qemu-kvm: 10.1.2-5
pve-xtermjs: 5.5.0-3
qemu-server: 9.1.3
smartmontools: 7.4-pve1
spiceterm: 3.4.1
swtpm: 0.8.0+pve3
vncterm: 1.9.1
zfsutils-linux: 2.3.4-pve1
```

`pveversion -v` of the target node (rhodan):

```
root@rhodan:~# pveversion -v
proxmox-ve: 9.1.0 (running kernel: 6.17.4-2-pve)
pve-manager: 9.1.4 (running version: 9.1.4/5ac30304265fbd8e)
proxmox-kernel-helper: 9.0.4
proxmox-kernel-6.17.4-2-pve-signed: 6.17.4-2
proxmox-kernel-6.17: 6.17.4-2
proxmox-kernel-6.17.2-1-pve-signed: 6.17.2-1
ceph-fuse: 19.2.3-pve2
corosync: 3.1.9-pve2
criu: 4.1.1-1
frr-pythontools: 10.4.1-1+pve1
ifupdown2: 3.3.0-1+pmx11
intel-microcode: 3.20251111.1~deb13u1
ksm-control-daemon: 1.5-1
libjs-extjs: 7.0.0-5
libproxmox-acme-perl: 1.7.0
libproxmox-backup-qemu0: 2.0.1
libproxmox-rs-perl: 0.4.1
libpve-access-control: 9.0.5
libpve-apiclient-perl: 3.4.2
libpve-cluster-api-perl: 9.0.7
libpve-cluster-perl: 9.0.7
libpve-common-perl: 9.1.4
libpve-guest-common-perl: 6.0.2
libpve-http-server-perl: 6.0.5
libpve-network-perl: 1.2.4
libpve-rs-perl: 0.11.4
libpve-storage-perl: 9.1.0
libspice-server1: 0.15.2-1+b1
lvm2: 2.03.31-2+pmx1
lxc-pve: 6.0.5-3
lxcfs: 6.0.4-pve1
novnc-pve: 1.6.0-3
proxmox-backup-client: 4.1.1-1
proxmox-backup-file-restore: 4.1.1-1
proxmox-backup-restore-image: 1.0.0
proxmox-firewall: 1.2.1
proxmox-kernel-helper: 9.0.4
proxmox-mail-forward: 1.0.2
proxmox-mini-journalreader: 1.6
proxmox-offline-mirror-helper: 0.7.3
proxmox-widget-toolkit: 5.1.5
pve-cluster: 9.0.7
pve-container: 6.0.18
pve-docs: 9.1.2
pve-edk2-firmware: 4.2025.05-2
pve-esxi-import-tools: 1.0.1
pve-firewall: 6.0.4
pve-firmware: 3.17-2
pve-ha-manager: 5.1.0
pve-i18n: 3.6.6
pve-qemu-kvm: 10.1.2-5
pve-xtermjs: 5.5.0-3
qemu-server: 9.1.3
smartmontools: 7.4-pve1
spiceterm: 3.4.1
swtpm: 0.8.0+pve3
vncterm: 1.9.1
zfsutils-linux: 2.3.4-pve1
```
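Apart from the installed kernel packages, the two lists appear to differ only in `ceph-fuse` (19.2.3-pve1 on pve2, 19.2.3-pve2 on rhodan) and the microcode package (AMD on the source, Intel on the target); both nodes run kernel 6.17.4-2-pve, and `pve-manager`, `qemu-server`, `pve-qemu-kvm` and `libpve-storage-perl` match. A minimal sketch for checking that mechanically from two saved `pveversion -v` outputs; it keeps only the first token after `name: `, and the function names are illustrative.

```python
from typing import Dict, Optional, Tuple


def parse_pveversion(text: str) -> Dict[str, str]:
    """Map package name -> version from `pveversion -v` output.

    Lines look like 'pve-manager: 9.1.4 (running version: ...)'; only the
    first whitespace-separated token after 'name: ' is kept. Shell prompt
    lines are skipped.
    """
    versions: Dict[str, str] = {}
    for line in text.splitlines():
        if line.startswith("root@") or ": " not in line:
            continue
        name, _, rest = line.partition(": ")
        parts = rest.split()
        if parts:
            versions[name.strip()] = parts[0]
    return versions


def diff_versions(a: Dict[str, str],
                  b: Dict[str, str]) -> Dict[str, Tuple[Optional[str], Optional[str]]]:
    """Return {package: (version_in_a, version_in_b)} for every mismatch.

    A package present on only one side shows up with None on the other.
    """
    diff = {}
    for pkg in sorted(a.keys() | b.keys()):
        va, vb = a.get(pkg), b.get(pkg)
        if va != vb:
            diff[pkg] = (va, vb)
    return diff
```

Running `diff_versions(parse_pveversion(src_text), parse_pveversion(dst_text))` on the two outputs above should leave only the kernel, microcode and `ceph-fuse` entries.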

Package versions of the PDM:

```
proxmox-datacenter-manager-meta: 1.0.0 (running kernel: 6.17.4-2-pve)
proxmox-datacenter-manager: 1.0.2 (running version: 1.0.2)
proxmox-kernel-helper: 9.0.4
proxmox-kernel-6.17.4-2-pve-signed: 6.17.4-2
proxmox-kernel-6.17: 6.17.4-2
proxmox-kernel-6.17.2-2-pve-signed: 6.17.2-2
proxmox-kernel-6.17.2-1-pve-signed: 6.17.2-1
proxmox-kernel-6.17.1-1-pve-signed: 6.17.1-1
proxmox-kernel-6.14.11-5-pve-signed: 6.14.11-5
proxmox-kernel-6.14: 6.14.11-5
proxmox-kernel-6.14.11-4-pve-signed: 6.14.11-4
proxmox-kernel-6.14.11-3-pve-signed: 6.14.11-3
proxmox-kernel-6.14.11-2-pve-signed: 6.14.11-2
proxmox-kernel-6.14.11-1-pve-signed: 6.14.11-1
proxmox-kernel-6.11.11-2-pve-signed: 6.11.11-2
proxmox-kernel-6.11: 6.11.11-2
proxmox-kernel-6.11.11-1-pve-signed: 6.11.11-1
proxmox-kernel-6.8: 6.8.12-15
proxmox-kernel-6.8.12-15-pve-signed: 6.8.12-15
proxmox-kernel-6.8.12-14-pve-signed: 6.8.12-14
proxmox-kernel-6.8.12-9-pve-signed: 6.8.12-9
proxmox-kernel-6.8.12-8-pve-signed: 6.8.12-8
proxmox-kernel-6.8.12-5-pve-signed: 6.8.12-5
ifupdown2: 3.3.0-1+pmx11
proxmox-mail-forward: 1.0.2
proxmox-mini-journalreader: 1.6
proxmox-offline-mirror-helper: 0.7.3
pve-xtermjs: 5.5.0-3
zfsutils-linux: 2.3.4-pve1
```

Task log of the migration on PDM:

```
Task Viewer: VM 106 Migrate
2026-01-13 13:51:25 remote: started tunnel worker 'UPID:rhodan:00000846:00001404:69663FCD:qmtunnel:106:root@pam!pdm-admin-pdm:'
tunnel: -> sending command "version" to remote
tunnel: <- got reply
2026-01-13 13:51:26 local WS tunnel version: 2
2026-01-13 13:51:26 remote WS tunnel version: 2
2026-01-13 13:51:26 minimum required WS tunnel version: 2
websocket tunnel started
2026-01-13 13:51:26 starting migration of VM 106 to node 'rhodan' (rhodan.catacombs.lan)
tunnel: -> sending command "bwlimit" to remote
tunnel: <- got reply
2026-01-13 13:51:26 found local disk 'local-lvm:vm-106-disk-1' (attached)
2026-01-13 13:51:26 copying local disk images
tunnel: -> sending command "disk-import" to remote
tunnel: <- got reply
tunnel: accepted new connection on '/run/pve/106.storage'
tunnel: requesting WS ticket via tunnel
tunnel: established new WS for forwarding '/run/pve/106.storage'
336592896 bytes (337 MB, 321 MiB) copied, 1 s, 337 MB/s
729350144 bytes (729 MB, 696 MiB) copied, 2 s, 365 MB/s
1150222336 bytes (1.2 GB, 1.1 GiB) copied, 3 s, 383 MB/s
1564999680 bytes (1.6 GB, 1.5 GiB) copied, 4 s, 391 MB/s
1980235776 bytes (2.0 GB, 1.8 GiB) copied, 5 s, 396 MB/s
2389770240 bytes (2.4 GB, 2.2 GiB) copied, 6 s, 398 MB/s
2785935360 bytes (2.8 GB, 2.6 GiB) copied, 7 s, 398 MB/s
3143172096 bytes (3.1 GB, 2.9 GiB) copied, 8 s, 393 MB/s
3531866112 bytes (3.5 GB, 3.3 GiB) copied, 9 s, 392 MB/s
3923771392 bytes (3.9 GB, 3.7 GiB) copied, 10 s, 392 MB/s
4314365952 bytes (4.3 GB, 4.0 GiB) copied, 11 s, 392 MB/s
4819714048 bytes (4.8 GB, 4.5 GiB) copied, 12 s, 402 MB/s
5257822208 bytes (5.3 GB, 4.9 GiB) copied, 13 s, 404 MB/s
5668012032 bytes (5.7 GB, 5.3 GiB) copied, 14 s, 405 MB/s
6079971328 bytes (6.1 GB, 5.7 GiB) copied, 15 s, 405 MB/s
6490619904 bytes (6.5 GB, 6.0 GiB) copied, 16 s, 406 MB/s
6904807424 bytes (6.9 GB, 6.4 GiB) copied, 17 s, 406 MB/s
7313555456 bytes (7.3 GB, 6.8 GiB) copied, 18 s, 406 MB/s
7731544064 bytes (7.7 GB, 7.2 GiB) copied, 19 s, 407 MB/s
8148877312 bytes (8.1 GB, 7.6 GiB) copied, 20 s, 407 MB/s
8566603776 bytes (8.6 GB, 8.0 GiB) copied, 21 s, 408 MB/s
8981774336 bytes (9.0 GB, 8.4 GiB) copied, 22 s, 408 MB/s
9319743488 bytes (9.3 GB, 8.7 GiB) copied, 23 s, 405 MB/s
9741729792 bytes (9.7 GB, 9.1 GiB) copied, 24 s, 406 MB/s
10146349056 bytes (10 GB, 9.4 GiB) copied, 25 s, 406 MB/s
10553065472 bytes (11 GB, 9.8 GiB) copied, 26 s, 406 MB/s
10915610624 bytes (11 GB, 10 GiB) copied, 27 s, 404 MB/s
11219435520 bytes (11 GB, 10 GiB) copied, 28 s, 401 MB/s
11335958528 bytes (11 GB, 11 GiB) copied, 29 s, 389 MB/s
11774197760 bytes (12 GB, 11 GiB) copied, 30 s, 392 MB/s
12296847360 bytes (12 GB, 11 GiB) copied, 31 s, 397 MB/s
12819496960 bytes (13 GB, 12 GiB) copied, 32 s, 401 MB/s
13135577088 bytes (13 GB, 12 GiB) copied, 33 s, 392 MB/s
13231390720 bytes (13 GB, 12 GiB) copied, 34 s, 389 MB/s
13297057792 bytes (13 GB, 12 GiB) copied, 35 s, 380 MB/s
13438091264 bytes (13 GB, 13 GiB) copied, 36 s, 373 MB/s
13800833024 bytes (14 GB, 13 GiB) copied, 37 s, 373 MB/s
14186643456 bytes (14 GB, 13 GiB) copied, 38 s, 373 MB/s
14585626624 bytes (15 GB, 14 GiB) copied, 39 s, 374 MB/s
14886567936 bytes (15 GB, 14 GiB) copied, 41 s, 366 MB/s
15055519744 bytes (15 GB, 14 GiB) copied, 41 s, 367 MB/s
15573516288 bytes (16 GB, 15 GiB) copied, 42 s, 371 MB/s
16078667776 bytes (16 GB, 15 GiB) copied, 43 s, 374 MB/s
16486629376 bytes (16 GB, 15 GiB) copied, 45 s, 368 MB/s
16586113024 bytes (17 GB, 15 GiB) copied, 45 s, 369 MB/s
16991846400 bytes (17 GB, 16 GiB) copied, 46 s, 369 MB/s
17401446400 bytes (17 GB, 16 GiB) copied, 47 s, 370 MB/s
17804623872 bytes (18 GB, 17 GiB) copied, 48 s, 371 MB/s
18218680320 bytes (18 GB, 17 GiB) copied, 49 s, 372 MB/s
18630836224 bytes (19 GB, 17 GiB) copied, 50 s, 373 MB/s
19047186432 bytes (19 GB, 18 GiB) copied, 51 s, 373 MB/s
19460063232 bytes (19 GB, 18 GiB) copied, 52 s, 374 MB/s
19877396480 bytes (20 GB, 19 GiB) copied, 53 s, 375 MB/s
20253900800 bytes (20 GB, 19 GiB) copied, 54 s, 375 MB/s
20645216256 bytes (21 GB, 19 GiB) copied, 55 s, 375 MB/s
20972765184 bytes (21 GB, 20 GiB) copied, 56 s, 375 MB/s
21248540672 bytes (21 GB, 20 GiB) copied, 57 s, 373 MB/s
21412249600 bytes (21 GB, 20 GiB) copied, 59 s, 361 MB/s
21412315136 bytes (21 GB, 20 GiB) copied, 59 s, 361 MB/s
21742157824 bytes (22 GB, 20 GiB) copied, 60 s, 362 MB/s
22203662336 bytes (22 GB, 21 GiB) copied, 61 s, 364 MB/s
22657171456 bytes (23 GB, 21 GiB) copied, 62 s, 365 MB/s
22790799360 bytes (23 GB, 21 GiB) copied, 64 s, 357 MB/s
22816817152 bytes (23 GB, 21 GiB) copied, 64 s, 357 MB/s
23190110208 bytes (23 GB, 22 GiB) copied, 65 s, 357 MB/s
23573430272 bytes (24 GB, 22 GiB) copied, 66 s, 357 MB/s
23957798912 bytes (24 GB, 22 GiB) copied, 67 s, 358 MB/s
24332140544 bytes (24 GB, 23 GiB) copied, 68 s, 358 MB/s
24699142144 bytes (25 GB, 23 GiB) copied, 69 s, 358 MB/s
25061163008 bytes (25 GB, 23 GiB) copied, 70 s, 358 MB/s
25397755904 bytes (25 GB, 24 GiB) copied, 71 s, 358 MB/s
25761218560 bytes (26 GB, 24 GiB) copied, 72 s, 358 MB/s
26140999680 bytes (26 GB, 24 GiB) copied, 73 s, 358 MB/s
26210729984 bytes (26 GB, 24 GiB) copied, 75 s, 347 MB/s
26210795520 bytes (26 GB, 24 GiB) copied, 75 s, 347 MB/s
26443972608 bytes (26 GB, 25 GiB) copied, 76 s, 348 MB/s
26787971072 bytes (27 GB, 25 GiB) copied, 77 s, 348 MB/s
27262910464 bytes (27 GB, 25 GiB) copied, 78 s, 350 MB/s
27666743296 bytes (28 GB, 26 GiB) copied, 79 s, 350 MB/s
28043313152 bytes (28 GB, 26 GiB) copied, 80 s, 351 MB/s
28406775808 bytes (28 GB, 26 GiB) copied, 81 s, 351 MB/s
28719644672 bytes (29 GB, 27 GiB) copied, 82 s, 350 MB/s
29034151936 bytes (29 GB, 27 GiB) copied, 83 s, 350 MB/s
29366681600 bytes (29 GB, 27 GiB) copied, 84 s, 350 MB/s
29694427136 bytes (30 GB, 28 GiB) copied, 85 s, 349 MB/s
30078730240 bytes (30 GB, 28 GiB) copied, 86 s, 350 MB/s
30458052608 bytes (30 GB, 28 GiB) copied, 87 s, 350 MB/s
30828527616 bytes (31 GB, 29 GiB) copied, 88 s, 350 MB/s
31180128256 bytes (31 GB, 29 GiB) copied, 89 s, 350 MB/s
31528321024 bytes (32 GB, 29 GiB) copied, 90 s, 350 MB/s
31676366848 bytes (32 GB, 30 GiB) copied, 94 s, 337 MB/s
31676432384 bytes (32 GB, 30 GiB) copied, 94 s, 337 MB/s
31676497920 bytes (32 GB, 30 GiB) copied, 94 s, 337 MB/s
31681282048 bytes (32 GB, 30 GiB) copied, 94 s, 337 MB/s
32163430400 bytes (32 GB, 30 GiB) copied, 95 s, 339 MB/s
32646168576 bytes (33 GB, 30 GiB) copied, 96 s, 340 MB/s
33130217472 bytes (33 GB, 31 GiB) copied, 97 s, 342 MB/s
33466548224 bytes (33 GB, 31 GiB) copied, 98 s, 341 MB/s
33785708544 bytes (34 GB, 31 GiB) copied, 99 s, 341 MB/s
34131279872 bytes (34 GB, 32 GiB) copied, 100 s, 341 MB/s
524288+0 records in
524288+0 records out
34359738368 bytes (34 GB, 32 GiB) copied, 101.02 s, 340 MB/s
tunnel: -> sending command "query-disk-import" to remote
tunnel: done handling forwarded connection from '/run/pve/106.storage'
2026-01-13 13:53:18 ERROR: no reply to command '{"cmd":"query-disk-import"}': reading from tunnel failed: got timeout
2026-01-13 13:53:18 aborting phase 1 - cleanup resources
tunnel: Tunnel to https://rhodan.catacombs.lan:8006/api2/json/nodes/rhodan/qemu/106/mtunnelwebsocket?ticket=PVETUNNEL%3A69663FCD%3A%3AupRea38WOp1dT9SdrQ1pFzZGyHawYolR%2FZeaLC2%2FZUCe5gYuoCz05VWeJHFWv175kQ1nDeng9R2l%2F1vj6idDXInT6zOYou%2FYjH9DmspURsqlV%2Bhzpb4K7nyQ%2FhTNJFFq%2Fe94hV59e%2Bg3zDicEvDNlrYMWYH8V3HgdBDTYzgj8m2PxURvcVSXX1CrzsRFuAoAXiPZwQFdrEwYzS4FzbokSugShzdvpLn0jx6NZoA%2FVE6zYUNq4AfjWSI0rPr6njMKKndnnvPZw9Zhht3hMcBRQ9NSBVRoV2afrYSVnMVwe7ggS7VSRNJzuT26k7LMJTzw0x1Bv09GxmR2YMHMgwUy8Q%3D%3D&socket=%2Frun%2Fqemu-server%2F106.mtunnel failed - WS closed unexpectedly
tunnel: Error: channel closed
CMD websocket tunnel died: command 'proxmox-websocket-tunnel' failed: exit code 1
2026-01-13 13:54:03 ERROR: no reply to command '{"cmd":"quit","cleanup":1}': reading from tunnel failed: got timeout
2026-01-13 13:54:03 ERROR: migration aborted (duration 00:02:38): no reply to command '{"cmd":"query-disk-import"}': reading from tunnel failed: got timeout
TASK ERROR: migration aborted
```
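The copy mostly runs at 340 to 400 MB/s but slows down several times; for example, between 90 s and 94 s only 148045824 bytes (about 148 MB) were copied. Whether those dips line up with the OOM event cannot be told from the task log alone. A small sketch to pull the slow intervals out of a saved task log, assuming the dd-style progress line format shown above; the 100 MB/s threshold is an arbitrary choice and the function names are illustrative.

```python
import re
from typing import Iterable, Iterator, List, Tuple

# dd-style progress lines, e.g.
#   "31528321024 bytes (32 GB, 29 GiB) copied, 90 s, 350 MB/s"
DD_RE = re.compile(r"^(\d+) bytes \(.*\) copied, ([\d.]+) s,")


def parse_dd_progress(lines: Iterable[str]) -> List[Tuple[float, int]]:
    """Return (elapsed_seconds, bytes_copied) per progress line; other lines are ignored."""
    points = []
    for line in lines:
        m = DD_RE.match(line.strip())
        if m:
            points.append((float(m.group(2)), int(m.group(1))))
    return points


def find_slow_intervals(points: List[Tuple[float, int]],
                        min_rate: float = 100e6) -> Iterator[Tuple[float, float, float]]:
    """Yield (t_start, t_end, bytes_per_s) for intervals slower than min_rate.

    Consecutive lines stamped with the same second (dt == 0) are skipped,
    since no rate can be computed for them.
    """
    for (t0, b0), (t1, b1) in zip(points, points[1:]):
        dt = t1 - t0
        if dt <= 0:
            continue
        rate = (b1 - b0) / dt
        if rate < min_rate:
            yield t0, t1, rate
```

Fed with the progress lines from the log above, this should flag, among others, the 90 s to 94 s interval. Adding the elapsed seconds to the rough copy start (13:51:26) gives wall-clock times that could then be compared with the timestamps of any OOM messages in the journal of each node.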
