Hi, I'm new to the forum but a long-term Proxmox user, and I really need help: I have no idea what else to try to make this work.

I'll try to make it short: I followed the official documentation (https://pve.proxmox.com/wiki/PCI_Passthrough) and did all steps necessary:

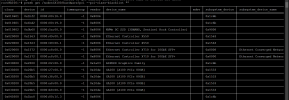

As you can see below, the result of

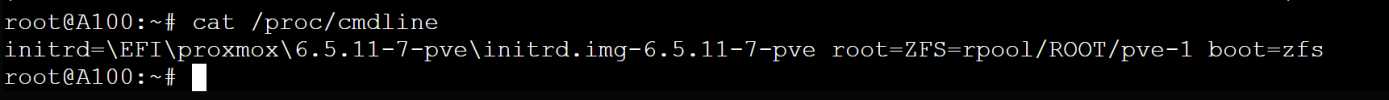

However, there is an issue, that I think might be the root of my problems: When I run

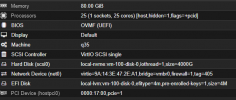

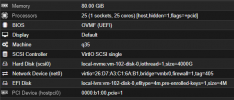

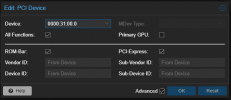

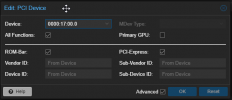

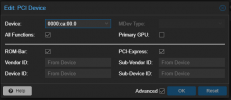

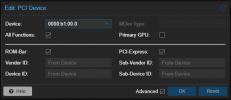

I configured each GPU to it's own VM, and again configured it and it's shwon on the documentation I cited above (you can check the configuration on the spoiler below:

Ok, now that I showed all the configuration, I can tell about the VM installation: I installed CUDA-Toolkit version 12.1 and NVidia Driver version 530.30.02, but I have also tried everything witj CUDA 11.8 and 11.7 and drivers 525 and 520 for example.

In all machines I get the GPU recognized on

However, this is when the problems beggin:

I use

Also,

And of course

Also I tried installing Pytorch and Tensorflow. The installation goes without a problem. For instance, I used Pytorch 2.0 on conda, following the directions at the official website (https://pytorch.org/get-started/locally/). However, when I try to test the installation, I get the following error as well (again, this happens only on the other three VMs, the first one works without a problem):

Now, this is the whole story and I'm at a loss as to why it works on the first VM and not on the other three. And this is when I ask for your help.

Again, I suspect that it has something to do with the way the GPUs are from the DGX configuration and having the same vendor_id, but if this is the problem, I also don't know how to overcome it and found nothing online about this problem on a similar hardware configuration.

If anyone can help me fix this or if I somehow solve this problem myself, I will create a blogpost and documentation about it.

Please, I need some help.

I'll try to keep it short: I followed the official documentation (https://pve.proxmox.com/wiki/PCI_Passthrough) and completed all the necessary steps:

- https://pve.proxmox.com/wiki/PCI_Passthrough#Enable_the_IOMMU

- https://pve.proxmox.com/wiki/PCI_Passthrough#Required_Modules

- https://pve.proxmox.com/wiki/PCI_Passthrough#IOMMU_Interrupt_Remapping

- https://pve.proxmox.com/wiki/PCI_Passthrough#Verify_IOMMU_Isolation

- etc.
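The "Verify IOMMU Isolation" step comes down to checking which PCI devices share an IOMMU group, since a group is the smallest unit that can be handed to a VM. A minimal Python sketch, assuming the standard sysfs layout `/sys/kernel/iommu_groups/<group>/devices/<PCI address>`; the function name `iommu_groups` is hypothetical:

```python
from pathlib import Path


def iommu_groups(root="/sys/kernel/iommu_groups"):
    """Map each IOMMU group number to the sorted PCI addresses it contains.

    Assumes the sysfs layout <root>/<group>/devices/<pci-address>.
    Returns an empty dict if <root> does not exist (e.g. IOMMU disabled).
    """
    groups = {}
    base = Path(root)
    if not base.is_dir():
        return groups
    for group_dir in base.iterdir():
        devices_dir = group_dir / "devices"
        if group_dir.name.isdigit() and devices_dir.is_dir():
            groups[int(group_dir.name)] = sorted(d.name for d in devices_dir.iterdir())
    return groups


if __name__ == "__main__":
    for group, devices in sorted(iommu_groups().items()):
        print(f"IOMMU group {group}: {', '.join(devices)}")
```

Each GPU address ideally shows up in a group of its own (or only alongside its own functions).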

My hardware:

- 112 x Intel(R) Xeon(R) Gold 6330 CPU @ 2.00GHz (2 sockets)

- 400GB of RAM

- 4 x A100 SXM4 80GB (an HGX board with 4 GPUs connected via NVLink; docs: https://docs.nvidia.com/datacenter/tesla/hgx-software-guide/index.html#abstract)
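The four GPUs can also be cross-checked on the host through sysfs, which additionally shows the kernel driver each one is currently bound to (typically `vfio-pci` for a GPU set up for passthrough) and its NUMA node. A minimal sketch, assuming the standard `/sys/bus/pci/devices/<address>/{vendor,device,numa_node,driver}` layout; `0x10de` is NVIDIA's PCI vendor ID, and the helper names are hypothetical:

```python
from pathlib import Path

NVIDIA_VENDOR_ID = "0x10de"  # PCI vendor ID for NVIDIA


def _read(path):
    """Return the stripped contents of a sysfs attribute, or None if missing."""
    try:
        return path.read_text().strip()
    except OSError:
        return None


def nvidia_pci_devices(root="/sys/bus/pci/devices"):
    """List NVIDIA PCI functions with device ID, NUMA node and bound driver."""
    result = []
    base = Path(root)
    if not base.is_dir():
        return result
    for dev in sorted(base.iterdir()):
        if _read(dev / "vendor") != NVIDIA_VENDOR_ID:
            continue
        # "driver" is a symlink to the bound driver; it is absent when unbound.
        driver_link = dev / "driver"
        driver = driver_link.resolve().name if driver_link.exists() else None
        result.append({
            "address": dev.name,
            "device": _read(dev / "device"),
            "numa_node": _read(dev / "numa_node"),
            "driver": driver,
        })
    return result


if __name__ == "__main__":
    for d in nvidia_pci_devices():
        print(d["address"], d["device"], "NUMA", d["numa_node"],
              "driver:", d["driver"] or "(none)")
```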

As you can see below, `lspci` on the host machine shows all 4 GPUs, each in its own PCI slot:

```bash
0000:17:00.0 3D controller: NVIDIA Corporation GA100 [A100 SXM4 80GB] (rev a1)
	Subsystem: NVIDIA Corporation Device 147f
	Physical Slot: 5
	Flags: fast devsel, IRQ 18, NUMA node 0
	Memory at d4000000 (32-bit, non-prefetchable) [size=16M]
	Memory at 24000000000 (64-bit, prefetchable) [size=128G]
	Memory at 27428000000 (64-bit, prefetchable) [size=32M]
	Capabilities: [60] Power Management version 3
	Capabilities: [68] Null
	Capabilities: [78] Express Endpoint, MSI 00
	Capabilities: [c8] MSI-X: Enable- Count=6 Masked-
	Capabilities: [100] Virtual Channel
	Capabilities: [250] Latency Tolerance Reporting
	Capabilities: [258] L1 PM Substates
	Capabilities: [128] Power Budgeting <?>
	Capabilities: [420] Advanced Error Reporting
	Capabilities: [600] Vendor Specific Information: ID=0001 Rev=1 Len=024 <?>
	Capabilities: [900] Secondary PCI Express
	Capabilities: [bb0] Physical Resizable BAR
	Capabilities: [bcc] Single Root I/O Virtualization (SR-IOV)
	Capabilities: [c14] Alternative Routing-ID Interpretation (ARI)
	Capabilities: [c1c] Physical Layer 16.0 GT/s <?>
	Capabilities: [d00] Lane Margining at the Receiver <?>
	Capabilities: [e00] Data Link Feature <?>
	Kernel modules: nvidiafb, nouveau

0000:31:00.0 3D controller: NVIDIA Corporation GA100 [A100 SXM4 80GB] (rev a1)
	Subsystem: NVIDIA Corporation Device 147f
	Physical Slot: 6
	Flags: fast devsel, IRQ 18, NUMA node 0
	Memory at d8000000 (32-bit, non-prefetchable) [size=16M]
	Memory at 28000000000 (64-bit, prefetchable) [size=128G]
	Memory at 2b428000000 (64-bit, prefetchable) [size=32M]
	Capabilities: [60] Power Management version 3
	Capabilities: [68] Null
	Capabilities: [78] Express Endpoint, MSI 00
	Capabilities: [c8] MSI-X: Enable- Count=6 Masked-
	Capabilities: [100] Virtual Channel
	Capabilities: [250] Latency Tolerance Reporting
	Capabilities: [258] L1 PM Substates
	Capabilities: [128] Power Budgeting <?>
	Capabilities: [420] Advanced Error Reporting
	Capabilities: [600] Vendor Specific Information: ID=0001 Rev=1 Len=024 <?>
	Capabilities: [900] Secondary PCI Express
	Capabilities: [bb0] Physical Resizable BAR
	Capabilities: [bcc] Single Root I/O Virtualization (SR-IOV)
	Capabilities: [c14] Alternative Routing-ID Interpretation (ARI)
	Capabilities: [c1c] Physical Layer 16.0 GT/s <?>
	Capabilities: [d00] Lane Margining at the Receiver <?>
	Capabilities: [e00] Data Link Feature <?>
	Kernel modules: nvidiafb, nouveau

0000:b1:00.0 3D controller: NVIDIA Corporation GA100 [A100 SXM4 80GB] (rev a1)
	Subsystem: NVIDIA Corporation Device 147f
	Physical Slot: 3
	Flags: fast devsel, IRQ 18, NUMA node 1
	Memory at ee000000 (32-bit, non-prefetchable) [size=16M]
	Memory at 3c000000000 (64-bit, prefetchable) [size=128G]
	Memory at 3f428000000 (64-bit, prefetchable) [size=32M]
	Capabilities: [60] Power Management version 3
	Capabilities: [68] Null
	Capabilities: [78] Express Endpoint, MSI 00
	Capabilities: [c8] MSI-X: Enable- Count=6 Masked-
	Capabilities: [100] Virtual Channel
	Capabilities: [250] Latency Tolerance Reporting
	Capabilities: [258] L1 PM Substates
	Capabilities: [128] Power Budgeting <?>
	Capabilities: [420] Advanced Error Reporting
	Capabilities: [600] Vendor Specific Information: ID=0001 Rev=1 Len=024 <?>
	Capabilities: [900] Secondary PCI Express
	Capabilities: [bb0] Physical Resizable BAR
	Capabilities: [bcc] Single Root I/O Virtualization (SR-IOV)
	Capabilities: [c14] Alternative Routing-ID Interpretation (ARI)
	Capabilities: [c1c] Physical Layer 16.0 GT/s <?>
	Capabilities: [d00] Lane Margining at the Receiver <?>
	Capabilities: [e00] Data Link Feature <?>
	Kernel modules: nvidiafb, nouveau

0000:ca:00.0 3D controller: NVIDIA Corporation GA100 [A100 SXM4 80GB] (rev a1)
	Subsystem: NVIDIA Corporation Device 147f
	Physical Slot: 4
	Flags: fast devsel, IRQ 18, NUMA node 1
	Memory at f2000000 (32-bit, non-prefetchable) [size=16M]
	Memory at 40000000000 (64-bit, prefetchable) [size=128G]
	Memory at 43428000000 (64-bit, prefetchable) [size=32M]
	Capabilities: [60] Power Management version 3
	Capabilities: [68] Null
	Capabilities: [78] Express Endpoint, MSI 00
	Capabilities: [c8] MSI-X: Enable- Count=6 Masked-
	Capabilities: [100] Virtual Channel
	Capabilities: [250] Latency Tolerance Reporting
	Capabilities: [258] L1 PM Substates
	Capabilities: [128] Power Budgeting <?>
	Capabilities: [420] Advanced Error Reporting
	Capabilities: [600] Vendor Specific Information: ID=0001 Rev=1 Len=024 <?>
	Capabilities: [900] Secondary PCI Express
	Capabilities: [bb0] Physical Resizable BAR
	Capabilities: [bcc] Single Root I/O Virtualization (SR-IOV)
	Capabilities: [c14] Alternative Routing-ID Interpretation (ARI)
	Capabilities: [c1c] Physical Layer 16.0 GT/s <?>
	Capabilities: [d00] Lane Margining at the Receiver <?>
	Capabilities: [e00] Data Link Feature <?>
	Kernel modules: nvidiafb, nouveau
```

However, there is one thing that I think might be the root of my problems. When I run `lspci -n -s ID` to get the vendor:device ID and put it in `/etc/modprobe.d/vfio.conf`, as shown in the documentation (https://pve.proxmox.com/wiki/PCI_Passthrough), I get the same ID for all the GPUs:

```bash
0000:17:00.0 0302: 10de:20b2 (rev a1)
0000:b1:00.0 0302: 10de:20b2 (rev a1)
0000:31:00.0 0302: 10de:20b2 (rev a1)
0000:ca:00.0 0302: 10de:20b2 (rev a1)
```

I assigned each GPU to its own VM and again configured everything as shown in the documentation cited above (you can check the configuration in the spoiler below):

OK, now that I've shown all the configuration, I can describe the VM installation: I installed CUDA Toolkit 12.1 and NVIDIA driver 530.30.02, but I have also tried everything with CUDA 11.8 and 11.7 and drivers 525 and 520, for example.
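Since several driver/CUDA combinations were tried, it may help to record in a scriptable way exactly what each VM reports, so the working VM can be compared line by line with the failing ones. A minimal sketch, assuming `nvidia-smi` is on the `PATH`; it relies on the `--query-gpu` and `--format=csv,noheader,nounits` options, while the helper names are hypothetical:

```python
import csv
import io
import shutil
import subprocess

# Fields passed to --query-gpu; order determines the CSV column order.
FIELDS = ["name", "pci.bus_id", "driver_version", "memory.total"]


def parse_query_output(text):
    """Parse `nvidia-smi --query-gpu=... --format=csv,noheader,nounits` output."""
    rows = csv.reader(io.StringIO(text), skipinitialspace=True)
    return [dict(zip(FIELDS, row)) for row in rows if row]


def query_gpus():
    """Run nvidia-smi and return one dict per GPU, or [] if it is not installed."""
    if shutil.which("nvidia-smi") is None:
        return []
    out = subprocess.run(
        ["nvidia-smi", f"--query-gpu={','.join(FIELDS)}",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_query_output(out)


if __name__ == "__main__":
    for gpu in query_gpus():
        print(gpu)
```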

On all VMs, the GPU is recognized by `nvidia-smi`:

```bash
$ nvidia-smi
Mon May  8 23:18:50 2023
+---------------------------------------------------------------------------------------+
| NVIDIA-SMI 530.30.02              Driver Version: 530.30.02    CUDA Version: 12.1     |
|-----------------------------------------+----------------------+----------------------+
| GPU  Name                  Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf            Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|                                         |                      |               MIG M. |
|=========================================+======================+======================|
|   0  NVIDIA A100-SXM4-80GB           On | 00000000:01:00.0 Off |                    0 |
| N/A   33C    P0               73W / 500W|      0MiB / 81920MiB |      0%      Default |
|                                         |                      |             Disabled |
+-----------------------------------------+----------------------+----------------------+

+---------------------------------------------------------------------------------------+
| Processes:                                                                            |
|  GPU   GI   CI        PID   Type   Process name                            GPU Memory |
|        ID   ID                                                             Usage      |
|=======================================================================================|
|  No running processes found                                                           |
+---------------------------------------------------------------------------------------+
```

However, this is where the problems begin:

I use cuda-samples (https://github.com/NVIDIA/cuda-samples) to check my installation, and when I run `deviceQuery` on every VM except the first, I get the following error. The first VM I created works fine and I can use its GPU:

```bash
$ ./deviceQuery
./deviceQuery Starting...

 CUDA Device Query (Runtime API) version (CUDART static linking)

cudaGetDeviceCount returned 3
-> initialization error
Result = FAIL
```

`deviceQueryDrv` also returns a similar error:

```bash
$ ./deviceQueryDrv
./deviceQueryDrv Starting...

CUDA Device Query (Driver API) statically linked version
checkCudaErrors() Driver API error = 0003 "initialization error" from file <deviceQueryDrv.cpp>, line 54.
```

And, of course, `bandwidthTest` fails as well:

```bash
$ ./bandwidthTest
[CUDA Bandwidth Test] - Starting...
Running on...

cudaGetDeviceProperties returned 3
-> initialization error
CUDA error at bandwidthTest.cu:256 code=3(cudaErrorInitializationError) "cudaSetDevice(currentDevice)"
```

I also tried installing PyTorch and TensorFlow, and the installation goes through without a problem. For instance, I installed PyTorch 2.0 with conda, following the directions on the official website (https://pytorch.org/get-started/locally/). However, when I test the installation, the GPU is not detected either (again, this happens only on the other three VMs; the first one works without a problem):

```bash
~$ python
Python 3.10.9 (main, Mar  1 2023, 18:23:06) [GCC 11.2.0] on linux
Type "help", "copyright", "credits" or "license" for more information.
>>> import torch
>>> torch.cuda.is_available()
False
>>> available_gpus = [torch.cuda.device(i) for i in range(torch.cuda.device_count())]
>>> available_gpus
[]
>>> torch.cuda.device_count()
0
```

That's the whole story: it works on the first VM but not on the other three, and I'm at a loss as to why. This is where I need your help.
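All of the failures above report error code 3: `cudaErrorInitializationError` in the runtime API, and in the driver API code 3 is `CUDA_ERROR_NOT_INITIALIZED`, described as "initialization error". One way to take cuda-samples and the frameworks out of the picture is to call the driver's `cuInit` directly. A minimal sketch, assuming the driver library is installed as `libcuda.so.1` (its usual name on Linux); `cuInit` and `cuGetErrorName` are CUDA driver API functions, while the Python helper name is hypothetical:

```python
import ctypes


def cuda_driver_init(lib_name="libcuda.so.1"):
    """Call cuInit(0) from the CUDA driver library.

    Returns (code, name), e.g. (0, "CUDA_SUCCESS"), or None if the driver
    library cannot be loaded in this environment.
    """
    try:
        lib = ctypes.CDLL(lib_name)
    except OSError:
        return None
    # CUresult is a C enum, so it is returned as a plain int.
    lib.cuInit.argtypes = [ctypes.c_uint]
    lib.cuInit.restype = ctypes.c_int
    code = lib.cuInit(0)
    # cuGetErrorName(CUresult, const char **) fills in the symbolic name.
    name = ctypes.c_char_p()
    lib.cuGetErrorName.argtypes = [ctypes.c_int, ctypes.POINTER(ctypes.c_char_p)]
    lib.cuGetErrorName.restype = ctypes.c_int
    if lib.cuGetErrorName(code, ctypes.byref(name)) != 0 or name.value is None:
        return code, "unknown"
    return code, name.value.decode()


if __name__ == "__main__":
    result = cuda_driver_init()
    if result is None:
        print("libcuda.so.1 could not be loaded")
    else:
        print("cuInit(0) returned %d (%s)" % result)
```

Running it on the working VM and on one of the failing VMs shows whether the failure already happens at the lowest level of the driver API.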

Again, I suspect it has something to do with how the GPUs are set up on this NVLink-connected board and with all of them sharing the same vendor:device ID. But if that is the problem, I don't know how to overcome it, and I found nothing online about this problem on similar hardware.
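For context on the identical IDs: `lspci -n` prints `vendor:device` pairs (`10de` is NVIDIA, and `20b2` is the ID these A100s report), and the `vfio-pci` module's `ids=` option binds every device matching a listed pair, so four identical GPUs share one ID and a single entry covers all of them. A small sketch that derives the `vfio.conf` line from the `lspci -n` output shown earlier; the function names are hypothetical:

```python
import re

# Matches lines such as "0000:17:00.0 0302: 10de:20b2 (rev a1)".
LSPCI_N_LINE = re.compile(
    r"^(?P<addr>\S+)\s+(?P<cls>[0-9a-f]{4}):\s+"
    r"(?P<vendor>[0-9a-f]{4}):(?P<device>[0-9a-f]{4})"
)


def ids_by_address(lspci_n_output):
    """Map PCI address -> "vendor:device" for each parsable `lspci -n` line."""
    ids = {}
    for line in lspci_n_output.splitlines():
        m = LSPCI_N_LINE.match(line.strip())
        if m:
            ids[m["addr"]] = f"{m['vendor']}:{m['device']}"
    return ids


def vfio_options_line(lspci_n_output):
    """Build an `options vfio-pci ids=...` line listing each distinct ID once."""
    unique_ids = sorted(set(ids_by_address(lspci_n_output).values()))
    return "options vfio-pci ids=" + ",".join(unique_ids)


if __name__ == "__main__":
    sample = """\
0000:17:00.0 0302: 10de:20b2 (rev a1)
0000:b1:00.0 0302: 10de:20b2 (rev a1)
0000:31:00.0 0302: 10de:20b2 (rev a1)
0000:ca:00.0 0302: 10de:20b2 (rev a1)
"""
    print(len(ids_by_address(sample)), "devices")
    print(vfio_options_line(sample))
```

With the four lines above it prints `4 devices` followed by `options vfio-pci ids=10de:20b2`.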

If anyone can help me fix this, or if I somehow solve it myself, I will write a blog post and documentation about it.

Please, I need some help.