Hi,

Due to gaps ('holes') in the administration diagrams during backups, and some failed backups, I tried to reduce the incoming traffic.

I reduced it to Rate In: 256.00 MiB/s.

But ....

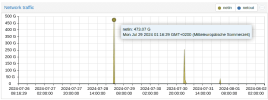

in the Network traffic diagram I can still see a peak of netin: 33.85G

Is the traffic control not working?

The PBS is installed on bare metal.

The CPU load peaks at 35%.

The server load peaks at 40%.

But all of these load peaks occur long before the dropout in the diagrams.

Best regards,

Bernd
