I’m not sure if this is the right place to post, but I wanted to share my experience after switching from Incus to Proxmox. I made the switch after reading about High Availability and live migrations, and I thought I’d try it out with my OPNsense setup.

At first, I saw a lot of advice saying not to use virtio drivers and to stick with PCI passthrough instead. However, on my consumer hardware, trying to pass through a single port of my dual-port Intel X540 NIC always grabbed both ports (most likely because both ports share an IOMMU group), which was a problem. After a lot of trial and error, I gave up on PCI passthrough and SR-IOV.

I decided to give virtio a shot even though plenty of people said it performs poorly. At first, it really did hurt my network performance: my 1 Gbps download and 50 Mbps upload dropped to around 150 Mbps down and 40 Mbps up. My kids weren’t happy with that, to say the least!

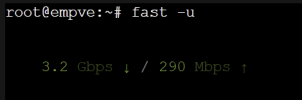

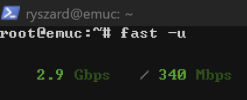

After trying different setups, I finally got things working by using Open vSwitch (OVS) for the bridges. My WAN bridge sits on an Intel I226 NIC, and my internal bridge is an OVS bond across the two ports of an Intel X540-BT2. Now, during off-peak hours like 4 AM, I hit my ISP’s speed limit and sometimes even exceed it, though I’m not sure how accurate those readings are. During busy times when my kids are streaming, things are still pretty good, as you can see in my screenshots.

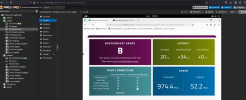

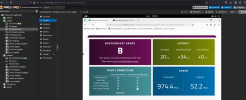
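For anyone wanting to try the bonded internal bridge: on Proxmox, an OVS bond and the bridge it plugs into are defined in `/etc/network/interfaces` as an `OVSBond` stanza plus an `OVSBridge` stanza, and the host needs the `openvswitch-switch` package installed. Here is a small Python sketch that renders that pair of stanzas. The NIC names, `bond0`, `vmbr1`, and the `active-backup` bond mode are placeholders picked for illustration, not taken from my setup.

```python
"""Sketch: render an OVS bond + bridge in Proxmox's /etc/network/interfaces format.

Hypothetical: NIC names, bond name, bridge name and bond_mode are placeholders.
"""


def ovs_bond_bridge(bridge: str, bond: str, nics: list[str], bond_mode: str) -> str:
    """Return two ifupdown stanzas: an OVSBond attached to an OVSBridge."""
    return "\n".join([
        # The bond groups the physical ports and names the bridge it joins.
        f"auto {bond}",
        f"iface {bond} inet manual",
        f"\tovs_bonds {' '.join(nics)}",
        "\tovs_type OVSBond",
        f"\tovs_bridge {bridge}",
        f"\tovs_options bond_mode={bond_mode}",
        "",
        # The bridge lists the bond as its port; VMs attach to the bridge.
        f"auto {bridge}",
        f"iface {bridge} inet manual",
        "\tovs_type OVSBridge",
        f"\tovs_ports {bond}",
        "",
    ])


if __name__ == "__main__":
    # Hypothetical X540 port names; check the real ones with `ip link`.
    print(ovs_bond_bridge("vmbr1", "bond0", ["enp1s0f0", "enp1s0f1"], "active-backup"))
```

`active-backup` uses one link at a time and needs no switch support; `balance-slb`, or `balance-tcp` together with LACP on the switch, spreads traffic across both ports.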

The only problem I’m still dealing with is bufferbloat. OPNsense’s traffic shaping doesn’t fully work with virtio for me, but I think setting the NIC queues to 6 might help. I’ll be testing that soon.

I’m thinking about writing a guide on this because I’m excited it worked, especially after reading so many negative things about virtio performance. For now, I just wanted to share the good news.

```
root@midgard:~# qm config 100
agent: 1
bios: ovmf
boot: order=ide0
cores: 6
cpu: x86-64-v2-AES,flags=+aes
efidisk0: vms:vm-100-disk-0,efitype=4m,size=1M
ide0: vms:vm-100-disk-1,size=32G
machine: q35
memory: 8048
meta: creation-qemu=9.0.2,ctime=1735731223
name: OPNSENSE
net0: virtio=BC:24:11:2D:BA:DD,bridge=vmbr2,queues=8
net1: virtio=BC:24:11:7C:27:A2,bridge=vmbr1,queues=6
numa: 0
onboot: 1
ostype: other
scsihw: virtio-scsi-single
smbios1: uuid=06768059-2e63-4ee6-b4a3-3dc40e0958b4
sockets: 1
tags: opnsense;prod
vmgenid: fa2a3330-ad42-48a1-b7f5-11179d1ba82d
```
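On the queues idea: the Proxmox VE admin guide recommends setting the virtio multiqueue count equal to the guest’s vCPU count, which is sockets times cores. In the config above that is 1 × 6 = 6, while net0 is set to queues=8. Below is a small Python sketch that flags that kind of mismatch, assuming the plain `key: value` lines that `qm config` prints; the function names are made up for illustration. It only checks the numbers and says nothing about whether matching them fixes bufferbloat.

```python
"""Sketch: flag virtio NICs whose multiqueue count differs from the VM's vCPUs.

Assumes plain 'key: value' lines as printed by `qm config <vmid>`.
"""
import re

# Relevant lines copied from the `qm config 100` output above.
SAMPLE = """\
cores: 6
net0: virtio=BC:24:11:2D:BA:DD,bridge=vmbr2,queues=8
net1: virtio=BC:24:11:7C:27:A2,bridge=vmbr1,queues=6
sockets: 1
"""


def parse_config(text: str) -> dict[str, str]:
    """Turn 'key: value' lines from qm config output into a dict."""
    config: dict[str, str] = {}
    for line in text.splitlines():
        key, sep, value = line.partition(": ")
        if sep:  # skip blank lines and anything that isn't "key: value"
            config[key.strip()] = value.strip()
    return config


def queue_mismatches(config: dict[str, str]) -> dict[str, tuple[int, int]]:
    """Return {nic: (queues, vcpus)} for virtio NICs whose queue count != vCPUs."""
    # vCPUs = sockets x cores; both default to 1 in Proxmox when unset.
    vcpus = int(config.get("sockets", "1")) * int(config.get("cores", "1"))
    result: dict[str, tuple[int, int]] = {}
    for key, value in config.items():
        if not re.fullmatch(r"net\d+", key) or not value.startswith("virtio="):
            continue
        # "virtio=MAC,bridge=vmbrN,queues=N" -> {"virtio": MAC, "bridge": ..., ...}
        options = dict(part.split("=", 1) for part in value.split(",") if "=" in part)
        # A missing queues option is treated as a single queue (multiqueue off).
        queues = int(options.get("queues", "1"))
        if queues != vcpus:
            result[key] = (queues, vcpus)
    return result


if __name__ == "__main__":
    for nic, (queues, vcpus) in queue_mismatches(parse_config(SAMPLE)).items():
        print(f"{nic}: queues={queues}, vCPUs={vcpus}")
```

Run against the sample, it reports only net0 (queues=8 against 6 vCPUs); net1 already matches.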