I have a bunch of older servers; almost all of them have a 4-port 1 GbE card and 2 onboard 1 GbE ports.

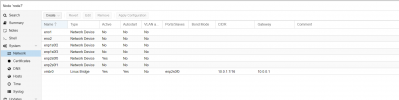


Right now I am only using one of the onboard NICs on each node. I have a Linux bridge, vmbr0, on that onboard port, and all the VMs and LXCs run over that one 1 GbE port to my switch and then out through the 1 Gb WAN uplink.
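
For reference, the single-NIC layout described above corresponds roughly to a Proxmox `/etc/network/interfaces` fragment like the one this small Python helper prints. It is a sketch, not the actual config from these nodes: the interface name `eno1`, the `192.168.1.x` addresses, and the helper `render_single_bridge` are all hypothetical.

```python
# Sketch of the single-NIC layout as a Proxmox-style /etc/network/interfaces
# fragment. Interface name and addresses are hypothetical placeholders.


def render_single_bridge(bridge: str, port: str, cidr: str, gateway: str) -> str:
    """Return the physical port stanza (no IP) plus a Linux bridge on top of it."""
    lines = [
        f"iface {port} inet manual",  # the physical NIC itself carries no address
        "",
        f"auto {bridge}",
        f"iface {bridge} inet static",
        f"    address {cidr}",
        f"    gateway {gateway}",
        f"    bridge-ports {port}",  # VM/LXC, Ceph and corosync traffic all share this port
        "    bridge-stp off",
        "    bridge-fd 0",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical values only.
    print(render_single_bridge("vmbr0", "eno1", "192.168.1.11/24", "192.168.1.1"))
```

Everything the node does, from guest traffic to cluster and storage traffic, leaves through the one `bridge-ports` entry.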

View attachment 34486

It worked fine until I added a Ceph cluster across the whole stack of nodes. It still works, but I see a lot of traffic on that port and slow speeds when moving or live-migrating a VM from one node to another.
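
Some rough arithmetic shows why one shared 1 Gb/s port struggles here. The sketch assumes ideal line rate and a hypothetical VM with 8 GiB of RAM. With disks on Ceph RBD, a live migration mainly has to copy RAM, while each write to a replicated Ceph pool (the Proxmox default pool `size` is 3) can put up to three copies of the data on the network. Both compete with VM traffic on the same port. The helper names are illustrative.

```python
# Back-of-the-envelope transfer times on a shared 1 Gb/s port.
# Assumes ideal line rate (efficiency=1.0); real throughput is lower.

GIB = 1024 ** 3  # bytes in one GiB


def transfer_seconds(gib: float, link_gbps: float = 1.0, efficiency: float = 1.0) -> float:
    """Lower bound on seconds to move `gib` GiB over a `link_gbps` Gbit/s link."""
    bits = gib * GIB * 8
    return bits / (link_gbps * 1e9 * efficiency)


def ceph_write_wire_bytes(payload_bytes: int, pool_size: int = 3) -> int:
    """Worst-case network bytes for one replicated Ceph write:
    client -> primary OSD, then primary -> (pool_size - 1) replicas,
    assuming the client and every replica sit on different hosts."""
    return payload_bytes * pool_size


if __name__ == "__main__":
    # Hypothetical 8 GiB VM: with disks on Ceph, live migration mostly copies
    # RAM (plus any pages the guest dirties while the copy runs).
    for gbps in (1.0, 10.0):
        print(f"8 GiB at {gbps:g} Gb/s: {transfer_seconds(8, gbps):.1f} s minimum")
    print("1 GiB written to a size=3 pool:",
          ceph_write_wire_bytes(GIB) / GIB, "GiB on the wire (worst case)")
```

At 1 Gb/s the 8 GiB copy needs at least about 69 s; at 10 Gb/s, about 7 s. In practice it will be slower, because the migration shares the link with Ceph replication and guest traffic.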

I would like an expert's advice (other than buying new hardware, which is a given; tips on cheap 10 GbE cards I could install are welcome) on HOW to separate Internet uplink traffic from local corosync, Ceph, and management traffic using all 6 available ports.
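
One way to reason about the split is to list each traffic class against the six ports and check that no port is shared and none sits idle. The sketch below does that. The interface names, the `check_plan` helper, and the choice of the second onboard port for management are assumptions for illustration, not a recommendation.

```python
from collections import Counter

# Hypothetical interface names: two onboard ports plus one 4-port card.
# The real names can be checked with `ip link` on each node.
ONBOARD = ["eno1", "eno2"]
CARD = ["enp3s0f0", "enp3s0f1", "enp3s0f2", "enp3s0f3"]


def check_plan(plan: dict[str, list[str]], ports: list[str]) -> tuple[list[str], list[str], list[str]]:
    """Return (ports shared by more than one traffic class, ports left idle,
    ports named in the plan that do not exist on the node)."""
    counts = Counter(port for members in plan.values() for port in members)
    shared = sorted(p for p, n in counts.items() if n > 1)
    idle = sorted(p for p in ports if p not in counts)
    unknown = sorted(p for p in counts if p not in ports)
    return shared, idle, unknown


# One possible split, for illustration only: the bond0/bond1 idea from the
# post, plus the second onboard port for management (an assumption).
PLAN = {
    "vmbr0: VM/LXC traffic + WAN uplink": [ONBOARD[0]],
    "management (GUI/SSH)": [ONBOARD[1]],
    "bond0: corosync + node-to-node": CARD[0:2],
    "bond1: Ceph": CARD[2:4],
}

if __name__ == "__main__":
    shared, idle, unknown = check_plan(PLAN, ONBOARD + CARD)
    print("shared:", shared, "idle:", idle, "unknown:", unknown)
```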

I was thinking of using the 4-port card for all Ceph and node-to-node traffic, but I'm not sure what the best setup is. Should I set up bonds on ports 0/1 and 2/3, then somehow put PVE cluster communication over one bond and Ceph over the other? How?
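
The bond idea from the post could look like the following `/etc/network/interfaces` stanzas, printed by a hypothetical `render_bond` helper. Interface names, the `10.10.10.0/24` and `10.10.20.0/24` subnets, and the bond modes are placeholders. `active-backup` works with any switch; `802.3ad` (LACP) needs matching configuration on the switch.

```python
# Sketch: render ifupdown2 stanzas for the two bonds proposed above.
# Interface names, subnets, and bond modes are assumptions, not a recommendation.

LINUX_BOND_MODES = {
    "balance-rr", "active-backup", "balance-xor", "broadcast",
    "802.3ad", "balance-tlb", "balance-alb",
}


def render_bond(name: str, slaves: list[str], cidr: str, mode: str = "active-backup") -> str:
    """Return stanzas for the member NICs plus a bond carrying an IP directly.

    No bridge is needed because no VM attaches to these networks, and no
    gateway is set because the default route stays on vmbr0.
    """
    if mode not in LINUX_BOND_MODES:
        raise ValueError(f"unknown bonding mode: {mode}")
    lines = [f"iface {s} inet manual" for s in slaves]
    lines += [
        "",
        f"auto {name}",
        f"iface {name} inet static",
        f"    address {cidr}",
        f"    bond-slaves {' '.join(slaves)}",
        "    bond-miimon 100",
        f"    bond-mode {mode}",
    ]
    if mode == "802.3ad":
        # Hash on IP+port so different flows can land on different members.
        lines.append("    bond-xmit-hash-policy layer3+4")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # bond0 = card ports 0/1 for PVE cluster traffic, bond1 = ports 2/3 for Ceph.
    print(render_bond("bond0", ["enp3s0f0", "enp3s0f1"], "10.10.10.11/24"))
    print(render_bond("bond1", ["enp3s0f2", "enp3s0f3"], "10.10.20.11/24", mode="802.3ad"))
```

Bonding two 1 Gb/s ports does not give a single migration or a single Ceph connection 2 Gb/s: each flow is hashed onto one member link, so the gain shows up only across many parallel flows.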

I do want to use HA and learn the best practice. Everything works fine on one NIC, but I am 100% sure that under heavier traffic it will crawl to a halt and drag down the Ceph cluster and everything else.
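
Proxmox HA relies on corosync for quorum, and corosync is sensitive to latency: if Ceph or migration traffic saturates its link, nodes can lose quorum and, with HA enabled, fence themselves. Corosync 3 with the knet transport can use more than one link per node, listed as `ring0_addr`, `ring1_addr`, and so on in `/etc/pve/corosync.conf`. The sketch below renders that `nodelist` format; the node names, node IDs, addresses, and the `render_nodelist` helper are hypothetical. It is meant for comparing against the existing file, not for overwriting it (Proxmox also expects `config_version` in the `totem` section to be increased on every edit).

```python
# Sketch: render a corosync.conf `nodelist` where each node has two links,
# so cluster communication (and therefore HA) survives losing one network.
# Names, node IDs, and addresses are hypothetical.


def render_nodelist(nodes: list[tuple[str, int, list[str]]]) -> str:
    """nodes: (name, nodeid, [link0_addr, link1_addr, ...]) per node.

    Node IDs are taken as given, since an existing cluster already has them.
    """
    out = ["nodelist {"]
    for name, nodeid, addrs in nodes:
        out += [
            "  node {",
            f"    name: {name}",
            f"    nodeid: {nodeid}",
            "    quorum_votes: 1",
        ]
        # ringN_addr is this node's address on corosync link N
        out += [f"    ring{i}_addr: {addr}" for i, addr in enumerate(addrs)]
        out.append("  }")
    out.append("}")
    return "\n".join(out) + "\n"


if __name__ == "__main__":
    # link0 on a dedicated cluster network, link1 as fallback on the vmbr0 subnet.
    print(render_nodelist([
        ("pve1", 1, ["10.10.10.11", "192.168.1.11"]),
        ("pve2", 2, ["10.10.10.12", "192.168.1.12"]),
        ("pve3", 3, ["10.10.10.13", "192.168.1.13"]),
    ]))
```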

What would you suggest I do with 6x 1 GbE NICs?
