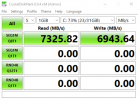

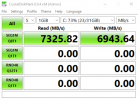

I set up a brand-new Dell PowerEdge R6515 with a 24-core AMD EPYC CPU and NVMe storage. If I store the virtual disk on the NVMe via local LVM in Proxmox, I get the expected speeds in a Windows VM.

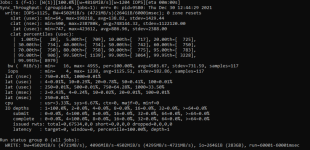

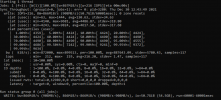

If I pass the NVMe storage through to the VM, however, performance is severely bottlenecked and I cannot figure out where the issue is. Any thoughts?