Hey all,

This is a long post. Hopefully I'm giving enough information to go on.

I'm trying to complete a trial of a VDI solution we're hoping to migrate away from VMware Horizon View to. The software has support for Proxmox, which we've used on our primary virtualization cluster for a few years now. I have the NVIDIA GRID GPU drivers and tools installed and running, but I'm running into an issue.

I should add - we're a licensed NVIDIA GRID vGPU customer - we're not using the vgpu_unlock modification or anything like that.

I have followed the guide here:

https://pve.proxmox.com/wiki/NVIDIA_vGPU_on_Proxmox_VE

Server specs:

Dell PowerEdge R7525

2x AMD EPYC 7F72 (24c48t) CPUs

1.0TB RAM

3x NVIDIA A10 GPUs

In the Dell BIOS I have "SR-IOV Global Enable" enabled.

UEFI with Secure Boot Enabled

NVIDIA Drivers were signed, keys adoped in MOK

Proxmox 8.1 (with latest updates as of this post)

NVIDIA-GRID-Linux-KVM-550.54.16-550.54.15-551.78 package from NVIDIA's enterprise download site

/proc/cmdline:

Linux kernel reports at boot:

I do not see any DMAR errors.

All GPUs (and their sub-PCIe devices) are displayed in separate IOMMU groups, visible using:

pvesh get /nodes/localhost/hardware/pci --pci-class-blacklist ""

nvidia-smi vgpu displays:

I also see all of the NVIDIA profiles available in:

/sys/bus/pci/devices/0000:*/mdev_supported_types/*

However,

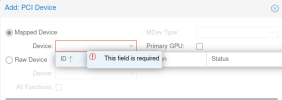

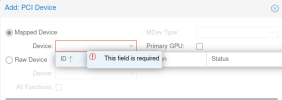

The Proxmox interface will not show any available devices:

I'm not sure what to look at next.

Thanks in advance for any thoughts or suggestions.

This is a long post. Hopefully I'm giving enough information to go on.

I'm trying to complete a trial of a VDI solution that we're hoping to migrate to from VMware Horizon View. The software supports Proxmox, which we've used on our primary virtualization cluster for a few years now. I have the NVIDIA GRID GPU drivers and tools installed and running, but I'm running into an issue.

I should add - we're a licensed NVIDIA GRID vGPU customer - we're not using the vgpu_unlock modification or anything like that.

I have followed the guide here:

https://pve.proxmox.com/wiki/NVIDIA_vGPU_on_Proxmox_VE

Server specs:

Dell PowerEdge R7525

2x AMD EPYC 7F72 (24c48t) CPUs

1.0TB RAM

3x NVIDIA A10 GPUs

In the Dell BIOS I have "SR-IOV Global Enable" enabled.

UEFI with Secure Boot Enabled

NVIDIA drivers were signed, keys enrolled in MOK

Proxmox 8.1 (with latest updates as of this post)

NVIDIA-GRID-Linux-KVM-550.54.16-550.54.15-551.78 package from NVIDIA's enterprise download site

/proc/cmdline:

BOOT_IMAGE=/vmlinuz-6.5.13-5-pve root=ZFS=/ROOT/pve-1 ro root=ZFS=rpool/ROOT/pve-1 boot=zfs quiet amd_iommu=on iommu=pt

Linux kernel reports at boot:

AMD-Vi: Interrupt remapping enabled
pci 0000:60:00.2: AMD-Vi: IOMMU performance counters supported
pci 0000:40:00.2: AMD-Vi: IOMMU performance counters supported
pci 0000:20:00.2: AMD-Vi: IOMMU performance counters supported
pci 0000:00:00.2: AMD-Vi: IOMMU performance counters supported
pci 0000:e0:00.2: AMD-Vi: IOMMU performance counters supported
pci 0000:c0:00.2: AMD-Vi: IOMMU performance counters supported
pci 0000:a0:00.2: AMD-Vi: IOMMU performance counters supported
pci 0000:80:00.2: AMD-Vi: IOMMU performance counters supported
pci 0000:60:00.2: AMD-Vi: Found IOMMU cap 0x40
pci 0000:40:00.2: AMD-Vi: Found IOMMU cap 0x40
pci 0000:20:00.2: AMD-Vi: Found IOMMU cap 0x40
pci 0000:00:00.2: AMD-Vi: Found IOMMU cap 0x40
pci 0000:e0:00.2: AMD-Vi: Found IOMMU cap 0x40
pci 0000:c0:00.2: AMD-Vi: Found IOMMU cap 0x40
pci 0000:a0:00.2: AMD-Vi: Found IOMMU cap 0x40
pci 0000:80:00.2: AMD-Vi: Found IOMMU cap 0x40
perf/amd_iommu: Detected AMD IOMMU #0 (2 banks, 4 counters/bank).
perf/amd_iommu: Detected AMD IOMMU #1 (2 banks, 4 counters/bank).
perf/amd_iommu: Detected AMD IOMMU #2 (2 banks, 4 counters/bank).
perf/amd_iommu: Detected AMD IOMMU #3 (2 banks, 4 counters/bank).
perf/amd_iommu: Detected AMD IOMMU #4 (2 banks, 4 counters/bank).
perf/amd_iommu: Detected AMD IOMMU #5 (2 banks, 4 counters/bank).
perf/amd_iommu: Detected AMD IOMMU #6 (2 banks, 4 counters/bank).
perf/amd_iommu: Detected AMD IOMMU #7 (2 banks, 4 counters/bank).

I do not see any DMAR errors.
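In case it helps anyone compare against their own host, here is a rough Python sketch for pulling the IOMMU-related parameters out of a kernel command line like the one above. The function names `parse_cmdline` and `iommu_summary` are made up for this post, and the script only reports what is on the command line; it does not say whether a given value is one the kernel actually honours.

```python
from pathlib import Path
from typing import Dict, List, Optional


def parse_cmdline(text: str) -> Dict[str, List[str]]:
    """Split a kernel command line into {parameter: [values, ...]}.

    Bare flags such as `quiet` get an empty-string value. Repeated
    parameters (for example two `root=` entries) keep every value in order.
    """
    params: Dict[str, List[str]] = {}
    for token in text.split():
        # Split on the first '=' only, so 'root=ZFS=rpool/...' keeps its value whole.
        key, _, value = token.partition("=")
        params.setdefault(key, []).append(value)
    return params


def iommu_summary(text: str) -> Dict[str, Optional[str]]:
    """Return the last value given for amd_iommu and iommu, or None if absent."""
    params = parse_cmdline(text)
    return {
        key: (params[key][-1] if key in params else None)
        for key in ("amd_iommu", "iommu")
    }


if __name__ == "__main__":
    cmdline_path = Path("/proc/cmdline")
    if cmdline_path.exists():
        for key, value in iommu_summary(cmdline_path.read_text()).items():
            print(f"{key}: {value if value is not None else '(not set)'}")
```

Run on the host, this prints one line per parameter, so it is easy to spot when a bootloader change did not actually reach the running kernel.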

All GPUs (and their sub-PCIe devices) are displayed in separate IOMMU groups, visible using:

pvesh get /nodes/localhost/hardware/pci --pci-class-blacklist ""
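The same grouping can be cross-checked straight from sysfs, independent of `pvesh`. This is only a sketch: it assumes the standard `/sys/kernel/iommu_groups/<group>/devices/<pci-address>` layout, and the helper names `iommu_groups` and `shared_groups` are hypothetical.

```python
from pathlib import Path
from typing import Dict, List


def iommu_groups(root: str = "/sys/kernel/iommu_groups") -> Dict[int, List[str]]:
    """Map each IOMMU group number to the sorted PCI addresses it contains."""
    base = Path(root)
    groups: Dict[int, List[str]] = {}
    if not base.is_dir():
        # No IOMMU groups exposed (IOMMU disabled, or not a Linux host).
        return groups
    for group_dir in base.iterdir():
        if not group_dir.name.isdigit():
            continue
        devices_dir = group_dir / "devices"
        devices = sorted(p.name for p in devices_dir.iterdir()) if devices_dir.is_dir() else []
        groups[int(group_dir.name)] = devices
    # Return the groups ordered by group number for stable output.
    return dict(sorted(groups.items()))


def shared_groups(groups: Dict[int, List[str]]) -> Dict[int, List[str]]:
    """Keep only the groups that contain more than one device."""
    return {group: devs for group, devs in groups.items() if len(devs) > 1}


if __name__ == "__main__":
    for group, devices in iommu_groups().items():
        print(f"group {group}: {', '.join(devices)}")
```

`shared_groups(iommu_groups())` narrows the output to groups holding more than one device, which is usually the part worth looking at when checking isolation.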

nvidia-smi vgpu displays:

+-----------------------------------------------------------------------------+
| NVIDIA-SMI 550.54.16              Driver Version: 550.54.16                 |
|---------------------------------+------------------------------+------------|
| GPU  Name                       | Bus-Id                       | GPU-Util   |
|      vGPU ID     Name           | VM ID     VM Name            | vGPU-Util  |
|=================================+==============================+============|
|   0  NVIDIA A10                 | 00000000:21:00.0             |   0%       |
+---------------------------------+------------------------------+------------+
|   1  NVIDIA A10                 | 00000000:81:00.0             |   0%       |
+---------------------------------+------------------------------+------------+
|   2  NVIDIA A10                 | 00000000:E2:00.0             |   0%       |
+---------------------------------+------------------------------+------------+

I also see all of the NVIDIA profiles available in:

/sys/bus/pci/devices/0000:*/mdev_supported_types/*
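To make that listing easier to share, here is a small sketch that walks the same sysfs path and records, per PCI device, each mdev type with its `name` and `available_instances` attributes. It assumes the usual kernel mdev sysfs layout; `list_mdev_types` is a name I made up, and this is not necessarily how the Proxmox interface itself enumerates devices.

```python
from pathlib import Path
from typing import Dict, List, Optional


def _read(path: Path) -> Optional[str]:
    """Return the stripped file content, or None if it cannot be read."""
    try:
        return path.read_text().strip()
    except OSError:
        return None


def list_mdev_types(root: str = "/sys/bus/pci/devices") -> List[Dict[str, object]]:
    """Collect every mdev type advertised under <root>/<pci-address>/mdev_supported_types."""
    base = Path(root)
    found: List[Dict[str, object]] = []
    if not base.is_dir():
        return found
    for device_dir in sorted(base.iterdir()):
        types_dir = device_dir / "mdev_supported_types"
        if not types_dir.is_dir():
            continue
        for type_dir in sorted(types_dir.iterdir()):
            raw = _read(type_dir / "available_instances")
            found.append({
                "device": device_dir.name,
                "type": type_dir.name,
                "name": _read(type_dir / "name"),
                # None means the attribute was missing or not a plain number.
                "available_instances": int(raw) if raw is not None and raw.isdigit() else None,
            })
    return found


if __name__ == "__main__":
    for entry in list_mdev_types():
        print(f"{entry['device']}  {entry['type']}  {entry['name']}  "
              f"available={entry['available_instances']}")
```

The output shows which PCI addresses actually carry the profiles and how many instances each reports, which can then be compared with what the GUI lists.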

However,

The Proxmox interface will not show any available devices:

I'm not sure what to look at next.

Thanks in advance for any thoughts or suggestions.