Hello,

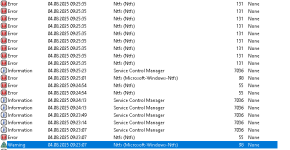

We are experiencing a strange NTFS data corruption problem in some of our VMs.

The corruption occurs only occasionally, on the "pool disks" of our archiving application VMs. At some point Windows refuses to use the disks until a chkdsk repair has been done. Although the repair generally works, some files are usually damaged afterwards, which is not acceptable in the long run.

The disks are about 3T in size and are (mostly) under some kind of load.

The application works like this:

* receiving the customer data and saving it to the pool disk

* reading the data again and archiving/writing it to tape

* deleting the data from disk
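For anyone trying to reproduce this, a loop like the following mimics the write, read-back and delete pattern of the application and checks every file with SHA-256, so silent corruption would show up as a digest mismatch. It is only a hypothetical sketch: the function names, file names and sizes are made up, it is not our archiving application, it writes nothing to tape, and it assumes Python 3.9+ inside the Windows guest.

```python
import hashlib
import os
import tempfile


def write_file(path: str, size_bytes: int) -> str:
    """Write random data to path, fsync it and return its SHA-256 hex digest."""
    data = os.urandom(size_bytes)
    with open(path, "wb") as f:
        f.write(data)
        f.flush()
        os.fsync(f.fileno())  # ask the OS to push the data down to the (virtual) disk
    return hashlib.sha256(data).hexdigest()


def read_digest(path: str, chunk_size: int = 1 << 20) -> str:
    """Re-read the file in chunks and return its SHA-256 hex digest."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def run_cycle(directory: str, n_files: int = 10, size_bytes: int = 1 << 20) -> list[str]:
    """One write -> read back -> delete cycle (hypothetical helper).

    Returns the names of files whose re-read content no longer matches.
    Note: an immediate read-back may be served from the guest's file cache,
    so this only catches corruption that is visible through that cache.
    """
    expected = {}
    for i in range(n_files):
        name = f"pool_test_{i:05d}.bin"  # hypothetical file naming scheme
        expected[name] = write_file(os.path.join(directory, name), size_bytes)
    corrupted = [
        name for name, digest in expected.items()
        if read_digest(os.path.join(directory, name)) != digest
    ]
    for name in expected:
        os.remove(os.path.join(directory, name))
    return corrupted


if __name__ == "__main__":
    # Hypothetical usage: replace the temp dir with a folder on the pool disk.
    target = tempfile.mkdtemp()
    bad = run_cycle(target, n_files=5, size_bytes=256 * 1024)
    print("corrupted files:", bad)
    os.rmdir(target)
```

Running the cycle repeatedly (and re-reading files after a delay or a reboot instead of immediately) would be closer to the real workload, since the application reads the data back only when it goes to tape.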

Our configuration:

* 3 nodes with Proxmox 8.4

* Ceph 18.2.4 with ~150T total disk space (nvme)

* EC pool with ~90T usable space

* affected VMs:

  * all Windows Server 2022

  * all disks are RBD images and accessed via the "VirtIO SCSI single" controller

  * 500G system disk (no problems so far)

  * 2.5T or 3T pool/data disk (problems occur irregularly)

Since the problems occur only irregularly, they are really hard to debug. As a first step we deactivated the writeback cache on the pool disks, which seems to have at least reduced the frequency of the problems, but this could also just be a coincidence.

On one node we tried switching the file system to ReFS, and so far that disk has had no more problems, which of course could also just be a coincidence. As my colleagues had serious other problems with ReFS in the past, we are still very reluctant to switch to it completely right now.

Ceph itself is running fine, and neither the system disks of the affected VMs nor any other VMs (Linux or Windows) seem to have these problems.

* Has anybody run into similar problems and found a good solution, or at least a workaround?

* Does anybody have experience with ReFS, and would you recommend using it?