I have a simple Proxmox setup with two hosts. The hosts are connected to a switch at 10Gbps and also directly to each other at 10Gbps.

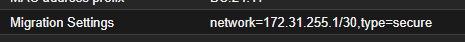


I have the migration settings set to use this directly connected network.

I am also able to achieve 10Gbps connectivity using iperf3.

Code:

```
-----------------------------------------------------------
Server listening on 5201 (test #1)
-----------------------------------------------------------
Accepted connection from 172.31.255.2, port 47640
[ 5] local 172.31.255.1 port 5201 connected to 172.31.255.2 port 47644
[ ID] Interval Transfer Bitrate
[ 5] 0.00-1.00 sec 1.15 GBytes 9.89 Gbits/sec
[ 5] 1.00-2.00 sec 1.15 GBytes 9.90 Gbits/sec
[ 5] 2.00-3.00 sec 1.15 GBytes 9.90 Gbits/sec
[ 5] 3.00-4.00 sec 1.15 GBytes 9.90 Gbits/sec
[ 5] 4.00-5.00 sec 1.15 GBytes 9.90 Gbits/sec
[ 5] 5.00-6.00 sec 1.15 GBytes 9.90 Gbits/sec
[ 5] 6.00-7.00 sec 1.15 GBytes 9.90 Gbits/sec
[ 5] 7.00-8.00 sec 1.15 GBytes 9.90 Gbits/sec
- - - - - - - - - - - - - - - - - - - - - - - - -
[ ID] Interval Transfer Bitrate
[ 5] 0.00-8.00 sec 9.61 GBytes 10.3 Gbits/sec receiver
iperf3: the client has terminated
-----------------------------------------------------------
```

However, when I perform a migration between hosts, I get nowhere near 10Gbps. A VM that I would expect to take 2 minutes at most takes over 6 minutes. Is there something I am doing wrong? I thought this would be more or less "plug and play". Any help is greatly appreciated.
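As a rough best-case check, the iperf3 numbers can be turned into an expected transfer time. iperf3 prints Transfer in binary GBytes (2^30 bytes) and Bitrate in decimal Gbits/sec, and the output above is consistent with that (1.15 GBytes in one second is about 9.9 Gbit/s). The sketch below uses hypothetical helper names, takes the 25.0 GiB disk size from the migration log, and ignores SSH, NBD and storage overhead, so it gives a lower bound on duration rather than a prediction.

```python
# Sanity-check iperf3's units: "Transfer" is in binary GBytes (GiB, 2**30 bytes),
# while "Bitrate" is in decimal Gbits/sec (10**9 bits per second).
GIB = 2**30  # bytes in one GiB


def gbit_per_s(gib: float, seconds: float) -> float:
    """Convert a transfer of `gib` GiB over `seconds` into decimal Gbit/s."""
    return gib * GIB * 8 / 1e9 / seconds


def seconds_at_rate(gib: float, gbit_s: float) -> float:
    """Time to move `gib` GiB at a sustained `gbit_s` Gbit/s, ignoring all overhead."""
    return gib * GIB * 8 / (gbit_s * 1e9)


per_interval = gbit_per_s(1.15, 1.0)  # one 1-second iperf3 interval
summary = gbit_per_s(9.61, 8.0)       # the 0.00-8.00 sec receiver summary line
ideal = seconds_at_rate(25.0, 9.9)    # 25.0 GiB disk (from the migration log) at ~9.9 Gbit/s

print(f"1.15 GiB in 1 s     -> {per_interval:.2f} Gbit/s")  # 9.88
print(f"9.61 GiB in 8 s     -> {summary:.1f} Gbit/s")       # 10.3
print(f"25 GiB at 9.9 Gb/s  -> {ideal:.0f} s")              # 22
```

At line rate the 25 GiB disk would move in roughly 22 seconds, well under the expected 2 minutes.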

Code:


```
2024-07-02 16:07:58 use dedicated network address for sending migration traffic (172.31.255.2)
2024-07-02 16:07:58 starting migration of VM 110 to node 'sisko' (172.31.255.2)
2024-07-02 16:07:58 found local disk 'fast:vm-110-disk-0' (attached)
2024-07-02 16:07:58 starting VM 110 on remote node 'sisko'
2024-07-02 16:07:59 volume 'fast:vm-110-disk-0' is 'fast:vm-110-disk-0' on the target
2024-07-02 16:07:59 start remote tunnel
2024-07-02 16:08:00 ssh tunnel ver 1
2024-07-02 16:08:00 starting storage migration
2024-07-02 16:08:00 scsi0: start migration to nbd:unix:/run/qemu-server/110_nbd.migrate:exportname=drive-scsi0
drive mirror is starting for drive-scsi0
drive-scsi0: transferred 0.0 B of 25.0 GiB (0.00%) in 0s
drive-scsi0: transferred 47.0 MiB of 25.0 GiB (0.18%) in 1s
drive-scsi0: transferred 67.0 MiB of 25.0 GiB (0.26%) in 2s
drive-scsi0: transferred 89.0 MiB of 25.0 GiB (0.35%) in 3s
drive-scsi0: transferred 93.0 MiB of 25.0 GiB (0.36%) in 4s
drive-scsi0: transferred 149.0 MiB of 25.0 GiB (0.58%) in 5s
drive-scsi0: transferred 175.0 MiB of 25.0 GiB (0.68%) in 6s
drive-scsi0: transferred 184.0 MiB of 25.0 GiB (0.72%) in 7s
drive-scsi0: transferred 217.0 MiB of 25.0 GiB (0.85%) in 8s
drive-scsi0: transferred 229.0 MiB of 25.0 GiB (0.89%) in 9s
drive-scsi0: transferred 239.0 MiB of 25.0 GiB (0.93%) in 10s
drive-scsi0: transferred 257.0 MiB of 25.0 GiB (1.00%) in 11s
drive-scsi0: transferred 272.0 MiB of 25.0 GiB (1.06%) in 12s
... OMITTED DUE TO CHARACTER LIMIT ...
drive-scsi0: transferred 16.2 GiB of 25.0 GiB (64.85%) in 4m 1s
drive-scsi0: transferred 16.3 GiB of 25.0 GiB (65.08%) in 4m 2s
drive-scsi0: transferred 16.3 GiB of 25.0 GiB (65.34%) in 4m 3s
drive-scsi0: transferred 16.4 GiB of 25.0 GiB (65.59%) in 4m 4s
drive-scsi0: transferred 16.5 GiB of 25.0 GiB (65.87%) in 4m 5s
drive-scsi0: transferred 16.5 GiB of 25.0 GiB (66.10%) in 4m 6s
drive-scsi0: transferred 16.6 GiB of 25.0 GiB (66.36%) in 4m 7s
drive-scsi0: transferred 16.6 GiB of 25.0 GiB (66.56%) in 4m 8s
drive-scsi0: transferred 16.7 GiB of 25.0 GiB (66.83%) in 4m 9s
drive-scsi0: transferred 16.8 GiB of 25.0 GiB (67.05%) in 4m 10s
drive-scsi0: transferred 16.8 GiB of 25.0 GiB (67.27%) in 4m 11s
drive-scsi0: transferred 16.9 GiB of 25.0 GiB (67.54%) in 4m 12s
drive-scsi0: transferred 16.9 GiB of 25.0 GiB (67.79%) in 4m 13s
drive-scsi0: transferred 17.0 GiB of 25.0 GiB (68.02%) in 4m 14s
drive-scsi0: transferred 17.1 GiB of 25.0 GiB (68.27%) in 4m 15s
drive-scsi0: transferred 17.1 GiB of 25.0 GiB (68.51%) in 4m 16s
drive-scsi0: transferred 17.2 GiB of 25.0 GiB (68.79%) in 4m 17s
drive-scsi0: transferred 17.3 GiB of 25.0 GiB (69.06%) in 4m 18s
drive-scsi0: transferred 17.3 GiB of 25.0 GiB (69.32%) in 4m 19s
drive-scsi0: transferred 17.4 GiB of 25.0 GiB (69.59%) in 4m 20s
drive-scsi0: transferred 17.5 GiB of 25.0 GiB (69.84%) in 4m 21s
drive-scsi0: transferred 17.5 GiB of 25.0 GiB (70.09%) in 4m 22s
drive-scsi0: transferred 17.6 GiB of 25.0 GiB (70.35%) in 4m 23s
drive-scsi0: transferred 17.7 GiB of 25.0 GiB (70.61%) in 4m 24s
drive-scsi0: transferred 17.7 GiB of 25.0 GiB (70.88%) in 4m 25s
drive-scsi0: transferred 17.8 GiB of 25.0 GiB (71.14%) in 4m 26s
drive-scsi0: transferred 17.9 GiB of 25.0 GiB (71.42%) in 4m 27s
drive-scsi0: transferred 17.9 GiB of 25.0 GiB (71.68%) in 4m 28s
drive-scsi0: transferred 18.0 GiB of 25.0 GiB (71.91%) in 4m 29s
drive-scsi0: transferred 18.0 GiB of 25.0 GiB (72.17%) in 4m 30s
drive-scsi0: transferred 18.1 GiB of 25.0 GiB (72.40%) in 4m 31s
drive-scsi0: transferred 18.2 GiB of 25.0 GiB (72.66%) in 4m 32s
drive-scsi0: transferred 18.2 GiB of 25.0 GiB (72.93%) in 4m 33s
drive-scsi0: transferred 18.3 GiB of 25.0 GiB (73.19%) in 4m 34s
drive-scsi0: transferred 18.4 GiB of 25.0 GiB (73.47%) in 4m 35s
drive-scsi0: transferred 18.4 GiB of 25.0 GiB (73.73%) in 4m 36s
drive-scsi0: transferred 18.5 GiB of 25.0 GiB (73.95%) in 4m 37s
drive-scsi0: transferred 18.5 GiB of 25.0 GiB (74.18%) in 4m 38s
drive-scsi0: transferred 18.6 GiB of 25.0 GiB (74.38%) in 4m 39s
drive-scsi0: transferred 18.6 GiB of 25.0 GiB (74.47%) in 4m 40s
drive-scsi0: transferred 18.6 GiB of 25.0 GiB (74.53%) in 4m 41s
drive-scsi0: transferred 18.8 GiB of 25.0 GiB (75.21%) in 4m 42s
drive-scsi0: transferred 18.9 GiB of 25.0 GiB (75.44%) in 4m 43s
drive-scsi0: transferred 18.9 GiB of 25.0 GiB (75.70%) in 4m 44s
drive-scsi0: transferred 19.0 GiB of 25.0 GiB (75.95%) in 4m 45s
drive-scsi0: transferred 19.0 GiB of 25.0 GiB (76.19%) in 4m 46s
drive-scsi0: transferred 19.1 GiB of 25.0 GiB (76.45%) in 4m 47s
drive-scsi0: transferred 19.2 GiB of 25.0 GiB (76.72%) in 4m 48s
drive-scsi0: transferred 19.2 GiB of 25.0 GiB (76.98%) in 4m 49s
drive-scsi0: transferred 19.3 GiB of 25.0 GiB (77.25%) in 4m 50s
drive-scsi0: transferred 19.4 GiB of 25.0 GiB (77.48%) in 4m 51s
drive-scsi0: transferred 19.4 GiB of 25.0 GiB (77.73%) in 4m 52s
drive-scsi0: transferred 19.5 GiB of 25.0 GiB (77.99%) in 4m 53s
drive-scsi0: transferred 19.6 GiB of 25.0 GiB (78.25%) in 4m 54s
drive-scsi0: transferred 19.6 GiB of 25.0 GiB (78.52%) in 4m 55s
drive-scsi0: transferred 19.7 GiB of 25.0 GiB (78.69%) in 4m 56s
drive-scsi0: transferred 19.7 GiB of 25.0 GiB (78.96%) in 4m 57s
drive-scsi0: transferred 19.8 GiB of 25.0 GiB (79.21%) in 4m 58s
drive-scsi0: transferred 19.9 GiB of 25.0 GiB (79.47%) in 4m 59s
drive-scsi0: transferred 19.9 GiB of 25.0 GiB (79.73%) in 5m
drive-scsi0: transferred 20.0 GiB of 25.0 GiB (79.98%) in 5m 1s
drive-scsi0: transferred 20.1 GiB of 25.0 GiB (80.22%) in 5m 2s
drive-scsi0: transferred 20.1 GiB of 25.0 GiB (80.47%) in 5m 3s
drive-scsi0: transferred 20.2 GiB of 25.0 GiB (80.71%) in 5m 4s
drive-scsi0: transferred 20.2 GiB of 25.0 GiB (80.96%) in 5m 5s
drive-scsi0: transferred 20.3 GiB of 25.0 GiB (81.23%) in 5m 6s
drive-scsi0: transferred 20.4 GiB of 25.0 GiB (81.47%) in 5m 7s
drive-scsi0: transferred 20.4 GiB of 25.0 GiB (81.76%) in 5m 8s
drive-scsi0: transferred 20.5 GiB of 25.0 GiB (81.99%) in 5m 9s
drive-scsi0: transferred 20.6 GiB of 25.0 GiB (82.26%) in 5m 10s
drive-scsi0: transferred 20.6 GiB of 25.0 GiB (82.44%) in 5m 11s
drive-scsi0: transferred 20.7 GiB of 25.0 GiB (82.71%) in 5m 12s
drive-scsi0: transferred 20.7 GiB of 25.0 GiB (82.99%) in 5m 13s
drive-scsi0: transferred 20.8 GiB of 25.0 GiB (83.24%) in 5m 14s
drive-scsi0: transferred 20.9 GiB of 25.0 GiB (83.51%) in 5m 15s
drive-scsi0: transferred 20.9 GiB of 25.0 GiB (83.77%) in 5m 16s
drive-scsi0: transferred 21.0 GiB of 25.0 GiB (84.02%) in 5m 17s
drive-scsi0: transferred 21.1 GiB of 25.0 GiB (84.27%) in 5m 18s
drive-scsi0: transferred 21.1 GiB of 25.0 GiB (84.54%) in 5m 19s
drive-scsi0: transferred 21.2 GiB of 25.0 GiB (84.80%) in 5m 20s
drive-scsi0: transferred 21.3 GiB of 25.0 GiB (85.04%) in 5m 21s
drive-scsi0: transferred 21.3 GiB of 25.0 GiB (85.29%) in 5m 22s
drive-scsi0: transferred 21.4 GiB of 25.0 GiB (85.57%) in 5m 23s
drive-scsi0: transferred 21.5 GiB of 25.0 GiB (85.83%) in 5m 24s
drive-scsi0: transferred 21.5 GiB of 25.0 GiB (86.10%) in 5m 25s
drive-scsi0: transferred 21.6 GiB of 25.0 GiB (86.36%) in 5m 26s
drive-scsi0: transferred 21.7 GiB of 25.0 GiB (86.60%) in 5m 27s
drive-scsi0: transferred 21.7 GiB of 25.0 GiB (86.86%) in 5m 28s
drive-scsi0: transferred 21.8 GiB of 25.0 GiB (87.11%) in 5m 29s
drive-scsi0: transferred 21.8 GiB of 25.0 GiB (87.29%) in 5m 30s
drive-scsi0: transferred 21.9 GiB of 25.0 GiB (87.53%) in 5m 31s
drive-scsi0: transferred 22.0 GiB of 25.0 GiB (87.80%) in 5m 32s
drive-scsi0: transferred 22.0 GiB of 25.0 GiB (88.06%) in 5m 33s
drive-scsi0: transferred 22.1 GiB of 25.0 GiB (88.32%) in 5m 35s
drive-scsi0: transferred 22.1 GiB of 25.0 GiB (88.55%) in 5m 36s
drive-scsi0: transferred 22.2 GiB of 25.0 GiB (88.82%) in 5m 37s
drive-scsi0: transferred 22.3 GiB of 25.0 GiB (89.08%) in 5m 38s
drive-scsi0: transferred 22.3 GiB of 25.0 GiB (89.31%) in 5m 39s
drive-scsi0: transferred 22.4 GiB of 25.0 GiB (89.56%) in 5m 40s
drive-scsi0: transferred 22.5 GiB of 25.0 GiB (89.84%) in 5m 41s
drive-scsi0: transferred 22.5 GiB of 25.0 GiB (90.09%) in 5m 42s
drive-scsi0: transferred 22.6 GiB of 25.0 GiB (90.35%) in 5m 43s
drive-scsi0: transferred 22.7 GiB of 25.0 GiB (90.61%) in 5m 44s
drive-scsi0: transferred 22.7 GiB of 25.0 GiB (90.87%) in 5m 45s
drive-scsi0: transferred 22.8 GiB of 25.0 GiB (91.13%) in 5m 46s
drive-scsi0: transferred 22.9 GiB of 25.0 GiB (91.40%) in 5m 47s
drive-scsi0: transferred 22.9 GiB of 25.0 GiB (91.64%) in 5m 48s
drive-scsi0: transferred 23.0 GiB of 25.0 GiB (91.89%) in 5m 49s
drive-scsi0: transferred 23.0 GiB of 25.0 GiB (92.16%) in 5m 50s
drive-scsi0: transferred 23.1 GiB of 25.0 GiB (92.40%) in 5m 51s
drive-scsi0: transferred 23.2 GiB of 25.0 GiB (92.65%) in 5m 52s
drive-scsi0: transferred 23.2 GiB of 25.0 GiB (92.90%) in 5m 53s
drive-scsi0: transferred 23.3 GiB of 25.0 GiB (93.15%) in 5m 54s
drive-scsi0: transferred 23.4 GiB of 25.0 GiB (93.41%) in 5m 55s
drive-scsi0: transferred 23.4 GiB of 25.0 GiB (93.65%) in 5m 56s
drive-scsi0: transferred 23.5 GiB of 25.0 GiB (93.92%) in 5m 57s
drive-scsi0: transferred 23.5 GiB of 25.0 GiB (94.18%) in 5m 58s
drive-scsi0: transferred 23.6 GiB of 25.0 GiB (94.41%) in 5m 59s
drive-scsi0: transferred 23.7 GiB of 25.0 GiB (94.66%) in 6m
drive-scsi0: transferred 23.7 GiB of 25.0 GiB (94.91%) in 6m 1s
drive-scsi0: transferred 23.8 GiB of 25.0 GiB (95.15%) in 6m 2s
drive-scsi0: transferred 23.9 GiB of 25.0 GiB (95.42%) in 6m 3s
drive-scsi0: transferred 23.9 GiB of 25.0 GiB (95.64%) in 6m 4s
drive-scsi0: transferred 24.0 GiB of 25.0 GiB (95.91%) in 6m 5s
drive-scsi0: transferred 24.0 GiB of 25.0 GiB (96.06%) in 6m 6s
drive-scsi0: transferred 24.0 GiB of 25.0 GiB (96.08%) in 6m 7s
drive-scsi0: transferred 24.0 GiB of 25.0 GiB (96.19%) in 6m 8s
drive-scsi0: transferred 24.5 GiB of 25.0 GiB (97.80%) in 6m 9s
drive-scsi0: transferred 24.7 GiB of 25.0 GiB (98.64%) in 6m 10s
drive-scsi0: transferred 25.0 GiB of 25.0 GiB (99.94%) in 6m 11s
drive-scsi0: transferred 25.0 GiB of 25.0 GiB (100.00%) in 6m 12s, ready
all 'mirror' jobs are ready
2024-07-02 16:14:12 starting online/live migration on unix:/run/qemu-server/110.migrate
2024-07-02 16:14:12 set migration capabilities
2024-07-02 16:14:12 migration downtime limit: 100 ms
2024-07-02 16:14:12 migration cachesize: 256.0 MiB
2024-07-02 16:14:12 set migration parameters
2024-07-02 16:14:12 start migrate command to unix:/run/qemu-server/110.migrate
2024-07-02 16:14:13 average migration speed: 2.0 GiB/s - downtime 44 ms
2024-07-02 16:14:13 migration status: completed
all 'mirror' jobs are ready
drive-scsi0: Completing block job_id...
drive-scsi0: Completed successfully.
drive-scsi0: mirror-job finished
2024-07-02 16:14:14 stopping NBD storage migration server on target.
Logical volume "vm-110-disk-0" successfully removed.
2024-07-02 16:14:16 migration finished successfully (duration 00:06:18)
TASK OK
```
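To put a number on "nowhere near 10Gbps", the drive-mirror progress lines can be reduced to an average rate. The sketch below is a hypothetical helper (the regex and function names are illustrative, not part of Proxmox) that assumes the progress-line format shown in the log above. Because the log rounds sizes to 0.1 GiB, rates over short windows are only approximate.

```python
import re

# Matches drive-mirror progress lines as they appear in the task log above, e.g.
# "drive-scsi0: transferred 16.2 GiB of 25.0 GiB (64.85%) in 4m 1s"
PROGRESS = re.compile(
    r"transferred (?P<done>[\d.]+) (?P<unit>B|KiB|MiB|GiB|TiB) of .*? in "
    r"(?:(?P<h>\d+)h ?)?(?:(?P<m>\d+)m ?)?(?:(?P<s>\d+)s)?"
)
UNIT = {"B": 1, "KiB": 2**10, "MiB": 2**20, "GiB": 2**30, "TiB": 2**40}


def parse_progress(lines):
    """Yield (elapsed_seconds, bytes_done) for every progress line; other lines are skipped."""
    for line in lines:
        match = PROGRESS.search(line)
        if not match:
            continue
        elapsed = (int(match["h"] or 0) * 3600
                   + int(match["m"] or 0) * 60
                   + int(match["s"] or 0))
        yield elapsed, float(match["done"]) * UNIT[match["unit"]]


def mib_per_s(samples):
    """Average rate in MiB/s between the first and last (elapsed, bytes) sample."""
    (t0, b0), (t1, b1) = samples[0], samples[-1]
    return (b1 - b0) / (t1 - t0) / 2**20


# A few lines copied from the log above.
log = """\
drive-scsi0: transferred 0.0 B of 25.0 GiB (0.00%) in 0s
drive-scsi0: transferred 272.0 MiB of 25.0 GiB (1.06%) in 12s
drive-scsi0: transferred 16.2 GiB of 25.0 GiB (64.85%) in 4m 1s
drive-scsi0: transferred 19.9 GiB of 25.0 GiB (79.73%) in 5m
drive-scsi0: transferred 25.0 GiB of 25.0 GiB (100.00%) in 6m 12s, ready
"""
samples = list(parse_progress(log.splitlines()))
overall = mib_per_s(samples)
print(f"overall: {overall:.1f} MiB/s = {overall * 2**20 * 8 / 1e9:.2f} Gbit/s")  # 68.8 MiB/s = 0.58 Gbit/s
print(f"4m 1s -> 5m: {mib_per_s(samples[2:4]):.1f} MiB/s")                        # 64.2 MiB/s
```

On these lines the storage phase averages roughly 69 MiB/s (about 0.58 Gbit/s), while the RAM phase that follows reports 2.0 GiB/s and finishes in about a second, so nearly all of the 6m 18s is spent in the disk mirror.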