Hi All

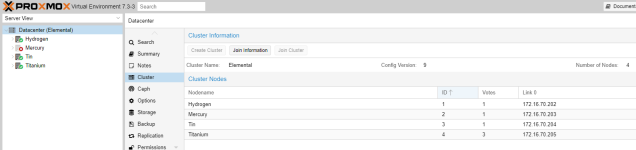

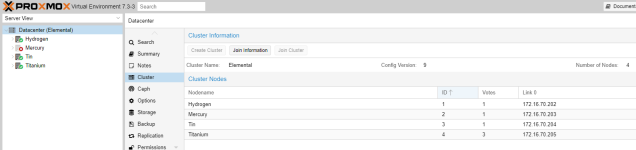

So I've been having a rejig of my environment. I added an extra node to my cluster (I now have 4), but one of the nodes refuses to reconnect to the cluster after a reboot. I've done heaps of googling, but with no joy. I want to avoid having to remove the node if possible.

My intent is to power down 2 of the nodes: I want to use the two power-efficient machines for day-to-day use, and power up the other two only when needed, if I'm doing some work or testing something in the lab. I've weighted one of the nodes (Titanium, 3 votes) with more votes than normal to prevent the whole cluster falling over when only two are operational.
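To sanity-check the vote weighting described above, here is a minimal shell sketch of the majority arithmetic. It assumes votequorum's default behaviour: no QDevice, no `expected_votes` override, none of the `wait_for_all`/`last_man_standing`/`auto_tie_breaker` options, and quorum being `expected_votes / 2 + 1` with integer division. The node combinations tested are only examples, not necessarily the machines that will stay on.

```shell
#!/usr/bin/env bash
# Sketch of votequorum-style majority arithmetic for the corosync.conf weights.
# Assumptions: expected_votes is the plain sum of every node's quorum_votes
# (no qdevice, no expected_votes override) and quorum = expected_votes / 2 + 1.

# quorum_votes per node, copied from the nodelist section of corosync.conf
declare -A votes=( [Hydrogen]=1 [Mercury]=1 [Tin]=1 [Titanium]=3 )

total=0
for n in "${!votes[@]}"; do
  total=$(( total + ${votes[$n]} ))
done
quorum=$(( total / 2 + 1 ))
echo "expected_votes=$total quorum=$quorum"

# Returns 0 if the named nodes together hold at least $quorum votes
is_quorate() {
  local sum=0 n
  for n in "$@"; do
    sum=$(( sum + ${votes[$n]:-0} ))   # unknown names count as 0 votes
  done
  (( sum >= quorum ))
}

# Example combinations of powered-on nodes (illustrative only)
for combo in "Titanium" "Titanium Tin" "Hydrogen Mercury Tin"; do
  # $combo is deliberately unquoted so it splits into separate node names
  if is_quorate $combo; then
    echo "$combo: quorate"
  else
    echo "$combo: not quorate"
  fi
done
```

Under those assumptions, 6 votes in total give a quorum of 4, so Titanium plus any one other node is quorate, while Titanium alone (3) or the other three nodes together (3) are not. The figures the running cluster actually uses are shown by `pvecm status`.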

At the moment, even with node 2 (Mercury) powered on, the cluster can't see it.

As far as I can see, the hosts files on the nodes are correct.

The only difference I did find was that the corosync.conf file was out of date on Mercury. I suspect I updated the copy on node 1 (Hydrogen) while Mercury was offline (adding some extra votes to the node I want to be master when I power down the old master, node 1), and forgot to power Mercury up before doing so. After updating it to match the others, corosync.service does start, but the node still doesn't play with the others.
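Since a stale corosync.conf seems to be at the root of this, a quick way to confirm two copies really match (rather than eyeballing them) is sketched below. It assumes the copy from a healthy node has already been fetched to a local file; the `/tmp/corosync.titanium.conf` path in the comment is hypothetical. It only reads the `config_version` line from the `totem` section and runs `diff -u`.

```shell
#!/usr/bin/env bash
# Sketch: compare two copies of corosync.conf, e.g. Mercury's local file against
# a copy fetched from a healthy node beforehand, for example with:
#   scp root@172.16.70.205:/etc/corosync/corosync.conf /tmp/corosync.titanium.conf
# (the /tmp path is hypothetical)

# Print the config_version value from a corosync.conf file
config_version() {
  awk '$1 == "config_version:" { print $2 }' "$1"
}

# Show both config_version values and a unified diff; return 1 if they differ
compare_conf() {
  local a="$1" b="$2"
  echo "$a: config_version $(config_version "$a")"
  echo "$b: config_version $(config_version "$b")"
  if diff -u "$a" "$b"; then
    echo "files are identical"
  else
    echo "files differ (see diff above)"
    return 1
  fi
}
```

Usage on Mercury would be something like `compare_conf /etc/corosync/corosync.conf /tmp/corosync.titanium.conf`. Comparing `config_version` first is a cheap signal, since a node carrying an older version number is a likely sign it missed an update.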

Code:

```
root@Titanium:~# more /etc/corosync/corosync.conf
logging {
  debug: off
  to_syslog: yes
}

nodelist {
  node {
    name: Hydrogen
    nodeid: 1
    quorum_votes: 1
    ring0_addr: 172.16.70.202
  }
  node {
    name: Mercury
    nodeid: 2
    quorum_votes: 1
    ring0_addr: 172.16.70.203
  }
  node {
    name: Tin
    nodeid: 3
    quorum_votes: 1
    ring0_addr: 172.16.70.204
  }
  node {
    name: Titanium
    nodeid: 4
    quorum_votes: 3
    ring0_addr: 172.16.70.205
  }
}

quorum {
  provider: corosync_votequorum
}

totem {
  cluster_name: Elemental
  config_version: 9
  interface {
    linknumber: 0
  }
  ip_version: ipv4-6
  link_mode: passive
  secauth: on
  version: 2
}

root@Titanium:~#
```

Code:

```
root@Mercury:~# more /etc/corosync/corosync.conf
logging {
  debug: off
  to_syslog: yes
}

nodelist {
  node {
    name: Hydrogen
    nodeid: 1
    quorum_votes: 1
    ring0_addr: 172.16.70.202
  }
  node {
    name: Mercury
    nodeid: 2
    quorum_votes: 1
    ring0_addr: 172.16.70.203
  }
  node {
    name: Tin
    nodeid: 3
    quorum_votes: 1
    ring0_addr: 172.16.70.204
  }
  node {
    name: Titanium
    nodeid: 4
    quorum_votes: 3
    ring0_addr: 172.16.70.205
  }
}

quorum {
  provider: corosync_votequorum
}

totem {
  cluster_name: Elemental
  config_version: 9
  interface {
    linknumber: 0
  }
  ip_version: ipv4-6
  link_mode: passive
  secauth: on
  version: 2
}

root@Mercury:~#
```

Here are some outputs which I found while looking at other people's threads with cluster issues, which hopefully provide some clues. Nothing is leaping out at me, hence seeking some expert advice.

Code:

```
root@Mercury:~# systemctl status pve-cluster.service
● pve-cluster.service - The Proxmox VE cluster filesystem
     Loaded: loaded (/lib/systemd/system/pve-cluster.service; enabled; vendor preset: enabled)
     Active: failed (Result: exit-code) since Sun 2022-12-04 14:54:56 GMT; 1h 43min ago
    Process: 1558 ExecStart=/usr/bin/pmxcfs (code=exited, status=255/EXCEPTION)
        CPU: 11ms

Dec 04 14:54:56 Mercury systemd[1]: pve-cluster.service: Scheduled restart job, restart counter is at 5.
Dec 04 14:54:56 Mercury systemd[1]: Stopped The Proxmox VE cluster filesystem.
Dec 04 14:54:56 Mercury systemd[1]: pve-cluster.service: Start request repeated too quickly.
Dec 04 14:54:56 Mercury systemd[1]: pve-cluster.service: Failed with result 'exit-code'.
Dec 04 14:54:56 Mercury systemd[1]: Failed to start The Proxmox VE cluster filesystem.
```

Code:

```
root@Titanium:~# systemctl status pve-cluster.service
● pve-cluster.service - The Proxmox VE cluster filesystem
     Loaded: loaded (/lib/systemd/system/pve-cluster.service; enabled; vendor preset: enabled)
     Active: active (running) since Thu 2022-12-01 23:09:52 GMT; 2 days ago
    Process: 9949 ExecStart=/usr/bin/pmxcfs (code=exited, status=0/SUCCESS)
   Main PID: 9965 (pmxcfs)
      Tasks: 7 (limit: 309308)
     Memory: 68.5M
        CPU: 5min 32.992s
     CGroup: /system.slice/pve-cluster.service
             └─9965 /usr/bin/pmxcfs

Dec 04 14:46:26 Titanium pmxcfs[9965]: [status] notice: received log
Dec 04 14:46:52 Titanium pmxcfs[9965]: [dcdb] notice: data verification successful
Dec 04 14:49:43 Titanium pmxcfs[9965]: [status] notice: received log
Dec 04 15:01:27 Titanium pmxcfs[9965]: [status] notice: received log
Dec 04 15:16:27 Titanium pmxcfs[9965]: [status] notice: received log
Dec 04 15:31:27 Titanium pmxcfs[9965]: [status] notice: received log
Dec 04 15:46:27 Titanium pmxcfs[9965]: [status] notice: received log
Dec 04 15:46:52 Titanium pmxcfs[9965]: [dcdb] notice: data verification successful
Dec 04 16:01:27 Titanium pmxcfs[9965]: [status] notice: received log
Dec 04 16:16:27 Titanium pmxcfs[9965]: [status] notice: received log
root@Titanium:~#
```

Code:

```
root@Mercury:~# journalctl -b -u pve-cluster
-- Journal begins at Mon 2021-12-06 22:00:47 GMT, ends at Sun 2022-12-04 16:29:55 GMT. --
Dec 04 14:54:54 Mercury systemd[1]: Starting The Proxmox VE cluster filesystem...
Dec 04 14:54:54 Mercury pmxcfs[1373]: fuse: mountpoint is not empty
Dec 04 14:54:54 Mercury pmxcfs[1373]: fuse: if you are sure this is safe, use the 'nonempty' mount option
Dec 04 14:54:54 Mercury pmxcfs[1373]: [main] crit: fuse_mount error: File exists
Dec 04 14:54:54 Mercury pmxcfs[1373]: [main] notice: exit proxmox configuration filesystem (-1)
Dec 04 14:54:54 Mercury pmxcfs[1373]: [main] crit: fuse_mount error: File exists
Dec 04 14:54:54 Mercury pmxcfs[1373]: [main] notice: exit proxmox configuration filesystem (-1)
Dec 04 14:54:54 Mercury systemd[1]: pve-cluster.service: Control process exited, code=exited, status=255/EXCEPTION
Dec 04 14:54:54 Mercury systemd[1]: pve-cluster.service: Failed with result 'exit-code'.
Dec 04 14:54:54 Mercury systemd[1]: Failed to start The Proxmox VE cluster filesystem.
Dec 04 14:54:54 Mercury systemd[1]: pve-cluster.service: Scheduled restart job, restart counter is at 1.
Dec 04 14:54:54 Mercury systemd[1]: Stopped The Proxmox VE cluster filesystem.
Dec 04 14:54:54 Mercury systemd[1]: Starting The Proxmox VE cluster filesystem...
Dec 04 14:54:54 Mercury pmxcfs[1553]: fuse: mountpoint is not empty
Dec 04 14:54:54 Mercury pmxcfs[1553]: fuse: if you are sure this is safe, use the 'nonempty' mount option
Dec 04 14:54:54 Mercury pmxcfs[1553]: [main] crit: fuse_mount error: File exists
Dec 04 14:54:54 Mercury pmxcfs[1553]: [main] notice: exit proxmox configuration filesystem (-1)
Dec 04 14:54:54 Mercury pmxcfs[1553]: [main] crit: fuse_mount error: File exists
Dec 04 14:54:54 Mercury pmxcfs[1553]: [main] notice: exit proxmox configuration filesystem (-1)
Dec 04 14:54:54 Mercury systemd[1]: pve-cluster.service: Control process exited, code=exited, status=255/EXCEPTION
Dec 04 14:54:54 Mercury systemd[1]: pve-cluster.service: Failed with result 'exit-code'.
Dec 04 14:54:54 Mercury systemd[1]: Failed to start The Proxmox VE cluster filesystem.
Dec 04 14:54:54 Mercury systemd[1]: pve-cluster.service: Scheduled restart job, restart counter is at 2.
Dec 04 14:54:54 Mercury systemd[1]: Stopped The Proxmox VE cluster filesystem.
Dec 04 14:54:54 Mercury systemd[1]: Starting The Proxmox VE cluster filesystem...
Dec 04 14:54:54 Mercury pmxcfs[1554]: fuse: mountpoint is not empty
Dec 04 14:54:54 Mercury pmxcfs[1554]: fuse: if you are sure this is safe, use the 'nonempty' mount option
Dec 04 14:54:54 Mercury pmxcfs[1554]: [main] crit: fuse_mount error: File exists
Dec 04 14:54:54 Mercury pmxcfs[1554]: [main] notice: exit proxmox configuration filesystem (-1)
Dec 04 14:54:54 Mercury pmxcfs[1554]: [main] crit: fuse_mount error: File exists
Dec 04 14:54:54 Mercury pmxcfs[1554]: [main] notice: exit proxmox configuration filesystem (-1)
Dec 04 14:54:54 Mercury systemd[1]: pve-cluster.service: Control process exited, code=exited, status=255/EXCEPTION
Dec 04 14:54:54 Mercury systemd[1]: pve-cluster.service: Failed with result 'exit-code'.
Dec 04 14:54:54 Mercury systemd[1]: Failed to start The Proxmox VE cluster filesystem.
Dec 04 14:54:55 Mercury systemd[1]: pve-cluster.service: Scheduled restart job, restart counter is at 3.
Dec 04 14:54:55 Mercury systemd[1]: Stopped The Proxmox VE cluster filesystem.
Dec 04 14:54:55 Mercury systemd[1]: Starting The Proxmox VE cluster filesystem...
Dec 04 14:54:55 Mercury pmxcfs[1557]: fuse: mountpoint is not empty
Dec 04 14:54:55 Mercury pmxcfs[1557]: fuse: if you are sure this is safe, use the 'nonempty' mount option
Dec 04 14:54:55 Mercury pmxcfs[1557]: [main] crit: fuse_mount error: File exists
Dec 04 14:54:55 Mercury pmxcfs[1557]: [main] crit: fuse_mount error: File exists
Dec 04 14:54:55 Mercury pmxcfs[1557]: [main] notice: exit proxmox configuration filesystem (-1)
Dec 04 14:54:55 Mercury pmxcfs[1557]: [main] notice: exit proxmox configuration filesystem (-1)
Dec 04 14:54:55 Mercury systemd[1]: pve-cluster.service: Control process exited, code=exited, status=255/EXCEPTION
Dec 04 14:54:55 Mercury systemd[1]: pve-cluster.service: Failed with result 'exit-code'.
Dec 04 14:54:55 Mercury systemd[1]: Failed to start The Proxmox VE cluster filesystem.
Dec 04 14:54:55 Mercury systemd[1]: pve-cluster.service: Scheduled restart job, restart counter is at 4.
```

Please let me know what other logs/outputs I can provide. Hopefully I can avoid having to reinstall/re-add the node from scratch, and I promise I'll be more careful in future.
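The repeated `fuse: mountpoint is not empty` lines in Mercury's journal say that pmxcfs refuses to mount because its mountpoint already contains something. A minimal sketch to confirm that on Mercury is below. It assumes the mountpoint in question is `/etc/pve` (where pmxcfs mounts on Proxmox VE) and uses `mountpoint` from util-linux; `mountpoint_status` is a hypothetical helper name. It only reports and does not move or delete anything.

```shell
#!/usr/bin/env bash
# Sketch: report whether a pmxcfs mountpoint is mounted, and if not, whether
# it already contains entries (which would explain "mountpoint is not empty").
# Assumption: the directory to check defaults to /etc/pve.

mountpoint_status() {
  local dir="${1:-/etc/pve}"
  if mountpoint -q "$dir" 2>/dev/null; then
    echo "$dir is mounted"
  elif [ -n "$(ls -A "$dir" 2>/dev/null)" ]; then
    echo "$dir is NOT mounted and is not empty:"
    ls -lA "$dir"           # list what is sitting under the mountpoint
    return 1
  else
    echo "$dir is NOT mounted and is empty"
  fi
}
```

Calling `mountpoint_status /etc/pve` on Mercury while pve-cluster is stopped would show whether stray files are sitting under `/etc/pve`, which is what the fuse message points to.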
