Hi all,

Weird one here. Or at least, something I haven't encountered before.

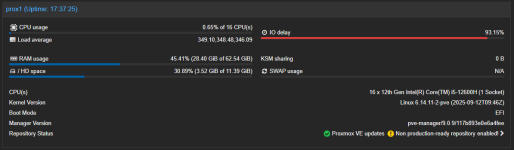

3-node cluster. Node 1 shows as unknown, and so do all of its guests:

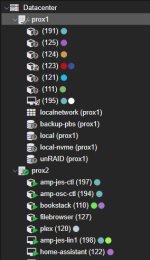

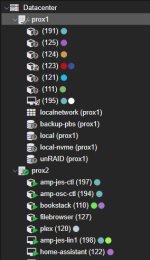

`pvecm status` shows all nodes are there, and I can interact with node 1 from the GUI fine, but none of the guests are actually running.

Something that may or may not be related: node 1 doesn't seem to register the custom colours for the guest tags, which might indicate it's not reading datacenter.cfg correctly?
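For context, the tag colours are set cluster-wide through the `tag-style` option in `/etc/pve/datacenter.cfg`. A minimal sketch of what such an entry looks like, assuming a made-up tag name and made-up colours (this is not my actual config):

```
# /etc/pve/datacenter.cfg
# "production" is a hypothetical tag; ff0000 is the background colour, ffffff the text colour
tag-style: color-map=production:ff0000:ffffff
```

Since the file lives under /etc/pve, every node should see the same contents, which is why the missing colours on node 1 look odd to me.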

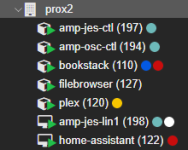

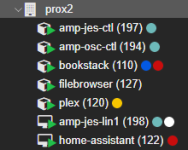

They should look like this (from node 2):

I notice there that node 2 doesn't have a status indicator on the node itself. I'm not sure what this signifies.

Any help here would be great!
