Hi,

Proxmox Version: 8.4.14

Dealing with a strange issue on a new install of the latest Alpine Linux VM. Here is what I did:

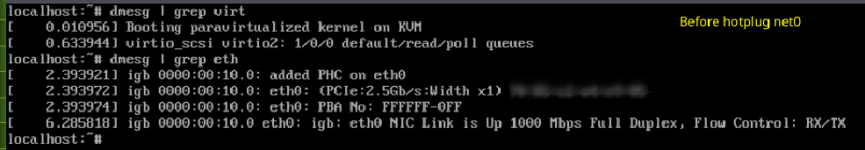

Passthrough enp2s0 (02:00.0 Ethernet controller: Intel Corporation I211 Gigabit Network Connection (rev 03)). OK.

eth0 static IP (192.168.1.5) survives reboots, CAN ping from another computer. OK.

$ poweroff

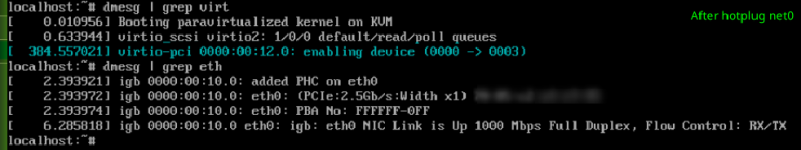

Add new NIC (vmbr0, VirtIO). VM conf shows: `net0: virtio=AC:2A:12:87:A6:26,bridge=vmbr0,firewall=1`. OK.

< Start >

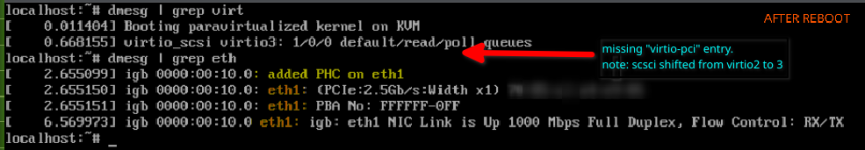

Now, eth0 doesn't work. CANNOT ping eth0 IP (192.168.1.5) from another computer. FAIL

Gateway IP (192.168.1.1) is valid and other machines can ping it. Pinging the gateway from eth0 gets no reply. FAIL

$ poweroff

Remove net0 virtIO nic

< Start >

eth0 works fine again!

Also, in another iteration, I hot-plugged the VirtIO NIC (paravirtualized or other) while the VM was running and configured an IP on what is now eth1.

eth0, eth1 both work fine and ping/respond OK. Reboot, all fails again. eth0 and eth1 won't ping / respond. Shutdown, remove VirtIO nic, eth0 starts working again!
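
One check that might narrow this down, on the unconfirmed assumption that the guest's interface names can shift when a NIC is present at boot: list each interface with its MAC address and kernel driver from inside the VM, once before and once after adding net0. The sketch below only reads standard sysfs paths; the function name `list_nics` is made up for illustration.

```shell
#!/bin/sh
# Hypothetical helper: print "name mac driver" for every NIC except loopback.
# Reads /sys/class/net by default; another directory can be passed as $1.
list_nics() {
    net_dir="${1:-/sys/class/net}"
    for dev in "$net_dir"/*; do
        # Skip entries without a MAC address file (also covers an empty glob).
        [ -e "$dev/address" ] || continue
        name=$(basename "$dev")
        [ "$name" = "lo" ] && continue
        mac=$(cat "$dev/address")
        # device/driver is a symlink pointing at the bound kernel driver.
        if [ -L "$dev/device/driver" ]; then
            driver=$(basename "$(readlink "$dev/device/driver")")
        else
            driver="unknown"
        fi
        printf '%s %s %s\n' "$name" "$mac" "$driver"
    done
}

# Example: call with no argument to list the NICs of the running system.
# list_nics
```

The I211 is normally bound to the `igb` driver and a VirtIO NIC to `virtio_net`, so comparing the two listings would show whether the static 192.168.1.5 config is still attached to the passthrough card or has landed on the new NIC (MAC AC:2A:12:87:A6:26).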

Tried multiple iterations/installs and narrowed the process to above.

Adding a NIC (just adding it, with no config; it doesn't matter whether it is VirtIO, E1000, E1000E, RTL8139...) breaks all networking on this VM only.

Other VMs on this bridge (vmbr0) work perfectly fine!

Also, I have 2 other NICs (PCI slot) passed through to a pfSense VM and they work fine.

What did I miss?
