Hi all,

I am relatively new to Proxmox and am looking to set up a four-node test cluster. Coming from VMware, I am trying to figure out the best way to set up the NIC configuration. Any guidance would be greatly appreciated.

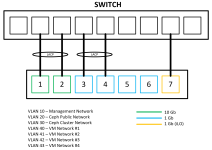

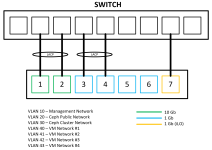

Each node has four 1 Gbps ports and two 10 Gbps ports. As shown in the diagrams above, my plan is to put the two 10 Gbps ports in a bond (bond0) and run both Ceph networks (public and cluster) over it. I chose a bond rather than one dedicated NIC per network for redundancy, so that losing one port temporarily does not take Ceph down. Next, I would bond two of the 1 Gbps ports (bond1) for the management and VM networks. I am not using all four 1 Gbps ports because I want to avoid tying up too many switch ports with the servers.
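To make the plan concrete, here is a rough sketch of what `/etc/network/interfaces` might look like on one node. The interface names (`eno1`, `eno2`, `enp1s0f0`, `enp1s0f1`), the VLAN IDs 100 and 101 used to separate Ceph public and cluster traffic, and all IP addresses are made-up placeholders. I am also assuming `active-backup` bond mode, since it gives failover without any LACP configuration on the switch, and that the switch ports for the 10 Gbps links trunk VLANs 100 and 101.

```text
auto lo
iface lo inet loopback

# Hypothetical NIC names: adjust to match the actual hardware
iface eno1 inet manual
iface eno2 inet manual
iface enp1s0f0 inet manual
iface enp1s0f1 inet manual

# bond0: both 10 Gbps ports, failover only (active-backup)
auto bond0
iface bond0 inet manual
    bond-slaves enp1s0f0 enp1s0f1
    bond-miimon 100
    bond-mode active-backup
    bond-primary enp1s0f0

# Ceph public network (hypothetical VLAN 100 and subnet)
auto bond0.100
iface bond0.100 inet static
    address 10.10.100.11/24

# Ceph cluster network (hypothetical VLAN 101 and subnet)
auto bond0.101
iface bond0.101 inet static
    address 10.10.101.11/24

# bond1: two of the 1 Gbps ports for management and VM traffic
auto bond1
iface bond1 inet manual
    bond-slaves eno1 eno2
    bond-miimon 100
    bond-mode active-backup

# VLAN-aware bridge for management IP and guest VMs (hypothetical addresses)
auto vmbr0
iface vmbr0 inet static
    address 192.168.1.11/24
    gateway 192.168.1.1
    bridge-ports bond1
    bridge-stp off
    bridge-fd 0
    bridge-vlan-aware yes
    bridge-vids 2-4094
```

With `active-backup`, only one link in each bond carries traffic at a time, so the bond adds redundancy but not bandwidth. `802.3ad` (LACP) could use both links, but it requires matching configuration on the switch.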

Is this a good approach, or is there a better one I should take? This would be a lab environment at work, used primarily for testing and occasionally for demonstrations and training sessions.
