```
INFO: include disk 'ide0' 'local-lvm:vm-103-disk-0' 45G
INFO: include disk 'ide1' 'local-lvm:vm-103-disk-1' 400G
INFO: include disk 'efidisk0' 'local-lvm:vm-103-disk-2' 4M
```

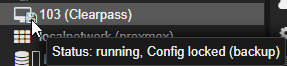

```
INFO: stopping virtual guest
INFO: creating vzdump archive '/mnt/pve/syno1821/dump/vzdump-qemu-103-2025_07_28-19_10_11.vma.zst'
INFO: starting kvm to execute backup task
INFO: started backup task '063e77d3-c61e-45f7-bac9-b18f311c9a0d'
INFO: resuming VM again after 186 seconds
INFO: 0% (2.2 GiB of 445.0 GiB) in 3s, read: 763.0 MiB/s, write: 682.1 MiB/s
INFO: 1% (4.5 GiB of 445.0 GiB) in 12s, read: 256.0 MiB/s, write: 239.4 MiB/s
```

And then nothing more happens, forever.

Trying a backup of another VM also fails, just further along (percentage-wise).

The NFS server is a simple Synology with wildcard permissions and NFS v4.1.

The system log shows this:

```
Jul 28 21:12:39 pve pvestatd[1256]: got timeout
Jul 28 21:12:39 pve pvestatd[1256]: unable to activate storage 'syno1821' - directory '/mnt/pve/syno1821' does not exist or is unreachable
Jul 28 21:12:39 pve pvestatd[1256]: status update time (10.177 seconds)
Jul 28 21:12:39 pve pvedaemon[1284]: VM 103 qmp command failed - VM 103 qmp command 'query-proxmox-support' failed - unable to connect to VM 103 qmp socket - timeout after 51 retries
```

What could be causing this?
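Since `pvestatd` reports `/mnt/pve/syno1821` as unreachable, one way to check whether the NFS mount itself is hanging is to `stat` the mount point with a deadline. The sketch below is a hypothetical helper (the name `mount_responds` and the 5-second default are my own choices, not anything from Proxmox), assuming a Linux host with the `stat` command available. It runs `stat` in a child process so that a blocked NFS call cannot hang the checking script as well.

```python
import subprocess


def mount_responds(path: str, timeout_s: float = 5.0) -> bool:
    """Return True if `stat path` succeeds within timeout_s seconds.

    Hypothetical diagnostic helper. On a hard-mounted NFS share whose server
    has stopped answering, stat() can block for a long time, so the call is
    made in a separate process that we simply give up on after the deadline.
    """
    proc = subprocess.Popen(
        ["stat", path],
        stdout=subprocess.DEVNULL,
        stderr=subprocess.DEVNULL,
    )
    try:
        # Exit code 0 means stat found the path and returned normally.
        return proc.wait(timeout=timeout_s) == 0
    except subprocess.TimeoutExpired:
        # Deliberately do not wait() after kill(): a child stuck on a dead
        # NFS server may not exit promptly, and we do not want to block here.
        proc.kill()
        return False
```

For example, `mount_responds("/mnt/pve/syno1821")` returning `False` while a backup is stuck would suggest the problem lies with the NFS connection rather than with the VM itself.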
