Hi,

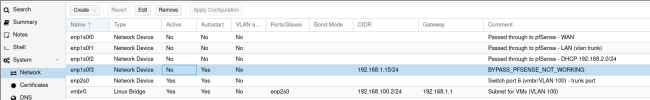

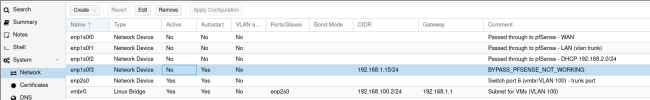

I've been running Proxmox with a virtualized pfSense for around 1.5 years. Now I'm migrating to new hardware and have started reworking the configuration. Out of the box, Proxmox works fine with a static IP, AFAIR. What I usually do is quickly install pfSense as a VM and use it as the firewall/router. But once in a while I screw things up and lock myself out (mostly in the beginning). In any case, I would really like to be able to SSH into my Proxmox host even if the pfSense VM isn't running or is malfunctioning. The screenshots above show the configuration, which is mostly working.

However, the last of the 4 Intel NIC ports (enp1s0f3) isn't working as I expect. As you can see, I pass the first 3 physical ports through to pfSense. I also have a built-in Realtek port, which I have successfully bridged to vmbr0; all of that is good. Everything except the blue line above works as I expect. What I expect is this: if I plug a network cable into port 4 (enp1s0f3) and connect the other end to a laptop with Wi-Fi disabled, I might have to configure the laptop's IP address and netmask statically, but I should then be able to SSH or log in to Proxmox at 192.168.1.15. But this isn't working. In fact, even though "Autostart" is "Yes", the network device never becomes "Active" (it just says "No"), and the port's lights aren't blinking. I didn't expect that, as this port should have direct access to the Proxmox host.
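To make the test concrete, here is roughly what I mean on the laptop side, as a sketch. The laptop interface name `eth0` and the address 192.168.1.20/24 are placeholders I picked for illustration; only 192.168.1.15 comes from my configuration. By default the script just prints the commands; with `RUN=1` (as root, on a Linux laptop) it runs them.

```shell
#!/bin/sh
# Laptop-side sketch for testing the direct cable to port 4 (enp1s0f3).
# ASSUMPTIONS: LAPTOP_IF and LAPTOP_IP are placeholders, not values from my setup.
LAPTOP_IF="${LAPTOP_IF:-eth0}"
LAPTOP_IP="${LAPTOP_IP:-192.168.1.20/24}"
PROXMOX_IP="192.168.1.15"   # address configured on enp1s0f3

# Print each command unless RUN=1 is set, in which case execute it.
run() {
    if [ "${RUN:-0}" = "1" ]; then
        "$@"
    else
        echo "$*"
    fi
}

direct_link_test() {
    run ip addr add "$LAPTOP_IP" dev "$LAPTOP_IF"   # static address in the same /24
    run ip link set "$LAPTOP_IF" up                 # bring the wired port up
    run ping -c 3 "$PROXMOX_IP"                     # basic reachability check
    run ssh "root@$PROXMOX_IP"                      # what I ultimately want to work
}

direct_link_test
```

With a working link I would expect the ping to be answered before even trying SSH; in my case the link lights never come on, so it doesn't get that far.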

What am I doing wrong? Below is some additional info. It's probably just a really small thing. Please help/advise, thanks!

Code:

    # ip addr
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: enp2s0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master vmbr0 state UP group default qlen 1000
        link/ether 7c:d3:0a:1a:f4:d5 brd ff:ff:ff:ff:ff:ff
    7: vmbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
        link/ether 7c:d3:0a:1a:f4:d5 brd ff:ff:ff:ff:ff:ff
        inet 192.168.100.2/24 scope global vmbr0
           valid_lft forever preferred_lft forever
        inet6 fe80::7ed3:aff:fe1a:f4d5/64 scope link
           valid_lft forever preferred_lft forever
    8: tap101i0: <BROADCAST,MULTICAST,PROMISC,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master vmbr0 state UNKNOWN group default qlen 1000
        link/ether 96:3d:1a:2c:96:6a brd ff:ff:ff:ff:ff:ff
    312: veth103i0@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master vmbr0 state UP group default qlen 1000
        link/ether fe:42:de:0c:2b:14 brd ff:ff:ff:ff:ff:ff link-netnsid 0

    # ip l show
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    2: enp2s0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master vmbr0 state UP mode DEFAULT group default qlen 1000
        link/ether 7c:d3:0a:1a:f4:d5 brd ff:ff:ff:ff:ff:ff
    7: vmbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default qlen 1000
        link/ether 7c:d3:0a:1a:f4:d5 brd ff:ff:ff:ff:ff:ff
    8: tap101i0: <BROADCAST,MULTICAST,PROMISC,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master vmbr0 state UNKNOWN mode DEFAULT group default qlen 1000
        link/ether 96:3d:1a:2c:96:6a brd ff:ff:ff:ff:ff:ff
    311: veth103i0@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master vmbr0 state UP mode DEFAULT group default qlen 1000
        link/ether fe:27:78:e3:e5:ae brd ff:ff:ff:ff:ff:ff link-netnsid 0

/etc/network/interfaces:

    auto lo
    iface lo inet loopback

    auto enp2s0
    iface enp2s0 inet manual
    #Switch port 6 (vmbr/VLAN 100) - trunk port

    iface enp1s0f0 inet manual
    #Passed through to pfSense - WAN

    iface enp1s0f1 inet manual
    #Passed through to pfSense - LAN (vlan trunk)

    iface enp1s0f2 inet manual
    #Passed through to pfSense - DHCP 192.168.2.0/24

    auto enp1s0f3
    iface enp1s0f3 inet static
        address 192.168.1.15/24
    #BYPASS_PFSENSE_NOT_WORKING

    auto vmbr0
    iface vmbr0 inet static
        address 192.168.100.2/24
        gateway 192.168.1.1
        bridge-ports enp2s0
        bridge-stp off
        bridge-fd 0
        post-up /sbin/ethtool -s enp2s0 wol g
    #Subnet for VMs (VLAN 100)
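
One thing I notice in the `ip addr` output above is that none of the enp1s0f* ports are listed at all, including enp1s0f3. I'm not sure what that means, so here is a small diagnostic sketch I can run on the Proxmox host. The PCI address 01:00.3 is an assumption inferred from the interface name (bus 1, slot 0, function 3), and `SYSFS_NET` is only overridable so the helper can be tried against a scratch directory.

```shell
#!/bin/sh
# Diagnostic sketch for the Proxmox host; on a real host leave SYSFS_NET unset.
SYSFS_NET="${SYSFS_NET:-/sys/class/net}"

# Report whether the kernel currently exposes a network interface by this name.
iface_state() {
    if [ -e "$SYSFS_NET/$1" ]; then
        echo "present"
    else
        echo "missing"
    fi
}

diagnose() {
    iface="$1"
    pci_addr="$2"
    echo "$iface: $(iface_state "$iface")"
    if [ "$(iface_state "$iface")" = "present" ]; then
        # Interface exists: show its flags (NO-CARRIER would mean no link detected).
        ip link show dev "$iface"
    elif command -v lspci >/dev/null 2>&1; then
        # Interface missing: show which kernel driver, if any, owns the PCI function.
        lspci -nnk -s "$pci_addr" || echo "lspci could not query $pci_addr"
    fi
}

# ASSUMPTION: 01:00.3 is derived from the name enp1s0f3, not read from my system.
diagnose enp1s0f3 01:00.3
```

If the interface is reported as missing, the "Kernel driver in use" line from `lspci -k` should show what has claimed the port. My guess, and it is only a guess, is that a value of `vfio-pci` there would mean port 4 is reserved for passthrough along with the other three.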