Hello,

I've been fighting some strange networking issues for about a week now. My current setup is as follows:

3x brand-new ProxMox VE 7.3-6 hosts installed on Dell R6515 servers running kernel

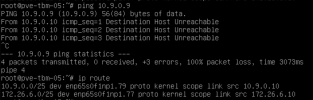

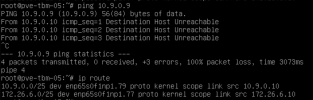

Our management network is on VLAN 79, and our CEPH network is on VLAN 77. With the current above configuration, upon reboot, our hypervisors and VMs have no connectivity whatsoever, including to other hosts in the same layer-2 network (incomplete ARP entries are present, and all pings show "Destination host unreachable"), despite "ip a" showing all interfaces as UP:

Running tcpdump on both the enp65s0f1np1.79 interface as well as vmbr1 only shows ARP traffic, nothing else. The only way to fix this issue is to comment out both

I have also attempted assigning the management network 79 to

I should mention this issue is only occuring on our ProxMox nodes that have Mellanox ConnectX-5s installed, we have other hosts utilizing Broadcom NICs that don't exhibit this same behavior. I already ensured our ConnectX5 NICs have been updated to the latest available firmware.

I am running out of ideas at this point unfortunately, short of swapping out our physical NIC hardware. Has anyone experienced similar issues in the past?

I've been fighting an issue for about a week now with some strange networking-related issues. My current setup is as follows:

3x brand-new ProxMox VE 7.3-6 hosts installed on Dell R6515 servers running kernel

5.15.85-1-pve. My /etc/network/interfaces file looks like the following:Our management network is on VLAN 79, and our CEPH network is on VLAN 77. With the current above configuration, upon reboot, our hypervisors and VMs have no connectivity whatsoever, including to other hosts in the same layer-2 network (incomplete ARP entries are present, and all pings show "Destination host unreachable"), despite "ip a" showing all interfaces as UP:
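To check the incomplete-ARP symptom from a script rather than by eye, something like the following could be run on an affected node right after a reboot. It is a minimal sketch, assuming the standard Linux /proc/net/arp layout (IP address, HW type, Flags, HW address, Mask, Device), in which an entry whose Flags value lacks the ATF_COM bit (0x2) has not been resolved to a MAC address. The helper names `parse_proc_net_arp` and `incomplete_entries` are hypothetical, not part of Proxmox or any existing tool.

```python
"""Sketch: list unresolved (incomplete) ARP entries from /proc/net/arp.

Assumption: the standard Linux /proc/net/arp column layout
(IP address, HW type, Flags, HW address, Mask, Device).
"""
import os
from typing import List, NamedTuple, Optional

ATF_COM = 0x02  # "entry completed" flag, as defined in <net/if_arp.h>


class ArpEntry(NamedTuple):
    ip: str
    flags: int
    hw_addr: str
    device: str


def parse_proc_net_arp(text: str) -> List[ArpEntry]:
    """Parse the text of /proc/net/arp into ArpEntry records."""
    entries = []
    for line in text.splitlines()[1:]:  # first line is the column header
        fields = line.split()
        if len(fields) < 6:
            continue  # skip blank or malformed lines
        ip, _hw_type, flags, hw_addr, _mask, device = fields[:6]
        entries.append(ArpEntry(ip, int(flags, 16), hw_addr, device))
    return entries


def incomplete_entries(entries: List[ArpEntry],
                       device: Optional[str] = None) -> List[ArpEntry]:
    """Return entries without the ATF_COM bit, optionally for one device only."""
    return [
        e for e in entries
        if not e.flags & ATF_COM and (device is None or e.device == device)
    ]


if __name__ == "__main__":
    # Only meaningful on Linux, where /proc/net/arp exists.
    if os.path.exists("/proc/net/arp"):
        with open("/proc/net/arp") as f:
            for entry in incomplete_entries(parse_proc_net_arp(f.read())):
                print(f"incomplete: {entry.ip} on {entry.device}")
```

Passing `device="vmbr1"` narrows the output to the bridge carrying the management network; if the gateway and neighboring hosts show up there while the bridge reports UP, that matches the behavior described above.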

Running tcpdump on both the enp65s0f1np1.79 interface and vmbr1 shows only ARP traffic, nothing else. The only way to fix this is to comment out both vmbr interfaces in /etc/network/interfaces, reboot the node entirely (systemctl restart networking alone has no effect), then uncomment the vmbr interfaces and run systemctl restart networking. After all these steps, everything is up and running again without issue.

I have also tried assigning the management network (VLAN 79) to vmbr1.79 instead of using the raw bridge device, but the exact same issue occurs.

I should mention that this issue only occurs on our Proxmox nodes that have Mellanox ConnectX-5 NICs installed; we have other hosts using Broadcom NICs that don't exhibit this behavior. I have already updated our ConnectX-5 NICs to the latest available firmware.
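Since the problem tracks the NIC model, it may help to confirm that every affected node reports the same driver and firmware version. Below is a minimal sketch, assuming the usual "key: value" output of `ethtool -i <iface>`; `parse_ethtool_info` and `driver_info` are hypothetical helper names, and `driver_info` requires ethtool to be installed on the node.

```python
"""Sketch: collect NIC driver/firmware details from `ethtool -i` for comparison.

Assumption: ethtool prints one "key: value" pair per line.
"""
import subprocess
import sys
from typing import Dict


def parse_ethtool_info(text: str) -> Dict[str, str]:
    """Turn 'key: value' lines from `ethtool -i` into a dict.

    Splits on the first colon only, so values such as a PCI
    bus address (which contain colons) are kept intact.
    """
    info = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            info[key.strip()] = value.strip()
    return info


def driver_info(iface: str) -> Dict[str, str]:
    """Run `ethtool -i` on a local interface and parse the result."""
    result = subprocess.run(
        ["ethtool", "-i", iface], capture_output=True, text=True, check=True
    )
    return parse_ethtool_info(result.stdout)


if __name__ == "__main__":
    # Interface names are passed on the command line, e.g. enp65s0f1np1.
    for iface in sys.argv[1:]:
        info = driver_info(iface)
        print(iface, info.get("driver"), info.get("firmware-version"))
```

Running it with the physical port names on each of the three hosts (and on one of the Broadcom hosts for contrast) gives a quick side-by-side of the driver and firmware each node is actually using.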

Unfortunately, I'm running out of ideas at this point, short of swapping out our physical NIC hardware. Has anyone experienced similar issues in the past?