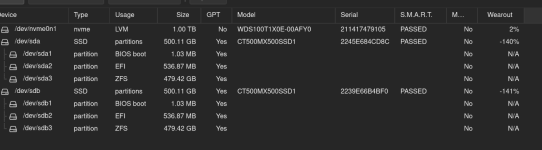

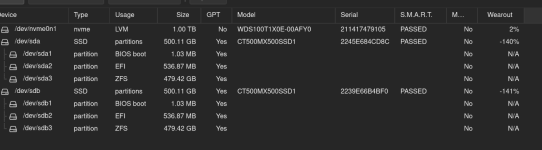

One of our servers uses two Crucial MX500 SSDs in a ZFS RAID1 (mirror) setup as boot drives. By chance, I checked the server's SMART values in the UI, and it shows a whopping wearout of -140%. I'm not sure what to make of this.

The drives have about 20K power-on hours and about 55TB written so far, so given their 180TBW endurance rating they should be nowhere near worn out.
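As a rough cross-check, the sketch below turns the raw counters from the `smartctl` output in this post into terabytes. It rests on assumptions that are not confirmed by the post: 512-byte logical sectors for `Total_LBAs_Written` (attribute 246), a TBW rating expressed in decimal terabytes, and the commonly used but unofficial write-amplification estimate (`Host_Program_Page_Count` + `FTL_Program_Page_Count`) / `Host_Program_Page_Count` (attributes 247 and 248).

```python
# Rough endurance arithmetic for the smartctl values quoted in this post.
# ASSUMPTIONS (not confirmed by the post or by Crucial documentation):
#   - attribute 246 counts 512-byte logical sectors
#   - the 180TBW rating uses decimal terabytes (10**12 bytes)
#   - write amplification ~= (attr 247 + attr 248) / attr 247

SECTOR_BYTES = 512   # assumed logical sector size
RATED_TBW = 180      # endurance rating quoted in the post

total_lbas_written = 116_458_851_999  # attribute 246, raw value
host_pages = 1_084_277_588            # attribute 247, raw value
ftl_pages = 8_546_353_571             # attribute 248, raw value


def host_writes_tb(lbas: int, sector_bytes: int = SECTOR_BYTES) -> float:
    """Host writes in decimal terabytes."""
    return lbas * sector_bytes / 10**12


def host_writes_tib(lbas: int, sector_bytes: int = SECTOR_BYTES) -> float:
    """Host writes in binary tebibytes."""
    return lbas * sector_bytes / 2**40


def write_amplification(host: int, ftl: int) -> float:
    """Total page programs per host page program (community convention)."""
    return (host + ftl) / host


tb = host_writes_tb(total_lbas_written)
waf = write_amplification(host_pages, ftl_pages)

print(f"host writes: {tb:.1f} TB ({host_writes_tib(total_lbas_written):.1f} TiB)")
print(f"share of {RATED_TBW} TBW rating: {tb / RATED_TBW:.0%}")
print(f"estimated write amplification: {waf:.2f}")
print(f"estimated NAND-level writes: {tb * waf:.0f} TB")
```

Under these assumptions the host writes land near 54 TiB, in line with the roughly 55TB mentioned above and well under 180TBW, while the estimated NAND-level writes come out several times larger; whether the FTL counter really means that is itself an assumption.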

No self-test errors have been logged either, so I'm wondering what's going on.

In the shell, `smartctl -a` doesn't show anything extraordinary except attribute 202, `Percent_Lifetime_Remain`, which happens to be 116%.

```
$ smartctl -a /dev/sda
[...]
SMART Attributes Data Structure revision number: 16
Vendor Specific SMART Attributes with Thresholds:
ID# ATTRIBUTE_NAME          FLAG     VALUE WORST THRESH TYPE      UPDATED  WHEN_FAILED RAW_VALUE
  1 Raw_Read_Error_Rate     0x002f   100   100   000    Pre-fail  Always       -       0
  5 Reallocate_NAND_Blk_Cnt 0x0032   100   100   010    Old_age   Always       -       0
  9 Power_On_Hours          0x0032   100   100   000    Old_age   Always       -       19715
 12 Power_Cycle_Count       0x0032   100   100   000    Old_age   Always       -       20
171 Program_Fail_Count      0x0032   100   100   000    Old_age   Always       -       0
172 Erase_Fail_Count        0x0032   100   100   000    Old_age   Always       -       0
173 Ave_Block-Erase_Count   0x0032   240   240   000    Old_age   Always       -       1161
174 Unexpect_Power_Loss_Ct  0x0032   100   100   000    Old_age   Always       -       2
180 Unused_Reserve_NAND_Blk 0x0033   000   000   000    Pre-fail  Always       -       30
183 SATA_Interfac_Downshift 0x0032   100   100   000    Old_age   Always       -       0
184 Error_Correction_Count  0x0032   100   100   000    Old_age   Always       -       0
187 Reported_Uncorrect      0x0032   100   100   000    Old_age   Always       -       0
194 Temperature_Celsius     0x0022   078   050   000    Old_age   Always       -       22 (Min/Max 0/50)
196 Reallocated_Event_Count 0x0032   100   100   000    Old_age   Always       -       0
197 Current_Pending_ECC_Cnt 0x0032   100   100   000    Old_age   Always       -       0
198 Offline_Uncorrectable   0x0030   100   100   000    Old_age   Offline      -       0
199 UDMA_CRC_Error_Count    0x0032   100   100   000    Old_age   Always       -       0
202 Percent_Lifetime_Remain 0x0030   240   240   001    Old_age   Offline      -       116
206 Write_Error_Rate        0x000e   100   100   000    Old_age   Always       -       0
210 Success_RAIN_Recov_Cnt  0x0032   100   100   000    Old_age   Always       -       0
246 Total_LBAs_Written      0x0032   100   100   000    Old_age   Always       -       116458851999
247 Host_Program_Page_Count 0x0032   100   100   000    Old_age   Always       -       1084277588
248 FTL_Program_Page_Count  0x0032   100   100   000    Old_age   Always       -       8546353571
```
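One possible way the numbers above could fit together, offered purely as a hypothesis: suppose the raw value of attribute 202 (116) is actually the percentage of rated life *used* despite the attribute's name, the firmware stores the normalized VALUE as 100 minus that raw value in an unsigned 8-bit field, and the UI reports wearout as 100 minus the normalized VALUE. None of these three steps is confirmed by the post; the sketch only checks whether they reproduce the observed figures.

```python
# Hypothetical model of how attribute 202 could produce the UI's -140%.
# ASSUMPTIONS (unverified):
#   1. raw value of attribute 202 = percent of rated life used
#   2. normalized VALUE = 100 - raw, truncated to an unsigned byte
#   3. UI wearout = 100 - normalized VALUE


def normalized_value(raw_percent_used: int) -> int:
    """Normalized VALUE under assumption 2 (wraps below zero to 0..255)."""
    return (100 - raw_percent_used) & 0xFF


def ui_wearout(value: int) -> int:
    """Wearout percentage under assumption 3."""
    return 100 - value


raw_202 = 116  # attribute 202 raw value from the smartctl output
value_202 = normalized_value(raw_202)
print(f"normalized VALUE: {value_202}, UI wearout: {ui_wearout(value_202)}%")
```

If the model holds, the single raw value of 116 accounts for both the 240 in the VALUE column and the -140% in the UI: 100 - 116 = -16 wraps to 240 in an unsigned byte, and 100 - 240 = -140.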
