Howdy All,

I recently upgraded from Proxmox VE 8 to 9 following the official guide and had no issues whatsoever until today. This is my test environment, which I upgrade before running the upgrade on my main cluster. I have no firewall enabled on this cluster.

Today I tried adding another SDN VLAN to this cluster, without success.

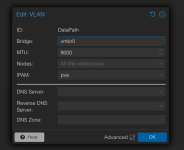

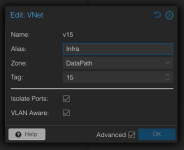

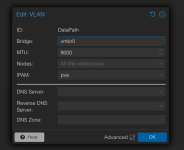

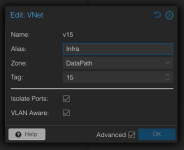

Above is my configuration from the GUI.

When I run `tcpdump -i enp1s0f1 -nn -e vlan 15`, all I get for that VLAN is this:

```
16:39:59.384662 08:47:4c:59:3f:4b > 01:80:c2:00:00:00, ethertype 802.1Q (0x8100), length 64: vlan 15, p 7, 802.3LLC, dsap STP (0x42) Individual, ssap STP (0x42) Command, ctrl 0x03: STP 802.1w, Rapid STP, Flags [Proposal, Learn, Forward, Agreement], bridge-id f009.08:47:4c:59:3f:89.8801, length 36
```
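To read that capture line field by field, here is a minimal Python sketch. The regex is my own, written only against the `tcpdump -e` lines pasted in this post, not a full tcpdump grammar. It extracts the MACs, VLAN ID and priority, and flags frames sent to 01:80:c2:00:00:00, the bridge group address used for STP BPDUs. On the line above it shows that the only frame seen on VLAN 15 is a Rapid STP BPDU from 08:47:4c:59:3f:4b.

```python
import re

# Link-level header printed by `tcpdump -e` for 802.1Q frames (assumed layout,
# based on the lines in this post):
# "<time> <src> > <dst>, ethertype 802.1Q (0x8100), length N: vlan ID, p PRIO, ..."
FRAME_RE = re.compile(
    r"^(?P<time>\S+) (?P<src>[0-9a-f:]{17}) > (?P<dst>[0-9a-f:]{17}), "
    r"ethertype 802\.1Q \(0x8100\), length (?P<length>\d+): "
    r"vlan (?P<vlan>\d+), p (?P<prio>\d+), (?P<rest>.*)$"
)

# IEEE bridge group address, the destination of STP/RSTP BPDUs.
STP_MULTICAST = "01:80:c2:00:00:00"


def classify(line: str) -> dict:
    """Split one tagged tcpdump line into fields and label what kind of frame it is."""
    m = FRAME_RE.match(line.strip())
    if m is None:
        raise ValueError("not an 802.1Q `tcpdump -e` line")
    info = m.groupdict()
    for key in ("vlan", "prio", "length"):
        info[key] = int(info[key])
    if info["dst"] == STP_MULTICAST:
        info["kind"] = "stp-bpdu"
    elif "ARP" in info["rest"]:
        info["kind"] = "arp"
    else:
        info["kind"] = "other"
    return info


LINE = (
    "16:39:59.384662 08:47:4c:59:3f:4b > 01:80:c2:00:00:00, ethertype 802.1Q (0x8100), "
    "length 64: vlan 15, p 7, 802.3LLC, dsap STP (0x42) Individual, ssap STP (0x42) Command, "
    "ctrl 0x03: STP 802.1w, Rapid STP, Flags [Proposal, Learn, Forward, Agreement], "
    "bridge-id f009.08:47:4c:59:3f:89.8801, length 36"
)

frame = classify(LINE)
print(frame["vlan"], frame["kind"], frame["src"])
```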

Checking the switch infrastructure outside the server, it seems fine: none of the ports are in STP blocking state and all are operating normally.

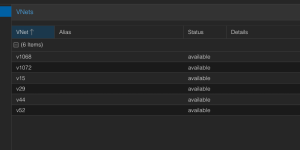

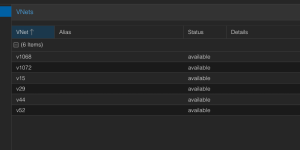

The SDN status shows all of the vnets as available.

I have a separate VLAN dedicated to management on this host, independent of SDN: VLAN 16.

My interface output from `ip addr` is:

```
6: enp1s0f1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9000 qdisc mq master vmbr0 state UP group default qlen 1000
    link/ether 0c:c4:7a:bc:5d:13 brd ff:ff:ff:ff:ff:ff
    altname enx0cc47abc5d13
7: enp1s0f1.16@enp1s0f1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9000 qdisc noqueue master vmbr16 state UP group default qlen 1000
    link/ether 0c:c4:7a:bc:5d:13 brd ff:ff:ff:ff:ff:ff
8: vmbr16: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9000 qdisc noqueue state UP group default qlen 1000
    link/ether 0c:c4:7a:bc:5d:13 brd ff:ff:ff:ff:ff:ff
    inet 100.117.8.159/24 scope global vmbr16
       valid_lft forever preferred_lft forever
    inet6 fe80::ec4:7aff:febc:5d13/64 scope link proto kernel_ll
       valid_lft forever preferred_lft forever
9: vmbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9000 qdisc noqueue state UP group default qlen 1000
    link/ether 0c:c4:7a:bc:5d:13 brd ff:ff:ff:ff:ff:ff
    inet6 fe80::ec4:7aff:febc:5d13/64 scope link proto kernel_ll
       valid_lft forever preferred_lft forever
21: vmbr0.15@vmbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9000 qdisc noqueue master v15 state UP group default qlen 1000
    link/ether 0c:c4:7a:bc:5d:13 brd ff:ff:ff:ff:ff:ff
22: v15: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9000 qdisc noqueue state UP group default qlen 1000
    link/ether 0c:c4:7a:bc:5d:13 brd ff:ff:ff:ff:ff:ff
    inet6 fe80::ec4:7aff:febc:5d13/64 scope link proto kernel_ll
       valid_lft forever preferred_lft forever
```
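The bridge hierarchy in that output can be checked mechanically. This minimal Python sketch (the regex is my own, written against the header lines pasted above, not a complete `ip addr` parser) records each interface's VLAN parent, MTU and `master` bridge. On this output it shows that v15's only uplink is `vmbr0.15`, that `vmbr0` sits on `enp1s0f1`, and that every interface in the chain uses MTU 9000.

```python
import re

# First line of each interface in `ip addr` output, e.g.
# "21: vmbr0.15@vmbr0: <...> mtu 9000 qdisc noqueue master v15 state UP ..."
HEADER_RE = re.compile(
    r"^\d+: (?P<name>[^:@]+)(?:@(?P<parent>[^:]+))?: <[^>]*> "
    r"mtu (?P<mtu>\d+)(?:.*? master (?P<master>\S+))?"
)


def parse_ip_addr(text: str) -> dict:
    """Map interface name -> {"parent", "mtu", "master"} from raw `ip addr` text."""
    links = {}
    for line in text.splitlines():
        m = HEADER_RE.match(line.strip())
        if m:  # link/ether, inet, valid_lft ... lines do not match and are skipped
            links[m["name"]] = {
                "parent": m["parent"],
                "mtu": int(m["mtu"]),
                "master": m["master"],
            }
    return links


def members(links: dict, bridge: str) -> list:
    """Interfaces whose `master` is the given bridge."""
    return [name for name, info in links.items() if info["master"] == bridge]


# Header lines copied from the `ip addr` output above.
IP_ADDR = """\
6: enp1s0f1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9000 qdisc mq master vmbr0 state UP group default qlen 1000
7: enp1s0f1.16@enp1s0f1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9000 qdisc noqueue master vmbr16 state UP group default qlen 1000
8: vmbr16: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9000 qdisc noqueue state UP group default qlen 1000
9: vmbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9000 qdisc noqueue state UP group default qlen 1000
21: vmbr0.15@vmbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9000 qdisc noqueue master v15 state UP group default qlen 1000
22: v15: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9000 qdisc noqueue state UP group default qlen 1000
"""

links = parse_ip_addr(IP_ADDR)
for bridge in ("vmbr0", "vmbr16", "v15"):
    print(bridge, "<-", members(links, bridge))
print("MTUs:", sorted({info["mtu"] for info in links.values()}))
```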

I have two virtual machines on v15 that cannot communicate with each other. Their tap interfaces are:

```
13: tap138i0: <BROADCAST,MULTICAST,PROMISC,UP,LOWER_UP> mtu 9000 qdisc fq_codel master v15 state UNKNOWN group default qlen 1000
    link/ether 1e:5a:8a:f2:02:98 brd ff:ff:ff:ff:ff:ff
16: tap113i0: <BROADCAST,MULTICAST,PROMISC,UP,LOWER_UP> mtu 9000 qdisc fq_codel master v15 state UNKNOWN group default qlen 1000
    link/ether ca:bc:c8:47:fc:7c brd ff:ff:ff:ff:ff:ff
```

The other vnets on this interface are reachable from outside and can ping each other. The v15 behavior occurs on all nodes in the cluster, and so far only with v15.
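Every vnet in `vnets.cfg`, v15 included, is configured with `isolate-ports 1`. As I understand the Proxmox SDN documentation, that option sets the Linux bridge `isolated` flag on the guest ports (the taps) but not on the vnet's own uplink (`vmbr0.15` here), and per `bridge(8)` an isolated port can only communicate with non-isolated ports. The toy Python model below is my own simplification of that forwarding rule, not kernel code; the flag values are assumed, not read from the host.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BridgePort:
    name: str
    isolated: bool  # models the Linux bridge per-port "isolated" flag


def can_forward(src: BridgePort, dst: BridgePort) -> bool:
    """Simplified rule from bridge(8): an isolated port may only reach
    non-isolated ports, so isolated -> isolated traffic is dropped."""
    if src.name == dst.name:
        return False  # without hairpin mode, a frame never leaves via its ingress port
    return not (src.isolated and dst.isolated)


# Assumed flags for v15: guest taps isolated, uplink vmbr0.15 not isolated.
uplink = BridgePort("vmbr0.15", isolated=False)
vm138 = BridgePort("tap138i0", isolated=True)
vm113 = BridgePort("tap113i0", isolated=True)

for src, dst in [(vm138, vm113), (vm138, uplink), (uplink, vm113)]:
    verdict = "forward" if can_forward(src, dst) else "drop"
    print(f"{src.name} -> {dst.name}: {verdict}")
```

If that reading is right, two guests on the same node and the same isolated vnet can only reach each other if the frame leaves via `vmbr0.15` and is sent back by the switch, which a switch normally does not do on the port the frame arrived on. The real per-port flags can be inspected on the host with `bridge -d link show`.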

```
root@wfprx-app10c1:~# cat /etc/pve/sdn/vnets.cfg
vnet: v1072
    zone DataPath
    alias Triton1
    isolate-ports 1
    tag 1072
    vlanaware 1

vnet: v1068
    zone DataPath
    alias Triton2
    isolate-ports 1
    tag 1068
    vlanaware 1

vnet: v29
    zone DataPath
    alias Skylab
    isolate-ports 1
    tag 29
    vlanaware 1

vnet: v52
    zone DataPath
    alias Starlord
    isolate-ports 1
    tag 52
    vlanaware 1

vnet: v44
    zone DataPath
    alias Io Lab
    isolate-ports 1
    tag 44
    vlanaware 1

vnet: v15
    zone DataPath
    alias Infra
    isolate-ports 1
    tag 15
    vlanaware 1
```
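To double-check that v15 is defined the same way as the vnets that work, a minimal Python sketch can parse the section-config layout shown above (a `type: id` header, then indented `key value` lines; this parser is my own simplification, not Proxmox's) and diff v15 against another vnet such as v29, ignoring `tag` and `alias`.

```python
def parse_section_config(text: str) -> dict:
    """Parse a Proxmox-style section config ("type: id" header, then indented
    "key value" lines) into {id: {"type": type, key: value, ...}}.
    Simplified sketch: values stay strings, comments are not handled."""
    sections = {}
    current = None
    for raw in text.splitlines():
        if not raw.strip():
            continue  # blank lines separate sections
        if not raw[0].isspace():
            stype, _, sid = raw.partition(":")  # header, e.g. "vnet: v15"
            current = {"type": stype.strip()}
            sections[sid.strip()] = current
        elif current is not None:
            key, _, value = raw.strip().partition(" ")
            current[key] = value.strip()
    return sections


def differences(a: dict, b: dict, ignore: set) -> dict:
    """Keys (outside `ignore`) whose values differ between two sections."""
    keys = (a.keys() | b.keys()) - ignore
    return {k: (a.get(k), b.get(k)) for k in sorted(keys) if a.get(k) != b.get(k)}


# Excerpt of /etc/pve/sdn/vnets.cfg from above (v29, v44 and v15).
VNETS_CFG = """\
vnet: v29
    zone DataPath
    alias Skylab
    isolate-ports 1
    tag 29
    vlanaware 1

vnet: v44
    zone DataPath
    alias Io Lab
    isolate-ports 1
    tag 44
    vlanaware 1

vnet: v15
    zone DataPath
    alias Infra
    isolate-ports 1
    tag 15
    vlanaware 1
"""

vnets = parse_section_config(VNETS_CFG)
print(differences(vnets["v15"], vnets["v29"], {"tag", "alias"}))
```

An empty result means that, apart from its tag and alias, v15 is configured exactly like v29.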

```
root@wfprx-app10c1:~# cat /etc/pve/sdn/zones.cfg
vlan: DataPath
    bridge vmbr0
    ipam pve
    mtu 9000
```

The running config (`running-conf`):

```json
{"controllers":{"ids":{}},"fabrics":{"ids":{}},"vnets":{"ids":{"v1068":{"alias":"Triton1","tag":1068,"vlanaware":1,"type":"vnet","zone":"DataPath","isolate-ports":1},"v29":{"type":"vnet","zone":"DataPath","isolate-ports":1,"tag":29,"vlanaware":1,"alias":"Skylab"},"v1072":{"alias":"Triton2","tag":1072,"vlanaware":1,"type":"vnet","zone":"DataPath","isolate-ports":1},"v44":{"alias":"Io Lab","vlanaware":1,"tag":44,"isolate-ports":1,"zone":"DataPath","type":"vnet"},"v52":{"alias":"Starlord","zone":"DataPath","isolate-ports":1,"type":"vnet","vlanaware":1,"tag":52},"v15":{"alias":"Infra","isolate-ports":1,"zone":"DataPath","type":"vnet","vlanaware":1,"tag":15}}},"zones":{"ids":{"DataPath":{"bridge":"vmbr0","mtu":9000,"ipam":"pve","type":"vlan"}}},"subnets":{"ids":{}},"version":15}
```
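Comparing the running config against `vnets.cfg` can also be scripted. A minimal Python sketch, using the JSON pasted above and the aliases hand-copied from `vnets.cfg`; the tag check relies on this cluster happening to name its vnets `v<tag>`, not on any SDN rule. Run against the values in this post, it reports matching tags for every vnet, v15 included, and flags only the aliases of v1068 and v1072, which are swapped between `vnets.cfg` and `running-conf`.

```python
import json

# running-conf JSON copied from above.
RUNNING_CONF = (
    '{"controllers":{"ids":{}},"fabrics":{"ids":{}},"vnets":{"ids":{'
    '"v1068":{"alias":"Triton1","tag":1068,"vlanaware":1,"type":"vnet","zone":"DataPath","isolate-ports":1},'
    '"v29":{"type":"vnet","zone":"DataPath","isolate-ports":1,"tag":29,"vlanaware":1,"alias":"Skylab"},'
    '"v1072":{"alias":"Triton2","tag":1072,"vlanaware":1,"type":"vnet","zone":"DataPath","isolate-ports":1},'
    '"v44":{"alias":"Io Lab","vlanaware":1,"tag":44,"isolate-ports":1,"zone":"DataPath","type":"vnet"},'
    '"v52":{"alias":"Starlord","zone":"DataPath","isolate-ports":1,"type":"vnet","vlanaware":1,"tag":52},'
    '"v15":{"alias":"Infra","isolate-ports":1,"zone":"DataPath","type":"vnet","vlanaware":1,"tag":15}}},'
    '"zones":{"ids":{"DataPath":{"bridge":"vmbr0","mtu":9000,"ipam":"pve","type":"vlan"}}},'
    '"subnets":{"ids":{}},"version":15}'
)

# Aliases as they appear in /etc/pve/sdn/vnets.cfg above, copied by hand.
VNETS_CFG_ALIASES = {
    "v1072": "Triton1",
    "v1068": "Triton2",
    "v29": "Skylab",
    "v52": "Starlord",
    "v44": "Io Lab",
    "v15": "Infra",
}

conf = json.loads(RUNNING_CONF)
running = conf["vnets"]["ids"]


def alias_mismatches(running: dict, cfg_aliases: dict) -> dict:
    """vnet -> (alias in vnets.cfg, alias in running-conf) where they differ."""
    return {
        vnet: (cfg_aliases.get(vnet), entry.get("alias"))
        for vnet, entry in running.items()
        if cfg_aliases.get(vnet) != entry.get("alias")
    }


def tag_mismatches(running: dict) -> list:
    """vnets whose tag differs from the number in their name (v<tag> naming assumed)."""
    return [vnet for vnet, entry in running.items() if entry["tag"] != int(vnet.lstrip("v"))]


print("alias mismatches:", alias_mismatches(running, VNETS_CFG_ALIASES))
print("tag mismatches:", tag_mismatches(running))
print("zone:", conf["zones"]["ids"]["DataPath"])
```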

Here is the `/etc/network/interfaces.d/sdn` configuration, which matches the running configuration:

```
#version:15

auto v1068
iface v1068
    bridge_ports vmbr0.1068
    bridge_stp off
    bridge_fd 0
    bridge-vlan-aware yes
    bridge-vids 2-4094
    mtu 9000
    alias Triton1

auto v1072
iface v1072
    bridge_ports vmbr0.1072
    bridge_stp off
    bridge_fd 0
    bridge-vlan-aware yes
    bridge-vids 2-4094
    mtu 9000
    alias Triton2

auto v15
iface v15
    bridge_ports vmbr0.15
    bridge_stp off
    bridge_fd 0
    bridge-vlan-aware yes
    bridge-vids 2-4094
    mtu 9000
    alias Infra

auto v29
iface v29
    bridge_ports vmbr0.29
    bridge_stp off
    bridge_fd 0
    bridge-vlan-aware yes
    bridge-vids 2-4094
    mtu 9000
    alias Skylab

auto v44
iface v44
    bridge_ports vmbr0.44
    bridge_stp off
    bridge_fd 0
    bridge-vlan-aware yes
    bridge-vids 2-4094
    mtu 9000
    alias Io Lab

auto v52
iface v52
    bridge_ports vmbr0.52
    bridge_stp off
    bridge_fd 0
    bridge-vlan-aware yes
    bridge-vids 2-4094
    mtu 9000
    alias Starlord
```
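The generated stanzas can be cross-checked against the vnet tags in the same way. This Python sketch is a simplified reader for the ifupdown2-style layout above (not the real ifupdown2 parser) and assumes, as the generated file suggests, that each vnet in a VLAN zone on `vmbr0` should have `vmbr0.<tag>` as its bridge port. For v15 and v29 it reports no mismatch.

```python
def parse_ifaces(text: str) -> dict:
    """Collect the options of each "iface" stanza in an ifupdown2-style file.
    Simplified: one option per line; comments and "auto" lines are skipped."""
    ifaces = {}
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition(" ")
        if key == "auto":
            continue
        if key == "iface":
            current = ifaces.setdefault(value.split()[0], {})
        elif current is not None:
            current[key] = value.strip()
    return ifaces


def uplink_mismatches(ifaces: dict, tags: dict, zone_bridge: str = "vmbr0") -> list:
    """vnets whose bridge_ports is not "<zone bridge>.<tag>"."""
    return [
        vnet for vnet, tag in tags.items()
        if ifaces.get(vnet, {}).get("bridge_ports") != f"{zone_bridge}.{tag}"
    ]


# Excerpt of /etc/network/interfaces.d/sdn from above.
SDN_IFACES = """\
#version:15

auto v15
iface v15
    bridge_ports vmbr0.15
    bridge_stp off
    bridge_fd 0
    bridge-vlan-aware yes
    bridge-vids 2-4094
    mtu 9000
    alias Infra

auto v29
iface v29
    bridge_ports vmbr0.29
    bridge_stp off
    bridge_fd 0
    bridge-vlan-aware yes
    bridge-vids 2-4094
    mtu 9000
    alias Skylab
"""

# vnet -> VLAN tag, as set in vnets.cfg above.
TAGS = {"v15": 15, "v29": 29}

ifaces = parse_ifaces(SDN_IFACES)
print("uplink mismatches:", uplink_mismatches(ifaces, TAGS))
print("v15:", ifaces["v15"])
```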

When I try to get those two VMs to talk to each other, layer 2 appears to be broken; all I see are ARP requests:

```
17:07:03.424079 bc:24:11:85:a2:3d > ff:ff:ff:ff:ff:ff, ethertype 802.1Q (0x8100), length 46: vlan 15, p 0, ethertype ARP (0x0806), Request who-has 100.117.15.79 tell 100.117.15.76, length 28
17:07:03.456097 bc:24:11:85:a2:3d > ff:ff:ff:ff:ff:ff, ethertype 802.1Q (0x8100), length 46: vlan 15, p 0, ethertype ARP (0x0806), Request who-has 100.117.15.1 tell 100.117.15.76, length 28
17:07:04.448112 bc:24:11:85:a2:3d > ff:ff:ff:ff:ff:ff, ethertype 802.1Q (0x8100), length 46: vlan 15, p 0, ethertype ARP (0x0806), Request who-has 100.117.15.79 tell 100.117.15.76, length 28
17:07:04.480102 bc:24:11:85:a2:3d > ff:ff:ff:ff:ff:ff, ethertype 802.1Q (0x8100), length 46: vlan 15, p 0, ethertype ARP (0x0806), Request who-has 100.117.15.1 tell 100.117.15.76, length 28
```
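Summarising that capture per target IP makes the pattern explicit. This small Python sketch counts ARP requests whose target never answers; the `Reply` branch of the regex is my assumption of tcpdump's usual `Reply <ip> is-at <mac>` wording, since no replies appear in this capture. On the four lines above, both 100.117.15.79 and 100.117.15.1 are asked twice and never reply.

```python
import re
from collections import Counter

# ARP payload as printed by `tcpdump -nn -e`. The Reply alternative assumes the
# usual "Reply <ip> is-at <mac>" wording.
ARP_RE = re.compile(
    r"ARP \(0x0806\), (?:Request who-has (?P<target>[\d.]+) tell (?P<sender>[\d.]+)"
    r"|Reply (?P<replier>[\d.]+) is-at)"
)


def unanswered(lines: list) -> dict:
    """Target IP -> number of ARP requests for it, for targets that never replied."""
    asked = Counter()
    answered = set()
    for line in lines:
        m = ARP_RE.search(line)
        if m is None:
            continue  # not an ARP line
        if m["target"]:
            asked[m["target"]] += 1
        else:
            answered.add(m["replier"])
    return {ip: n for ip, n in asked.items() if ip not in answered}


# The four capture lines from above.
CAPTURE = [
    "17:07:03.424079 bc:24:11:85:a2:3d > ff:ff:ff:ff:ff:ff, ethertype 802.1Q (0x8100), length 46: vlan 15, p 0, ethertype ARP (0x0806), Request who-has 100.117.15.79 tell 100.117.15.76, length 28",
    "17:07:03.456097 bc:24:11:85:a2:3d > ff:ff:ff:ff:ff:ff, ethertype 802.1Q (0x8100), length 46: vlan 15, p 0, ethertype ARP (0x0806), Request who-has 100.117.15.1 tell 100.117.15.76, length 28",
    "17:07:04.448112 bc:24:11:85:a2:3d > ff:ff:ff:ff:ff:ff, ethertype 802.1Q (0x8100), length 46: vlan 15, p 0, ethertype ARP (0x0806), Request who-has 100.117.15.79 tell 100.117.15.76, length 28",
    "17:07:04.480102 bc:24:11:85:a2:3d > ff:ff:ff:ff:ff:ff, ethertype 802.1Q (0x8100), length 46: vlan 15, p 0, ethertype ARP (0x0806), Request who-has 100.117.15.1 tell 100.117.15.76, length 28",
]

print(unanswered(CAPTURE))
```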

I have also rebooted this node:

```
root@wfprx-app10c1:/etc/network# uptime
17:02:29 up 2:13, 1 user, load average: 0.18, 0.13, 0.17
root@wfprx-app10c1:/etc/network#
```
