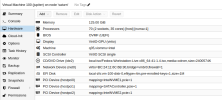

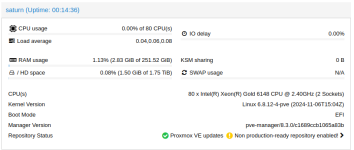

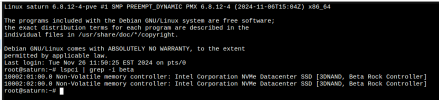

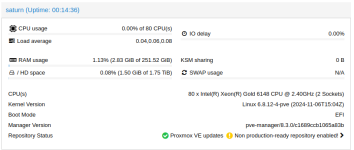

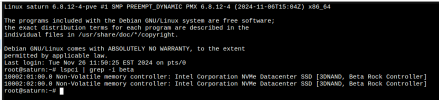

I'm working with a Dell Precision 7920 with all virtualization flags enabled in the BIOS. The Proxmox host is saturn; the virtual guest is jupiter. Sorry for the long post; I'm trying to include as much pertinent data as possible.

tl;dr: a multi-socket system has u.2 NVMe PCIe drives resource mapped to a guest, and half of them (one socket's worth) disappear from the host when the VM guest is booted.

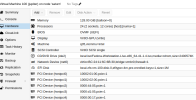

I am passing through four u.2 NVMe drives - in this host, each CPU socket supports 2x u.2. I also have 2x m.2 NVMe drives, which are booting the host.

Desired NVMe

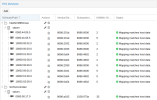

I am also passing through the built in SATA controller:

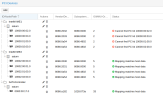

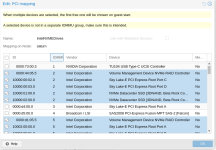

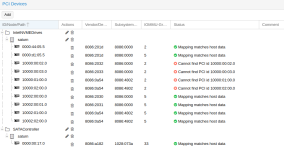

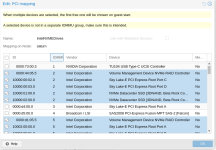

I have created resource mappings for the NVMe RAID Controller (which I do not intend to use) as well as the PCI Express root ports and the NVMe devices:

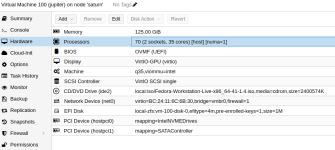

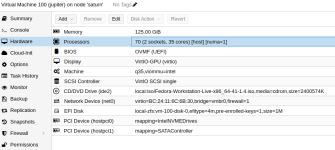

I have added these mapped devices to my VM, which is configured with 70 cores, host CPU passthrough, and NUMA enabled:

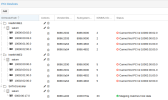

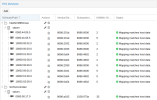

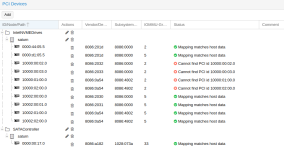

When I boot the VM, half of the NVMe drives disappear from the mapping, and are not visible to the virtual guest or the host:


    root@saturn:~# numactl -s
    policy: default
    preferred node: current
    physcpubind: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79
    cpubind: 0 1
    nodebind: 0 1
    membind: 0 1
    preferred:

I am passing through four u.2 NVMe drives - in this host, each CPU socket supports 2x u.2. I also have 2x m.2 NVMe drives, which are booting the host.

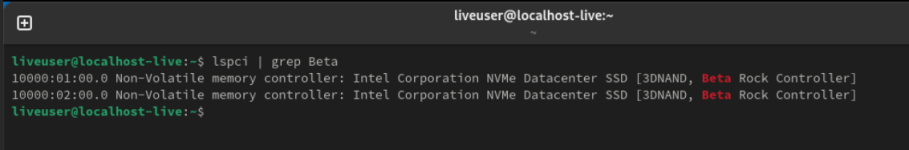

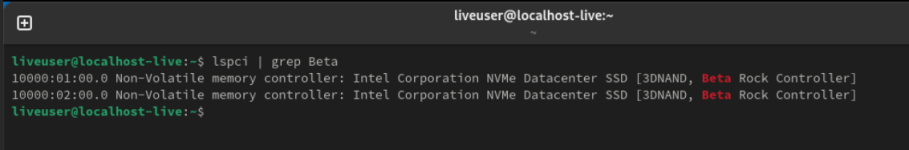

    root@saturn:~# lspci | grep -i beta
    10000:01:00.0 Non-Volatile memory controller: Intel Corporation NVMe Datacenter SSD [3DNAND, Beta Rock Controller]
    10000:02:00.0 Non-Volatile memory controller: Intel Corporation NVMe Datacenter SSD [3DNAND, Beta Rock Controller]
    10002:01:00.0 Non-Volatile memory controller: Intel Corporation NVMe Datacenter SSD [3DNAND, Beta Rock Controller]
    10002:02:00.0 Non-Volatile memory controller: Intel Corporation NVMe Datacenter SSD [3DNAND, Beta Rock Controller]

Desired NVMe

    /devices/pci0000:d1/0000:d1:05.5/pci10002:00/10002:00:01.0/10002:02:00.0/nvme/nvme5
    /devices/pci0000:44/0000:44:05.5/pci10000:00/10000:00:02.0/10000:01:00.0/nvme/nvme0
    /devices/pci0000:44/0000:44:05.5/pci10000:00/10000:00:03.0/10000:02:00.0/nvme/nvme2
    /devices/pci0000:d1/0000:d1:05.5/pci10002:00/10002:00:00.0/10002:01:00.0/nvme/nvme4

I am also passing through the built in SATA controller:

    /devices/pci0000:00/0000:00:17.0
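
To make the split easier to see, the four NVMe sysfs paths listed earlier can be grouped by the host-side PCI device each drive sits behind. This is only a rough sketch that works on the pasted paths as text; `group_by_upstream` and `sample_paths` are names I made up for illustration, and the assumption that the second path component is the upstream device comes from the layout of these particular paths, not from any Proxmox tooling.

```shell
#!/usr/bin/env bash
# Group NVMe sysfs device paths by the upstream host PCI device.
# The sample paths are copied from the post above.

group_by_upstream() {
  # Reads sysfs paths on stdin and prints:
  #   <upstream host device> <NVMe PCI address> <nvme name>
  # Splitting on "/" gives: "", devices, pciDDDD:BB, upstream, ..., nvme-addr, nvme, nvmeN
  awk -F/ '
    $2 == "devices" && NF >= 9 {
      print $4, $(NF-2), $NF
    }' | sort
}

sample_paths='/devices/pci0000:d1/0000:d1:05.5/pci10002:00/10002:00:01.0/10002:02:00.0/nvme/nvme5
/devices/pci0000:44/0000:44:05.5/pci10000:00/10000:00:02.0/10000:01:00.0/nvme/nvme0
/devices/pci0000:44/0000:44:05.5/pci10000:00/10000:00:03.0/10000:02:00.0/nvme/nvme2
/devices/pci0000:d1/0000:d1:05.5/pci10002:00/10002:00:00.0/10002:01:00.0/nvme/nvme4'

printf '%s\n' "$sample_paths" | group_by_upstream
```

With the sample paths, nvme0 and nvme2 come out under 0000:44:05.5 and nvme4 and nvme5 under 0000:d1:05.5, so the drives pair up two per upstream device, presumably one pair per socket.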
