Hello everyone!

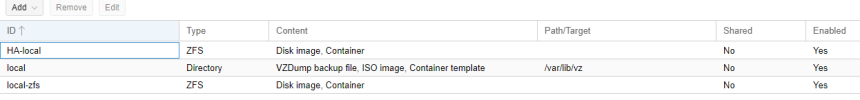

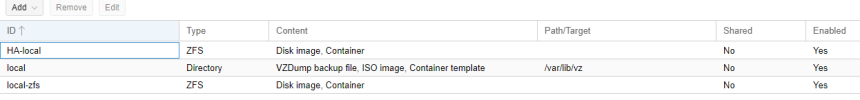

I have set up a cluster with 2 nodes and 1 quorum device, which is a Raspberry Pi.

Something very strange happened today. I was setting up port-security on my switch when pv1/node1 went down. Without saving anything, I just restarted the switch and pv1 started working again. I saw that a migration had been triggered and my CT had been moved to the other node (i.e. not the main node, node1).

When I tried to migrate the CT from node2 back to the main node, node1, I got this error log:

task started by HA resource agent

2023-09-16 00:25:09 starting migration of CT 801 to node 'node1' (PV1)

2023-09-16 00:25:09 found local volume 'HA-local:subvol-801-disk-0' (in current VM config)

2023-09-16 00:25:09 found local volume 'HA-local:subvol-801-disk-1' (via storage)

2023-09-16 00:25:09 start replication job

2023-09-16 00:25:09 guest => CT 801, running => 0

2023-09-16 00:25:09 volumes => HA-local:subvol-801-disk-0

2023-09-16 00:25:10 create snapshot '__replicate_801-0_1694813109__' on HA-local:subvol-801-disk-0

2023-09-16 00:25:10 using secure transmission, rate limit: none

2023-09-16 00:25:10 full sync 'HA-local:subvol-801-disk-0' (__replicate_801-0_1694813109__)

2023-09-16 00:25:12 full send of rpool/subvol-801-disk-0@__replicate_801-0_1694813109__ estimated size is 3.06G

2023-09-16 00:25:12 total estimated size is 3.06G

2023-09-16 00:25:12 volume 'rpool/subvol-801-disk-0' already exists

2023-09-16 00:25:12 command 'zfs send -Rpv -- rpool/subvol-801-disk-0@__replicate_801-0_1694813109__' failed: got signal 13

send/receive failed, cleaning up snapshot(s)..

2023-09-16 00:25:12 delete previous replication snapshot '__replicate_801-0_1694813109__' on HA-local:subvol-801-disk-0

2023-09-16 00:25:13 end replication job with error: command 'set -o pipefail && pvesm export HA-local:subvol-801-disk-0 zfs - -with-snapshots 1 -snapshot __replicate_801-0_1694813109__ | /usr/bin/ssh -e none -o 'BatchMode=yes' -o 'HostKeyAlias=node1' root@PV1 -- pvesm import HA-local:subvol-801-disk-0 zfs - -with-snapshots 1 -snapshot __replicate_801-0_1694813109__ -allow-rename 0' failed: exit code 255

2023-09-16 00:25:13 ERROR: command 'set -o pipefail && pvesm export HA-local:subvol-801-disk-0 zfs - -with-snapshots 1 -snapshot __replicate_801-0_1694813109__ | /usr/bin/ssh -e none -o 'BatchMode=yes' -o 'HostKeyAlias=node1' root@PV1 -- pvesm import HA-local:subvol-801-disk-0 zfs - -with-snapshots 1 -snapshot __replicate_801-0_1694813109__ -allow-rename 0' failed: exit code 255

2023-09-16 00:25:13 aborting phase 1 - cleanup resources

2023-09-16 00:25:13 start final cleanup

2023-09-16 00:25:13 ERROR: migration aborted (duration 00:00:04): command 'set -o pipefail && pvesm export HA-local:subvol-801-disk-0 zfs - -with-snapshots 1 -snapshot __replicate_801-0_1694813109__ | /usr/bin/ssh -e none -o 'BatchMode=yes' -o 'HostKeyAlias=node1' root@PV1 -- pvesm import HA-local:subvol-801-disk-0 zfs - -with-snapshots 1 -snapshot __replicate_801-0_1694813109__ -allow-rename 0' failed: exit code 255

TASK ERROR: migration aborted
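In both logs the sending side of the `pvesm export ... | ssh ... pvesm import` pipeline dies right after the line `volume 'rpool/subvol-801-disk-0' already exists` (`got signal 13` during the migration, `signal received` in the replication job). On Linux, signal 13 is SIGPIPE: the kernel sends it to a process that writes into a pipe whose reading end has been closed, i.e. the receiving side stopped reading. The short Python sketch below, which assumes a Linux host, looks up the signal name and reproduces the broken-pipe condition with `os.pipe`. The function names are hypothetical helpers for illustration only.

```python
import errno
import os
import signal


def signal_name(num: int) -> str:
    """Return the symbolic name of a signal number on this platform."""
    return signal.Signals(num).name


def write_to_closed_pipe() -> int:
    """Write into a pipe whose read end is already closed; return the errno seen.

    A process with the default SIGPIPE disposition would be terminated by the
    signal here. CPython ignores SIGPIPE at startup, so the same condition
    shows up as BrokenPipeError (EPIPE) instead.
    """
    read_fd, write_fd = os.pipe()
    os.close(read_fd)  # the "receiver" goes away before reading anything
    try:
        os.write(write_fd, b"replication stream")
    except BrokenPipeError as exc:
        return exc.errno
    finally:
        os.close(write_fd)
    return 0  # not reached on POSIX: the write above always fails


if __name__ == "__main__":
    print(signal_name(13))                        # SIGPIPE on Linux
    print(write_to_closed_pipe() == errno.EPIPE)  # True
```

Because Python ignores SIGPIPE by default, the sketch observes the error as `EPIPE` rather than being killed, which makes the condition easy to inspect without terminating the interpreter.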

An error also occurred in the replication job:

2023-09-16 00:38:01 801-0: start replication job

2023-09-16 00:38:01 801-0: guest => CT 801, running => 1

2023-09-16 00:38:01 801-0: volumes => HA-local:subvol-801-disk-0

2023-09-16 00:38:03 801-0: freeze guest filesystem

2023-09-16 00:38:03 801-0: create snapshot '__replicate_801-0_1694813881__' on HA-local:subvol-801-disk-0

2023-09-16 00:38:03 801-0: thaw guest filesystem

2023-09-16 00:38:03 801-0: using secure transmission, rate limit: none

2023-09-16 00:38:03 801-0: full sync 'HA-local:subvol-801-disk-0' (__replicate_801-0_1694813881__)

2023-09-16 00:38:05 801-0: full send of rpool/subvol-801-disk-0@__replicate_801-0_1694813881__ estimated size is 3.04G

2023-09-16 00:38:05 801-0: total estimated size is 3.04G

2023-09-16 00:38:05 801-0: volume 'rpool/subvol-801-disk-0' already exists

2023-09-16 00:38:05 801-0: warning: cannot send 'rpool/subvol-801-disk-0@__replicate_801-0_1694813881__': signal received

2023-09-16 00:38:05 801-0: cannot send 'rpool/subvol-801-disk-0': I/O error

2023-09-16 00:38:05 801-0: command 'zfs send -Rpv -- rpool/subvol-801-disk-0@__replicate_801-0_1694813881__' failed: exit code 1

2023-09-16 00:38:06 801-0: delete previous replication snapshot '__replicate_801-0_1694813881__' on HA-local:subvol-801-disk-0

2023-09-16 00:38:06 801-0: end replication job with error: command 'set -o pipefail && pvesm export HA-local:subvol-801-disk-0 zfs - -with-snapshots 1 -snapshot __replicate_801-0_1694813881__ | /usr/bin/ssh -e none -o 'BatchMode=yes' -o 'HostKeyAlias=node1' root@PV1 -- pvesm import HA-local:subvol-801-disk-0 zfs - -with-snapshots 1 -snapshot __replicate_801-0_1694813881__ -allow-rename 0' failed: exit code 255

One thing I noticed was that the container came up with a very old configuration applied (settings I had made about 3 months ago). I ran `zfs list -t all -r /rpool/` on both nodes:

Node 1:

| NAME | USED | AVAIL | REFER | MOUNTPOINT |
|---|---|---|---|---|
| rpool | 7.87G | 221G | 104K | /rpool |
| rpool/ROOT | 6.22G | 221G | 96K | /rpool/ROOT |
| rpool/ROOT/pve-1 | 6.22G | 221G | 6.22G | / |
| rpool/data | 96K | 221G | 96K | /rpool/data |
| rpool/subvol-801-disk-0 | 1.60G | 98.4G | 1.60G | /rpool/subvol-801-disk-0 |

Node 2:

| NAME | USED | AVAIL | REFER | MOUNTPOINT |
|---|---|---|---|---|
| rpool | 4.70G | 167G | 104K | /rpool |
| rpool/ROOT | 1.36G | 167G | 96K | /rpool/ROOT |
| rpool/ROOT/pve-1 | 1.36G | 167G | 1.36G | / |
| rpool/data | 96K | 167G | 96K | /rpool/data |
| rpool/subvol-801-disk-0 | 1.66G | 98.3G | 1.66G | /rpool/subvol-801-disk-0 |
| rpool/subvol-801-disk-1 | 1.60G | 98.4G | 1.60G | /rpool/subvol-801-disk-1 |
| `rpool/subvol-801-disk-1@__replicate_801-0_1694725208__` | 0B | - | 1.60G | - |
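The two listings can be compared mechanically. The sketch below is a hypothetical helper (not a Proxmox VE tool) that takes the `zfs list` output from this post, assumed to be plain whitespace-separated columns with a header row, and checks whether a dataset already exists on the target node and which `__replicate_` snapshots the two nodes share for it. With this data it reports that `rpool/subvol-801-disk-0` already exists on node1 while the nodes share no replication snapshot for it, which lines up with the `full sync` followed by `already exists` lines in both logs. The only replication snapshot on node2 belongs to `subvol-801-disk-1`.

```python
# Hypothetical helper: compare `zfs list -t all` output from two nodes.
NODE1 = """\
NAME USED AVAIL REFER MOUNTPOINT
rpool 7.87G 221G 104K /rpool
rpool/ROOT 6.22G 221G 96K /rpool/ROOT
rpool/ROOT/pve-1 6.22G 221G 6.22G /
rpool/data 96K 221G 96K /rpool/data
rpool/subvol-801-disk-0 1.60G 98.4G 1.60G /rpool/subvol-801-disk-0
"""

NODE2 = """\
NAME USED AVAIL REFER MOUNTPOINT
rpool 4.70G 167G 104K /rpool
rpool/ROOT 1.36G 167G 96K /rpool/ROOT
rpool/ROOT/pve-1 1.36G 167G 1.36G /
rpool/data 96K 167G 96K /rpool/data
rpool/subvol-801-disk-0 1.66G 98.3G 1.66G /rpool/subvol-801-disk-0
rpool/subvol-801-disk-1 1.60G 98.4G 1.60G /rpool/subvol-801-disk-1
rpool/subvol-801-disk-1@__replicate_801-0_1694725208__ 0B - 1.60G -
"""


def dataset_names(listing: str) -> set[str]:
    """Return the NAME column of `zfs list` output, skipping the header row."""
    rows = [line.split() for line in listing.splitlines() if line.strip()]
    return {row[0] for row in rows[1:]}


def replication_snapshots(names: set[str], dataset: str) -> set[str]:
    """Return the `__replicate_` snapshot names that belong to `dataset`."""
    prefix = f"{dataset}@__replicate_"
    return {name.split("@", 1)[1] for name in names if name.startswith(prefix)}


def check(dataset: str, source: str, target: str) -> str:
    """Classify `dataset` for a send from `source` to `target`."""
    src, dst = dataset_names(source), dataset_names(target)
    if dataset not in dst:
        return "absent on target"
    common = replication_snapshots(src, dataset) & replication_snapshots(dst, dataset)
    if common:
        return f"shared replication snapshots: {sorted(common)}"
    return "exists on target, no common replication snapshot"


if __name__ == "__main__":
    # The CT currently lives on node2, so node2 is the source and node1 the target.
    print(check("rpool/subvol-801-disk-0", NODE2, NODE1))
    # exists on target, no common replication snapshot
```

The parser only splits on whitespace, so it assumes no dataset name or mountpoint contains spaces; `zfs list -H` (tab-separated, no header) would be a sturdier input if adapted accordingly.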

This is my Datacenter storage setup (the CT is on HA-local):

What is causing this issue, guys?

Thanks in advance!
