Is there a way (live or not live) to migrate virtual disks to another storage?

Background: I have a cluster with 4 nodes and 2 SANs. Each FC-SAN is shared by two of the nodes, and I want to move the virtual disks from one SAN to the other.

Is this possible?

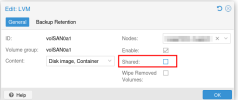

I'm pretty sure I've seen a live-migration dialog in the Web-GUI where I could change the target storage before migrating, but that option isn't offered here. So I always get the error message "storage 'san01' is not available on node 'node04' (500)", because node04 only has san02-lvm.

Is this a limitation of LVM-thick?

Migrating the disk with the "move storage" disk action via an NFS share (which all 4 nodes can access) works, but takes much longer.
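For reference, the NFS detour I'm using corresponds roughly to the CLI sequence sketched below. This is only a dry-run helper that prints the `qm` commands, it does not run them. The VM ID `100`, the disk name `scsi0` and the storage name `nfs-share` are made-up placeholders; `node04` and `san02-lvm` are from my setup. Newer releases call the first subcommand `qm disk move`, with `qm move-disk` kept as an alias as far as I know.

```python
import shlex


def nfs_detour_commands(vmid, disk, nfs_storage, target_node, target_storage):
    """Build the three qm invocations for the NFS detour as argv lists.

    Nothing is executed here; the caller decides whether to print or run them.
    All argument values are placeholders supplied by the caller.
    """
    return [
        # 1. On the source node: move the disk from the source SAN to the
        #    NFS storage that every node can reach, dropping the old copy.
        ["qm", "move-disk", str(vmid), disk, nfs_storage, "--delete", "1"],
        # 2. Live-migrate the VM; its disk now sits on shared storage,
        #    so no SAN needs to be visible on both nodes.
        ["qm", "migrate", str(vmid), target_node, "--online"],
        # 3. On the target node (the VM lives there now): move the disk
        #    from NFS to the SAN that is attached to that node.
        ["qm", "move-disk", str(vmid), disk, target_storage, "--delete", "1"],
    ]


if __name__ == "__main__":
    for argv in nfs_detour_commands(100, "scsi0", "nfs-share", "node04", "san02-lvm"):
        print(shlex.join(argv))
```

The slow part is that every disk gets copied twice (SAN to NFS, then NFS to SAN), which is why I'm asking whether a direct path exists.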
