Dear members,

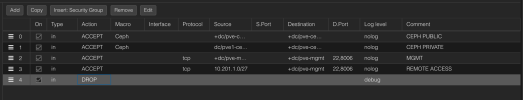

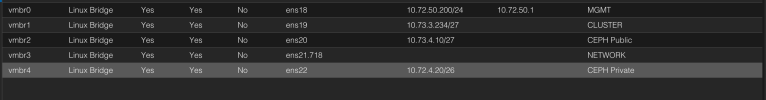

I have the following HCI setup; a high-level overview is attached:

4x Proxmox VE 8.3.2 cluster nodes with local SSD disks in a Ceph cluster; each node has 12 interfaces:

- 2x 1Gbps interface (Proxmox Management)

- 2x 1Gbps interface (Proxmox Cluster)

- 2x 40Gbps interface (Ceph Public)

- 2x 40Gbps interface (Ceph Private)

- 2x 40Gbps interface (Internal Network)

- 2x 10Gbps interface (DMZ Network)

1x NAS with NFS connected to Proxmox cluster

Everything runs in a semi-air-gapped network (L2 only), accessible from a single VM that has interfaces in the same network.

The environment runs more than 80 critical VMs, and I'm planning to apply micro-segmentation instead of relying on iptables at the host level of each VM. I learned that the Proxmox nftables firewall is still a technology preview, and I have the following questions:

1- Should I use nftables already, or is it still not stable? And if I rely on the default Proxmox iptables firewall for now, will it be easy to migrate the rules once nftables becomes production-ready?

2- As far as I understand, there are anti-lockout rules for ports 22 and 8006 plus VNC for local access. Since I have a VM within the same subnet, do I still need to create a rule to allow my access?

3- Applying a firewall rule at the cluster level applies it to all nodes; do I still need to create the rules at the node level as well, or is that unnecessary?

4- I need to split the project into two sub-tasks (cluster/node level, then VMs). If I enable the firewall at the cluster level, with the node firewall enabled by default, while keeping the VM firewall disabled, will the rules be applied to the nodes?

I also ran tcpdump between the nodes and found the following traffic; together with some research, this is the list I came up with so that I don't miss any rules. Can you please confirm whether it is sufficient?

| Interface | Ports | Protocol | Source Interface | Destination Interface | Purpose |
| --- | --- | --- | --- | --- | --- |
| MGMT | 22, 443, 8006 | TCP | MGMT (Node 1-4) | MGMT (Node 1-4) | Proxmox management (SSH, GUI/API). |
| Cluster | 5404-5405 | UDP | Cluster (Node 1-4) | Cluster (Node 1-4) | Corosync cluster communication. |
| Ceph Public | 6789, 6800-7300 | TCP | Ceph Public (Node 1-4) | Ceph Public (Node 1-4) | Ceph MON and client-facing traffic. |
| Ceph Private | 6800-7300 | TCP | Ceph Private (Node 1-4) | Ceph Private (Node 1-4) | Ceph OSD replication and recovery. |
| Storage (NFS) | 2049, 111, 32765-32769 | TCP/UDP | Storage (Node 1-4) | NFS Server | NFS traffic for VM and container storage. |
| Backup | 8007, 22 | TCP | Backup (Node 1-4) | Proxmox Backup Server | Backup traffic for VM and container snapshots. |
| DMZ | Custom | TCP/UDP | DMZ (External Clients) | DMZ (Proxmox Nodes) | Application-specific traffic. |
| Internal | Custom | TCP/UDP | Internal (External Clients) | Internal (Proxmox Nodes) | Application-specific traffic. |
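To make the question more concrete, here is a minimal sketch of how the node-to-node rows of the table might be turned into rule lines in the Proxmox firewall file syntax (`IN ACCEPT -source ... -p ... -dport ...`). It only covers the four inbound rows between the nodes themselves. The IPSet names (`mgmt_nodes`, `cluster_nodes`, and so on) are hypothetical placeholders that would have to be defined separately, and the output is an illustration of my intent, not a tested configuration.

```python
# Sketch: render the node-to-node rows of the port table as Proxmox-style
# firewall rule lines. IPSet names below are hypothetical placeholders.

ROWS = [
    # (protocol, ports as written in the table, source IPSet, comment)
    ("tcp", "22, 443, 8006", "mgmt_nodes", "Proxmox management"),
    ("udp", "5404-5405", "cluster_nodes", "Corosync"),
    ("tcp", "6789, 6800-7300", "ceph_public_nodes", "Ceph MON and clients"),
    ("tcp", "6800-7300", "ceph_private_nodes", "Ceph OSD replication"),
]


def to_dport(ports: str) -> str:
    """Convert '6789, 6800-7300' into '6789,6800:7300'.

    The firewall rule syntax writes port ranges with a colon and
    separates multiple ports or ranges with commas.
    """
    return ",".join(p.strip().replace("-", ":") for p in ports.split(","))


def to_rule(proto: str, ports: str, ipset: str, comment: str) -> str:
    """Build one inbound ACCEPT rule that matches traffic from an IPSet."""
    return (
        f"IN ACCEPT -source +{ipset} -p {proto} "
        f"-dport {to_dport(ports)} # {comment}"
    )


def render_rules(rows) -> str:
    """Render all rows under a [RULES] section header."""
    return "\n".join(["[RULES]"] + [to_rule(*row) for row in rows])


if __name__ == "__main__":
    print(render_rules(ROWS))
```

The NFS, backup, DMZ and internal rows are left out on purpose: they describe traffic leaving the nodes or application-specific traffic, so they would need outbound or VM-level rules that I have not worked out yet.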

Thanks