Hi,

every day after a backup of VMs usage of RAM on some nodes in my cluster increases (from 5GB to 9 GB per day). It happenes until node crashes.

It started to happen after upgrade to Proxmox VE 8.2.2 and kernel 6.8.4-3-pve. As storage we use CEPH. First of all, I thought it was caused by Ceph, but I have some nodes without Ceph with the same issue.

This is the output of

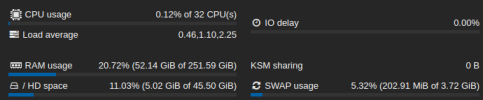

Memory usage graph in UI says that 192 GB is used. Today I migrated all VMs from node and it is still using 52 GBs of RAM:

Output from

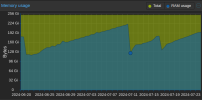

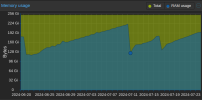

Here is graph of memory usage for last month:

Biggest dropdown is caused by reboot to make kernel upgrade to the latest from 6.8.4-3-pve to 6.8.8-2-pve. The issue still persists.

On Kernel 6.5.13-5-pve there wasn't any issue with RAM usage. Today I tried to downgrade kernel on one node, so I will post an update if it helped.

Every day, after the VM backup runs, RAM usage on some nodes in my cluster increases by 5 GB to 9 GB. This continues until the node crashes.

It started after the upgrade to Proxmox VE 8.2.2 and kernel 6.8.4-3-pve. We use Ceph for storage. At first I thought Ceph was the cause, but some nodes without Ceph show the same issue.

This is the output of free -h on a node without Ceph:

Code:

                   total        used        free      shared  buff/cache   available
    Mem:           251Gi       146Gi        58Gi        94Mi       977Mi       105Gi
    Swap:          3.7Gi       3.7Gi       6.2Mi

The memory usage graph in the UI says that 192 GB is used. Today I migrated all VMs off the node and it is still using 52 GB of RAM:

Output from free -h:

Code:

                   total        used        free      shared  buff/cache   available
    Mem:           251Gi       3.8Gi       198Gi        70Mi       654Mi       247Gi
    Swap:          3.7Gi       202Mi       3.5Gi

Here is the graph of memory usage for the last month:

The biggest drop is the reboot for the kernel upgrade from 6.8.4-3-pve to 6.8.8-2-pve. The issue still persists.

On kernel 6.5.13-5-pve there was no issue with RAM usage. Today I tried downgrading the kernel on one node; I will post an update on whether it helped.
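For anyone who wants to compare numbers between kernels, here is a minimal sketch of how the usage could be logged from /proc/meminfo. It is only an illustration: the function names `parse_meminfo` and `summarize` are made up for this post, and the "used" figure is a rough approximation (total minus free, buffers, page cache and reclaimable slab), not necessarily what free prints. It also reports unreclaimable slab separately, since memory that stays used with no VMs running may be held by the kernel rather than by processes.

```python
def parse_meminfo(text):
    """Parse /proc/meminfo-style text into a dict of field name -> integer.

    Most fields are in KiB (lines ending in "kB"); a few, such as
    HugePages_Total, are plain counts and are stored as-is.
    """
    fields = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        if not sep:
            continue  # skip lines that are not "Name: value"
        parts = rest.split()
        if parts and parts[0].isdigit():
            fields[name.strip()] = int(parts[0])
    return fields


def summarize(fields):
    """Return rough usage figures in GiB (an approximation, see above)."""
    kib_per_gib = 1024 * 1024
    total = fields["MemTotal"]
    # Memory that is free or that the kernel can normally give back.
    reclaimable = (
        fields["MemFree"]
        + fields["Buffers"]
        + fields["Cached"]
        + fields["SReclaimable"]
    )
    return {
        "total_gib": total / kib_per_gib,
        "used_gib": (total - reclaimable) / kib_per_gib,
        "slab_unreclaimable_gib": fields["SUnreclaim"] / kib_per_gib,
    }
```

On a node this could be called once a day as `summarize(parse_meminfo(open("/proc/meminfo").read()))` and the result appended to a log, so the growth after each backup can be compared between the 6.5 and 6.8 kernels.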