Hello and happy new year!

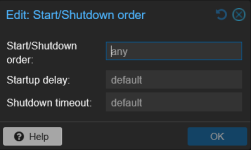

I read the documentation on automatic startup and shutdown of virtual machines here: https://pve.proxmox.com/pve-docs/chapter-qm.html#qm_startup_and_shutdown

I have a cluster with 4 nodes and about 10 virtual machines per node.

After many attempts, I realized that Proxmox manages automatic startup and shutdown per node, not at cluster level.

This means that if I configure VM101 on node2 with a delay of 1 minute, the virtual machines on node1, node3 and node4 are started without regard to the delay set for VM101.

Currently, I'm using a local script to manage the startup of all my VMs at cluster level.

It works, but I don't think it is a good solution, and I think Proxmox could handle this in a smarter way.

I'm leaving the script I use here in case it is useful to the Proxmox team, who could adapt it to manage the startup and shutdown process at cluster level.

Thank you in advance.

# cat /etc/pve/pve-avviamacchinevirtuali.sh

#!/bin/bash

# File listing the VMs that must NOT be started

EXCLUDE_FILE="/etc/pve/pve-avviamacchinevirtuali-daescludere.conf"

# File listing the VMs to start in order, each with its delay

VM_ORDER_FILE="/etc/pve/pve-avviamacchinevirtuali-elenco.conf"

# Lock file to prevent simultaneous execution on several nodes

LOCK_FILE="/etc/pve/pve-avviamacchinevirtuali.lock"

# Function to get the node where a specific VM resides

get_vm_node() {

VMID=$1

NODE=$(pvesh get /cluster/resources --type vm --noborder --output-format json \
| MACCHINA="$VMID" perl -MJSON -ne 'my $j=decode_json($_); for my $res ($j->@*) { if ($res->{type} =~ /qemu|lxc/ && $res->{id} eq "qemu/$ENV{MACCHINA}") { print $res->{node}."\n"; last; }}')

echo $NODE

}

# Function to create the lock file

create_lock() {

if [ -f "$LOCK_FILE" ]; then

echo "Another node is executing this script - exit!"

exit 0

else

touch "$LOCK_FILE"

# From now on, remove the lock on any exit, including failures.

# The trap is set only here, so a node that did not get the lock never deletes another node's lock.

trap remove_lock EXIT

fi

}

# Function to remove the lock file

remove_lock() {

rm -f "$LOCK_FILE"

}

# Check if file VM to exclude exists

if [[ ! -f "$EXCLUDE_FILE" ]]; then

echo "File $EXCLUDE_FILE not found! Exit."

exit 1

fi

# Create an array with VMID to exclude

EXCLUDE_VMS=()

while IFS= read -r line; do

# Strip comments and surrounding whitespace

VMID=$(echo "$line" | sed 's/#.*//g' | xargs)

if [[ -n "$VMID" ]]; then

EXCLUDE_VMS+=("$VMID")

fi

done < "$EXCLUDE_FILE"

# Check if a VM is set to be excluded

is_excluded() {

local vmid=$1

for excluded_vmid in "${EXCLUDE_VMS[@]}"; do

if [[ "$vmid" == "$excluded_vmid" ]]; then

return 0 # VM found in the list

fi

done

return 1 # VM not found in the list

}

# Create file lock

create_lock

# Wait so that Ceph has time to start correctly

SECONDI=120

echo "Waiting $SECONDI seconds before starting any VM..."

sleep $SECONDI

# Check that the file listing the VMs to start in a specific order, each with its own delay, exists

if [[ ! -f $VM_ORDER_FILE ]]; then

echo "File $VM_ORDER_FILE not found!"

exit 1

fi

# Loop on every VM

while read -r VMID DELAY; do

# Skip blank lines and comments

if [[ -z "$VMID" || "$VMID" =~ ^# ]]; then

continue

fi

if [[ ! -z "$VMID" && ! -z "$DELAY" ]]; then

# Get the node where VM lives

NODE=$(get_vm_node $VMID)

if [[ -z "$NODE" ]]; then

echo "VMID $VMID not found inside the cluster!"

continue

fi

# Get the state of the VM in JSON format

stato=$(pvesh get /nodes/${NODE}/qemu/${VMID}/status/current --output-format json 2>/dev/null)

# Extract the field "status" with jq

STATUS=$(echo "$stato" | jq -r '.status')

if [[ "$STATUS" == "stopped" ]]; then

# Start the VM on the node

echo "Start VMID $VMID on node $NODE..."

pvesh create /nodes/${NODE}/qemu/${VMID}/status/start

# Delay before starting the next VM

echo "Waiting $DELAY seconds before starting the next VM..."

sleep $DELAY

else

echo "VMID $VMID is already started on node $NODE"

fi

fi

done < "$VM_ORDER_FILE"

# Start the remaining VMs

VM_LIST=$(pvesh get /cluster/resources --type vm --output-format json)

# Loop for every VM at cluster level

echo "$VM_LIST" | jq -c '.[]' | while read -r VM; do

VMID=$(echo $VM | jq -r '.vmid')

NODE=$(echo $VM | jq -r '.node')

STATUS=$(echo $VM | jq -r '.status')

# Skip empty rows and comments

if [[ -z "$VMID" || "$VMID" =~ ^# ]]; then

continue

fi

# Check if VM is excluded from startup

if is_excluded "$VMID"; then

echo "VMID $VMID is excluded, it will not be started!"

continue

fi

# Check that the VM is stopped (status: "stopped") before starting it

if [[ "$STATUS" == "stopped" ]]; then

echo "Start VMID $VMID on node $NODE..."

# I have to use the qm command instead of pvesh, because with pvesh it is not possible to set a timeout for the startup process.

# For example, I have a VM with more than 20 network interfaces that needs more than 40-45 seconds to start, and with pvesh

# the startup fails in that case!

#pvesh create /nodes/${NODE}/qemu/${VMID}/status/start

ssh -n -o BatchMode=yes root@${NODE} qm start ${VMID} --timeout=300

# Delay before starting the next VM

DELAY=10

echo "Waiting $DELAY seconds before starting the next VM..."

sleep $DELAY

else

echo "VMID $VMID is already started on node $NODE"

fi

done

# Start the Zabbix server as the last VM to avoid a flood of alert messages!

#

# Get the state of the VM

NODE=$(get_vm_node 104)

stato=$(pvesh get /nodes/${NODE}/qemu/104/status/current --output-format json 2>/dev/null)

# Get "status" with jq

STATUS=$(echo "$stato" | jq -r '.status')

if [[ "$STATUS" == "stopped" ]]; then

DELAY=120

echo "Waiting $DELAY seconds before starting the Zabbix server..."

sleep $DELAY

pvesh create /nodes/${NODE}/qemu/104/status/start

else

echo "VMID 104 is already started on node $NODE"

fi

echo "All VMs started!"

# Remove lock file

remove_lock
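
The create_lock function in the script first tests for the lock file and then creates it, so in principle two nodes could both pass the test before either one creates the file. A minimal sketch of a single-step variant follows. It assumes that `mkdir` fails atomically when the directory already exists, which holds on local filesystems; whether it also holds under /etc/pve has not been verified here. The names `acquire_lock`, `release_lock`, `DEMO_ROOT` and `LOCK_DIR` are hypothetical, and the demo uses a temporary directory instead of /etc/pve.

```bash
#!/bin/bash

# Hypothetical demo location; a real deployment would need a path shared by all nodes.
DEMO_ROOT=$(mktemp -d)
LOCK_DIR="$DEMO_ROOT/startup.lock"

# Try to take the lock. mkdir either creates the directory or fails,
# so the check and the creation happen in one step.
acquire_lock() {
    mkdir "$LOCK_DIR" 2>/dev/null
}

# Release the lock; harmless if the lock is not held.
release_lock() {
    rmdir "$LOCK_DIR" 2>/dev/null || true
}

if acquire_lock; then
    # Install the cleanup trap only once this process owns the lock,
    # so a process that failed to acquire it never removes someone else's lock.
    trap release_lock EXIT
    echo "Lock acquired"
else
    echo "Another node is executing this script - exit!"
fi
```

A stale lock left behind by a crashed node would still have to be removed by hand, exactly as with the file-based lock.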

# cat /etc/pve/pve-avviamacchinevirtuali-elenco.conf

#ID #SLEEP-IN-SECONDS

113 120

127 120
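
The order file shown here holds one `VMID DELAY` pair per line, with `#` for comments. The entries can be validated before any VM is started, as in the sketch below. The function name `read_start_order` and the fallback delay of 10 seconds for lines without a delay are assumptions made for illustration; the script itself silently skips such lines.

```bash
#!/bin/bash

# Print "VMID DELAY" pairs from an order file, skipping blank lines, comments,
# and entries whose fields are not plain numbers.
# $1: path to the order file
# $2: delay used when a line has no delay (assumed default: 10)
read_start_order() {
    local file=$1 default_delay=${2:-10} vmid delay _rest
    # The "|| [[ -n ... ]]" part also handles a last line without a trailing newline.
    while read -r vmid delay _rest || [[ -n "$vmid" ]]; do
        if [[ -z "$vmid" || "$vmid" == \#* ]]; then
            continue
        fi
        delay=${delay:-$default_delay}
        if [[ "$vmid" =~ ^[0-9]+$ && "$delay" =~ ^[0-9]+$ ]]; then
            echo "$vmid $delay"
        else
            echo "Ignoring malformed line: $vmid $delay" >&2
        fi
    done < "$file"
    return 0
}

# Demo with a temporary file in the same format as the order file.
order_file=$(mktemp)
printf '%s\n' '#ID #SLEEP-IN-SECONDS' '113 120' '' '127 120' '130' > "$order_file"
read_start_order "$order_file"
rm -f "$order_file"
```

The main loop could then read from `read_start_order "$VM_ORDER_FILE"` instead of reading the file directly, so that malformed lines are reported instead of being passed on to `sleep`.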

# cat /etc/pve/pve-avviamacchinevirtuali-daescludere.conf

# List of VMs that must not be started, one per line!

416
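
The script checks the exclusion list with a linear scan over an array. With bash 4 or later, an associative array gives the same answer with a direct lookup. This is only a sketch under that assumption; `load_excluded` and the `EXCLUDED` array are hypothetical names, and the demo reads a temporary file instead of the real exclusion file.

```bash
#!/bin/bash

# Set of excluded VMIDs, keyed by VMID (associative arrays need bash 4+).
declare -A EXCLUDED=()

# Load VMIDs from a file, one per line, ignoring comments and blank lines.
load_excluded() {
    local file=$1 line vmid
    while IFS= read -r line || [[ -n "$line" ]]; do
        vmid=${line%%#*}              # drop everything from the first '#'
        vmid=${vmid//[[:space:]]/}    # drop all whitespace
        if [[ -n "$vmid" ]]; then
            EXCLUDED[$vmid]=1
        fi
    done < "$file"
    return 0
}

# Succeeds (returns 0) when the given VMID is in the exclusion set.
is_excluded() {
    [[ -n "${EXCLUDED[$1]:-}" ]]
}

# Demo with a temporary file in the same format as the exclusion file.
exclude_file=$(mktemp)
printf '%s\n' '# excluded VMs, one per line' '416' > "$exclude_file"
load_excluded "$exclude_file"
rm -f "$exclude_file"

if is_excluded 416; then echo "416 is excluded"; fi
if ! is_excluded 101; then echo "101 is not excluded"; fi
```

For a handful of VMs the linear scan is perfectly adequate; the lookup mainly keeps the check short.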
