Hello, I've just resized an HDD RAID on my Proxmox machine.

Then I resized the LVM pool using lvextend, and then I resized the CT's volume from 5000G to 6000G via the web UI (Resources -> select mp -> Volume Action -> Resize).
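For reference, a CLI sketch of roughly the same two steps. The exact lvextend arguments are not given in this post, so `-l +100%FREE` is an assumption, as is the mount point ID `mp0`; the pool `hdd/hdd` and CT ID 128 are taken from the lvs output further down. It only prints the commands unless DRY_RUN=0 is set.

```shell
#!/bin/sh
# Dry-run sketch: prints each command instead of executing it unless DRY_RUN=0.
# Assumptions: "-l +100%FREE" and "mp0" are illustrative, not taken from the post.
DRY_RUN="${DRY_RUN:-1}"

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

resize_steps() {
    # Grow the thin pool "hdd" in VG "hdd" into the VG's free space.
    run lvextend -l +100%FREE hdd/hdd
    # CLI counterpart of the web UI resize: set the CT 128 mount point volume to 6000G.
    run pct resize 128 mp0 6000G
}

resize_steps
```

On a real node this would be run as root with DRY_RUN=0, after checking that the pool name and mount point ID match the actual setup.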

However, the problem is that inside the LXC, df -h still reports the volume as a 5000G one.

So I'm here asking for help with this.

It's quite frustrating, as normally just pressing Resize in the web UI does everything. I also confirmed in the task log that the task ended successfully.

I've attached the captures & outputs of several disk-related commands below. I'm running PVE 8.1.3 on a Dell R740xd.

Any suggestions are welcome.

Thanks in advance!

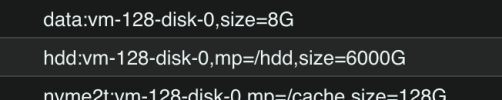

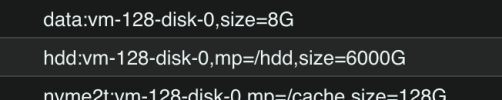

From PVE, LXC > Resources

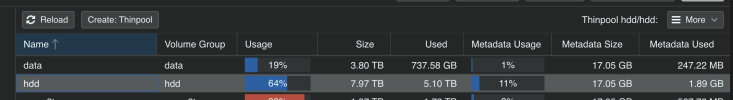

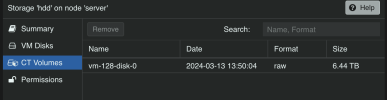

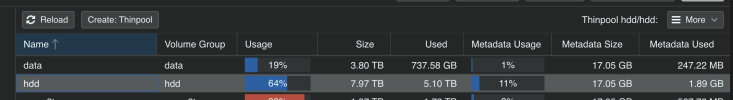

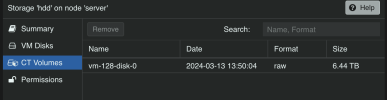

Node storage setup

Inside the Host:

Code:

    root@server:~# lsblk
    NAME                         MAJ:MIN RM  SIZE RO TYPE MOUNTPOINTS
    sda                            8:0    0  3.5T  0 disk
    └─sda3                         8:3    0  3.5T  0 part
      ├─data-data_tmeta          252:2    0 15.9G  0 lvm
      │ └─data-data-tpool        252:8    0  3.5T  0 lvm
    (...)
    sdb                            8:16   0  7.3T  0 disk
    ├─hdd-hdd_tmeta              252:6    0 15.9G  0 lvm
    │ └─hdd-hdd-tpool            252:49   0  7.2T  0 lvm
    │   ├─hdd-hdd                252:50   0  7.2T  1 lvm
    │   └─hdd-vm--128--disk--0   252:51   0  5.9T  0 lvm
    └─hdd-hdd_tdata              252:7    0  7.2T  0 lvm
      └─hdd-hdd-tpool            252:49   0  7.2T  0 lvm
        ├─hdd-hdd                252:50   0  7.2T  1 lvm
        └─hdd-vm--128--disk--0   252:51   0  5.9T  0 lvm

Code:

    root@server:~# lvs
    LV            VG     Attr       LSize   Pool   Origin Data%  Meta%  Move Log Cpy%Sync Convert
    (...)
    data          data   twi-aotz--  <3.46t               19.40  1.45
    vm-152-disk-0 data   Vwi-a-tz-- 128.00g data          58.02
    vm-153-disk-0 data   Vwi-a-tz--  64.00g data          7.13
    hdd           hdd    twi-aotz--   7.24t               64.09  11.07
    vm-128-disk-0 hdd    Vwi-aotz--  <5.86t hdd           79.24
    nvme2t        nvme2t twi-aotz--  <1.79t               90.37  3.33
    vm-108-disk-0 nvme2t Vwi-aotz-- 128.00g nvme2t        99.96
    vm-112-disk-0 nvme2t Vwi-a-tz-- 128.00g nvme2t        95.17
    vm-128-disk-0 nvme2t Vwi-aotz-- 128.00g nvme2t        99.95
    vm-151-disk-0 nvme2t Vwi-a-tz--   1.25t nvme2t        99.75
    root          pve    -wi-ao---- 214.50g
    swap          pve    -wi-ao----   8.00g

Inside the LXC:

Code:

    root@rclone:/# df -h
    Filesystem                           Size  Used Avail Use% Mounted on
    /dev/mapper/data-vm--128--disk--0    7.8G  785M  6.6G  11% /
    /dev/mapper/hdd-vm--128--disk--0     4.9T  4.6T   23M 100% /hdd
    /dev/mapper/nvme2t-vm--128--disk--0  125G  119G   99M 100% /cache
    none                                 492K  4.0K  488K   1% /dev
    efivarfs                             304K  210K   90K  71% /sys/firmware/efi/efivars
    tmpfs                                 63G     0   63G   0% /dev/shm
    tmpfs                                 26G   80K   26G   1% /run
    tmpfs                                5.0M     0  5.0M   0% /run/lock
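As a quick sanity check on the numbers above, here is a small sketch that converts the sizes from the web UI into the units lvs and df use. It assumes that "G" in the PVE web UI means GiB; `gib_to_tib` is just a helper name made up for this example.

```shell
#!/bin/sh
# Hypothetical helper: convert a size in GiB to TiB with two decimals.
gib_to_tib() {
    awk -v gib="$1" 'BEGIN { printf "%.2f\n", gib / 1024 }'
}

gib_to_tib 6000   # new size set in the web UI -> 5.86
gib_to_tib 5000   # size before the resize    -> 4.88
```

So the <5.86t that lvs shows on the host corresponds to the new 6000G, while the 4.9T that df -h shows for /hdd is still about the old 5000G. It looks like the logical volume grew but the size seen inside the container did not.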

Code:

    root@rclone:/# lsblk
    NAME        MAJ:MIN RM   SIZE RO TYPE MOUNTPOINTS
    sda           8:0    0   3.5T  0 disk
    `-sda3        8:3    0   3.5T  0 part
    sdb           8:16   0   7.3T  0 disk
    sdc           8:32   0 223.5G  0 disk
    |-sdc1        8:33   0  1007K  0 part
    |-sdc2        8:34   0     1G  0 part
    `-sdc3        8:35   0 222.5G  0 part
    nvme2n1     259:0    0 931.5G  0 disk
    |-nvme2n1p1 259:1    0 931.5G  0 part
    `-nvme2n1p9 259:2    0     8M  0 part
    nvme1n1     259:3    0 931.5G  0 disk
    |-nvme1n1p1 259:4    0 931.5G  0 part
    `-nvme1n1p9 259:5    0     8M  0 part
    nvme0n1     259:6    0   1.8T  0 disk
