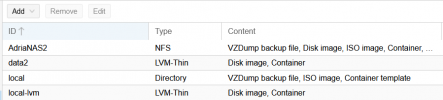

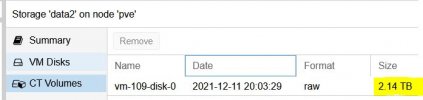

I have two RAID 10 spans configured on my server (4 x 1TB HDDs per RAID 10 span).

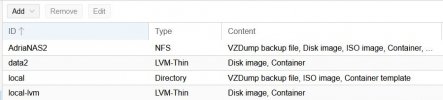

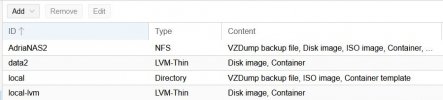

One span holds the local and local-lvm storages (2TB).

I turned the second span into its own LVM-thin storage, which I called data2 (2TB).
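For reference, here's the capacity I'd expect from each span. This is a rough sketch that assumes the drives are 1 TB in the vendor's decimal sense and that `lvs` prints sizes in binary units (its lowercase `t` is TiB); the helper name is my own:

```python
TB = 10**12   # decimal terabyte, how drive capacity is usually labelled
TIB = 2**40   # binary tebibyte, what lvs prints as "t"


def raid10_usable_bytes(disks: int, disk_bytes: int) -> int:
    """Usable capacity of a RAID 10 set: disks are mirrored in pairs and the
    pairs are striped, so half of the raw capacity is usable."""
    if disks < 4 or disks % 2:
        raise ValueError("RAID 10 needs an even number of disks, at least 4")
    return disks // 2 * disk_bytes


usable = raid10_usable_bytes(4, 1 * TB)  # 4 x 1TB HDDs per span
print(f"usable per span: {usable / TB:.2f} TB = {usable / TIB:.2f} TiB")
```

So a "2TB" span should show up as roughly 1.82 TiB, which is in the same ballpark as the `<1.79t` that `lvs` reports for the data2 pool.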

I've created only one container on data2. I wanted the data2 LVM-thin storage to be dedicated to that single container.

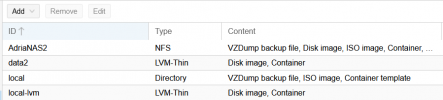

However, my LVM-thin storage (data2) is filling up quickly and I don't understand why.

When I look inside my container, it says it's only using 454GB out of 2TB, leaving 1.4TB available. OK, that sounds good...

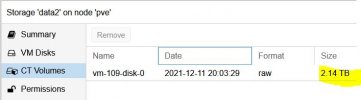

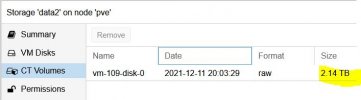

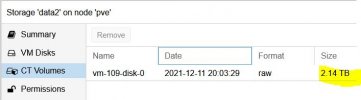

OK, but when I look at my LVM-thin storage, it's almost completely full!?

This is the only container, and the only data, living on this LVM-thin storage (data2).

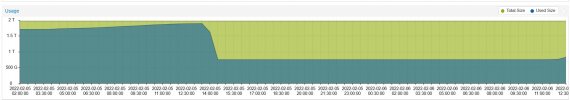

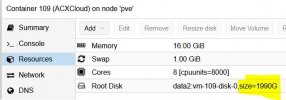

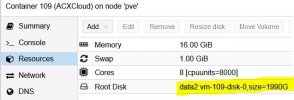

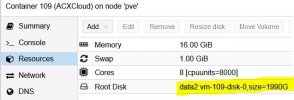

When I look at the container's resources I get even more confused: it shows a 1990GB disk on this LVM-thin storage, but the CT Volumes menu says 2.14TB?

I've pasted the `lvs` output further down.
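My unconfirmed guess is that these are the same size in different units. Assuming the Resources view's "1990GB" really means 1990 GiB (binary) and the CT Volumes menu shows decimal TB, the conversion works out like this:

```python
GIB = 2**30   # binary gibibyte
TB = 10**12   # decimal terabyte
TIB = 2**40   # binary tebibyte, what lvs prints as "t"

# ASSUMPTION: the Resources view's "1990GB" is really 1990 GiB.
disk_bytes = 1990 * GIB

as_tb = disk_bytes / TB    # decimal, as the CT Volumes menu might display it
as_tib = disk_bytes / TIB  # binary, as lvs displays it
print(f"1990 GiB = {as_tb:.2f} TB (decimal) = {as_tib:.2f} TiB (binary)")
```

If that assumption holds, 1.94 TiB would also line up with the 1.94t LSize that `lvs` shows for vm-109-disk-0.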

I don't understand what could be filling up this LVM-thin storage so much.

Was LVM-thin a poor choice as a storage pool for a single large container?

Thanks guys.


Here's the disk usage as reported inside the container:

```
Filesystem                          Size Used Avail Use% Mounted on
/dev/mapper/data2-vm--109--disk--0  2.0T 454G  1.4T  25% /
none                                492K 4.0K  488K   1% /dev
udev                                 48G    0   48G   0% /dev/tty
tmpfs                                48G  16K   48G   1% /dev/shm
tmpfs                               9.5G 224K  9.5G   1% /run
tmpfs                               5.0M    0  5.0M   0% /run/lock
tmpfs                                48G    0   48G   0% /sys/fs/cgroup
```

And here's the `lvs` output:

```
LV                          VG    Attr         LSize Pool  Origin                      Data% Meta% Move Log Cpy%Sync Convert
data2                       data2 twi-aotz--  <1.79t                                   86.83  4.82
vm-109-disk-0               data2 Vwi-aotz--   1.94t data2                             79.84
data                        pve   twi-aotz--  <1.67t                                    8.08  0.58
root                        pve   -wi-ao----  96.00g
snap_vm-100-disk-0_Prima    pve   Vri---tz-k  40.00g data  vm-100-disk-0
snap_vm-101-disk-1_Working  pve   Vri---tz-k  32.00g data  vm-101-disk-1
snap_vm-103-disk-0_PassBolt pve   Vri---tz-k  12.00g data
snap_vm-107-disk-1_Working  pve   Vri---tz-k  12.00g data  vm-107-disk-1
snap_vm-109-disk-1_Working  pve   Vri---tz-k   1.23t data  vm-109-disk-1
snap_vm-110-disk-0_Certbot  pve   Vri---tz-k 100.00g data  vm-110-disk-0
swap                        pve   -wi-ao----   8.00g
vm-100-disk-0               pve   Vwi-aotz--  40.00g data                               5.85
vm-100-state-Prima          pve   Vwi-a-tz-- <32.49g data                               5.65
vm-101-disk-1               pve   Vwi-aotz--  32.00g data                              30.93
vm-102-disk-0               pve   Vwi-aotz--  12.00g data                              28.66
vm-103-disk-0               pve   Vwi-aotz--  12.00g data  snap_vm-103-disk-0_PassBolt 28.06
vm-104-disk-0               pve   Vwi-aotz--  12.00g data                              20.52
vm-105-disk-0               pve   Vwi-aotz--  12.00g data                              45.09
vm-107-disk-1               pve   Vwi-aotz--  12.00g data                              26.01
vm-109-disk-1               pve   Vwi-a-tz--   1.23t data                               4.97
vm-110-disk-0               pve   Vwi-a-tz-- 100.00g data                              11.16
vm-111-disk-2               pve   Vwi-aotz--  50.00g data                              29.33
vm-111-state-First          pve   Vwi-a-tz--  <8.49g data                              46.13
vm-112-disk-1               pve   Vwi-a-tz--  25.00g data                               8.23
vm-113-disk-0               pve   Vwi-aotz--  10.00g data                              13.29
```
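Putting the two outputs side by side: this is back-of-the-envelope arithmetic on the rounded figures that `lvs` and `df -h` print, assuming both use binary units (`t` = TiB, `G` = GiB). The variable names are just mine:

```python
# Rough comparison of the lvs and df -h figures posted above.
# ASSUMPTION: both tools print binary units (lvs "t" = TiB, df -h "G" = GiB).
# Inputs are the rounded values the tools display, so results are approximate.

POOL_SIZE_TIB = 1.79   # data2 pool LSize, shown as "<1.79t"
POOL_DATA_PCT = 86.83  # data2 pool Data%
VOL_SIZE_TIB = 1.94    # vm-109-disk-0 LSize
VOL_DATA_PCT = 79.84   # vm-109-disk-0 Data%
DF_USED_GIB = 454      # "Used" for / in the container's df -h output

pool_used_tib = POOL_SIZE_TIB * POOL_DATA_PCT / 100
vol_allocated_tib = VOL_SIZE_TIB * VOL_DATA_PCT / 100
df_used_tib = DF_USED_GIB / 1024
unaccounted_tib = vol_allocated_tib - df_used_tib
overprovisioned = VOL_SIZE_TIB > POOL_SIZE_TIB

rows = [
    ("pool data in use", pool_used_tib),
    ("allocated to vm-109-disk-0", vol_allocated_tib),
    ("used according to df", df_used_tib),
    ("allocated but not used per df", unaccounted_tib),
]
for label, tib in rows:
    print(f"{label:<31} {tib:.2f} TiB")
print(f"{'volume larger than pool':<31} {overprovisioned}")
```

On these numbers, nearly all of the pool's used space belongs to vm-109-disk-0, roughly 1.1 TiB more is allocated to that volume than `df` reports as used inside the container, and the 1.94t volume is larger than the <1.79t pool it lives in.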

