Hi,

Our scenario is as follows:

5 nodes in HA

2 nodes installed at one site

2 nodes installed at a second site

1 node installed elsewhere, without Ceph storage

All sites are connected over a 10 Gbit LAN

If I power off both nodes at one site at the same time, 50% of the Ceph OSDs go down. The data is still there, but performance becomes so slow that it is impossible to work. Why is that?
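To make the 50% figure concrete, here is a minimal sketch of the arithmetic. It assumes each of the four storage nodes carries the same number of OSDs. The node names and the count of 4 OSDs per node are hypothetical and only there for illustration.

```python
def osd_down_fraction(osds_per_node, down_nodes):
    """Return the fraction of all OSDs hosted on the powered-off nodes."""
    total = sum(osds_per_node.values())
    down = sum(osds_per_node[name] for name in down_nodes)
    return down / total


# Hypothetical layout: node names and OSD counts are assumptions.
# node1/node2 share one site, node3/node4 share the other,
# and node5 has no Ceph storage.
cluster = {"node1": 4, "node2": 4, "node3": 4, "node4": 4, "node5": 0}

fraction = osd_down_fraction(cluster, ["node1", "node2"])
print(f"{fraction:.0%} of OSDs down")  # prints "50% of OSDs down"
```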

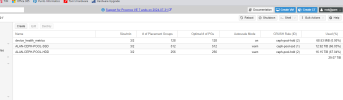

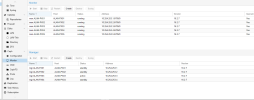

I have attached screenshots of the Ceph configuration and some taken during the shutdown of the two nodes.

Question: would performance have improved and returned to normal once the rebalancing had finished?

Thank you
