Hi,

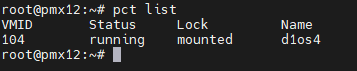

I have a container that worked perfectly fine until last week. It is now impossible to do anything with it, from either the console or the terminal.

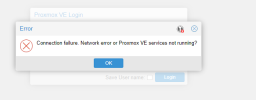

It seems like it can't reach the storage. When I go into the GUI and try to view the Content section of this storage, it just stays loading forever:

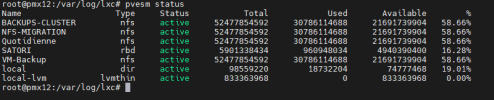

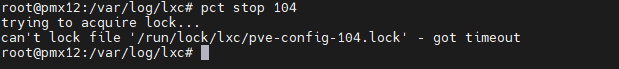

Here is the configuration information:

pveversion:

    proxmox-ve: 6.1-2 (running kernel: 5.3.13-1-pve)
    pve-manager: 6.1-5 (running version: 6.1-5/9bf06119)
    pve-kernel-5.3: 6.1-1
    pve-kernel-helper: 6.1-1
    pve-kernel-5.0: 6.0-11
    pve-kernel-5.3.13-1-pve: 5.3.13-1
    pve-kernel-5.0.21-5-pve: 5.0.21-10
    pve-kernel-5.0.21-2-pve: 5.0.21-7
    pve-kernel-5.0.15-1-pve: 5.0.15-1
    ceph: 14.2.6-pve1
    ceph-fuse: 14.2.6-pve1
    corosync: 3.0.2-pve4
    criu: 3.11-3
    glusterfs-client: 5.5-3
    ifupdown: 0.8.35+pve1
    ksm-control-daemon: 1.3-1
    libjs-extjs: 6.0.1-10
    libknet1: 1.13-pve1
    libpve-access-control: 6.0-5
    libpve-apiclient-perl: 3.0-2
    libpve-common-perl: 6.0-9
    libpve-guest-common-perl: 3.0-3
    libpve-http-server-perl: 3.0-3
    libpve-storage-perl: 6.1-3
    libqb0: 1.0.5-1
    libspice-server1: 0.14.2-4~pve6+1
    lvm2: 2.03.02-pve3
    lxc-pve: 3.2.1-1
    lxcfs: 3.0.3-pve60
    novnc-pve: 1.1.0-1
    proxmox-mini-journalreader: 1.1-1
    proxmox-widget-toolkit: 2.1-1
    pve-cluster: 6.1-2
    pve-container: 3.0-15
    pve-docs: 6.1-3
    pve-edk2-firmware: 2.20191127-1
    pve-firewall: 4.0-9
    pve-firmware: 3.0-4
    pve-ha-manager: 3.0-8
    pve-i18n: 2.0-3
    pve-qemu-kvm: 4.1.1-2
    pve-xtermjs: 3.13.2-1
    qemu-server: 6.1-4
    smartmontools: 7.0-pve2
    spiceterm: 3.1-1
    vncterm: 1.6-1
    zfsutils-linux: 0.8.2-pve2

The pct conf file:

    arch: amd64
    cores: 2
    hostname: d1os4
    memory: 6144
    net0: name=eth0,bridge=vmbr0,gw=example,hwaddr=example,ip=example,tag=5,type=veth
    onboot: 1
    ostype: ubuntu
    rootfs: SATORI:vm-104-disk-0,size=50G
    swap: 2048
    lxc.apparmor.profile: unconfined
    lxc.cgroup.devices.allow: a
    lxc.cap.drop:

The storage.cfg file:

    dir: local
            path /var/lib/vz
            content backup,iso,vztmpl

    lvmthin: local-lvm
            thinpool data
            vgname pve
            content images,rootdir

    rbd: SATORI
            content rootdir,images
            krbd 0
            pool SATORI

I tried to get more information with these commands that I found online:

    root@pmx12:/var/log/lxc# lxc-start -lDEBUG -o lxc104start.log -F -n 104
    lxc-start: 104: tools/lxc_start.c: main: 279 Container is already running
    root@pmx12:/var/log/lxc# cat lxc104start.log
    lxc-start 104 20240426134912.241 DEBUG commands - commands.c:lxc_cmd_rsp_recv:168 - Response data length for command "get_init_pid" is 0
    lxc-start 104 20240426134912.241 DEBUG commands - commands.c:lxc_cmd_rsp_recv:168 - Response data length for command "get_state" is 0
    lxc-start 104 20240426134912.241 DEBUG commands - commands.c:lxc_cmd_get_state:630 - Container "104" is in "RUNNING" state
    lxc-start 104 20240426134912.241 ERROR lxc_start - tools/lxc_start.c:main:279 - Container is already running
    root@pmx12:/var/log/lxc# df -T
    Filesystem Type 1K-blocks Used Available Use% Mounted on
    udev devtmpfs 65805112 0 65805112 0% /dev
    tmpfs tmpfs 13165924 1465052 11700872 12% /run
    /dev/mapper/pve-root ext4 98559220 18670776 74838896 20% /
    tmpfs tmpfs 65829608 67488 65762120 1% /dev/shm
    tmpfs tmpfs 5120 0 5120 0% /run/lock
    tmpfs tmpfs 65829608 0 65829608 0% /sys/fs/cgroup
    /dev/sdi2 vfat 523248 312 522936 1% /boot/efi
    tmpfs tmpfs 65829608 24 65829584 1% /var/lib/ceph/osd/ceph-7
    tmpfs tmpfs 65829608 24 65829584 1% /var/lib/ceph/osd/ceph-6
    tmpfs tmpfs 65829608 24 65829584 1% /var/lib/ceph/osd/ceph-5
    tmpfs tmpfs 65829608 24 65829584 1% /var/lib/ceph/osd/ceph-4
    /dev/fuse fuse 30720 56 30664 1% /etc/pve
    exampleip:/volume2/VM-Backup nfs 52477854592 30518762752 21959091840 59% /mnt/pve/VM-Backup
    exampleip:/volume2/VM-Backup-Incre-S nfs 52477854592 30518762752 21959091840 59% /mnt/pve/Quotidienne
    exampleip:/volume1/Logitheque nfs 52477854592 30518762752 21959091840 59% /mnt/pve/NFS-MIGRATION
    exampleip:/volume1/cluster-backup nfs 52477854592 30518762752 21959091840 59% /mnt/pve/cluster-backup
    tmpfs tmpfs 13165920 0 13165920 0% /run/user/0
    exampleip:/volume1/cluster-backup/dump test nfs 52477854592 30518762752 21959091840 59% /mnt/pve/NFS-MIGRATION
    exampleip:/volume1/cluster-backup/dump test nfs 52477854592 30518762752 21959091840 59% /mnt/pve/Quotidienne
    exampleip:/volume1/cluster-backup/dump test nfs 52477854592 30518762752 21959091840 59% /mnt/pve/VM-Backup
    exampleip:/volume1/cluster-backup/dump test nfs 52477854592 30518762752 21959091840 59% /mnt/pve/BACKUPS-CLUSTER
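One thing that stands out in the `df -T` output above: `/mnt/pve/NFS-MIGRATION`, `/mnt/pve/Quotidienne` and `/mnt/pve/VM-Backup` each appear twice with different sources, which suggests one NFS export is mounted on top of another. This may be unrelated to the container problem. A minimal sketch for listing such stacked mount points follows; `dup_mountpoints` is a hypothetical helper name, not a Proxmox tool.

```shell
# Hypothetical helper: print every mount point that occurs more than once.
# $1 is a file in /proc/mounts format (defaults to /proc/mounts itself).
dup_mountpoints() {
    # Field 2 is the mount point; spaces in paths are escaped as \040,
    # so splitting on whitespace is safe here.
    awk '{ print $2 }' "${1:-/proc/mounts}" | sort | uniq -d
}

dup_mountpoints
```

An empty result would mean no mount point is used twice on the host.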

    root@pmx12:/var/log/lxc# pct enter 104
    lxc-attach: 104: attach.c: lxc_attach: 1136 No such file or directory - Failed to attach to mnt namespace of 580059
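The "No such file or directory" in that error may mean that PID 580059, the container's init process, no longer has a usable entry under `/proc`, even though LXC still reports the container as RUNNING. That is an assumption, not something the logs confirm. A minimal sketch to check it, run as root on the host (`check_mnt_ns` is a hypothetical helper name):

```shell
# Hypothetical helper: report whether a PID still exposes a mount namespace in /proc.
check_mnt_ns() {
    pid="$1"
    if [ -e "/proc/$pid/ns/mnt" ]; then
        echo "PID $pid: mount namespace entry present"
    else
        echo "PID $pid: no mount namespace entry (process not found)"
        return 1
    fi
}

# 580059 is the PID from the lxc-attach error above.
check_mnt_ns 580059 || true
```

If the entry is missing, the container's state as seen by LXC is probably stale rather than the container actually running.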

As you can see, there is probably a storage problem, but I really don't know how to solve it. If someone could help, it would be awesome.
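Since the rootfs lives on the RBD storage `SATORI`, the Ceph side could be checked with something like the sketch below. It assumes the standard `ceph`, `rbd` and `pvesm` CLIs are on the host; `run_check` is a hypothetical wrapper, and the 10-second limit is an arbitrary choice so that a hung storage call cannot block the shell the way the GUI does.

```shell
# Hypothetical wrapper: run a command with a time limit and report failures.
run_check() {
    if ! command -v "$1" >/dev/null 2>&1; then
        echo "skip: $1 not installed"
        return 0
    fi
    # timeout(1) exits non-zero if the command fails or exceeds 10 seconds.
    timeout 10 "$@" || echo "failed or timed out: $*"
}

run_check ceph -s                        # overall cluster state
run_check ceph health detail             # reasons behind any warning or error
run_check pvesm status                   # how Proxmox sees each storage
run_check rbd ls SATORI                  # can the pool be listed at all?
run_check rbd info SATORI/vm-104-disk-0  # is the container's disk image readable?
```

A timeout on the `ceph` or `rbd` calls would point at the cluster itself rather than at container 104.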

Do you know how to get it back, or how to create a new CT from this one?

PS: I know that not upgrading PVE sucks, but it is what it is, and I have to deal with it, unfortunately.

Thanks,